Regularization And Normalization For Generative Adversarial Networks: A Review


Aug 19, 2020

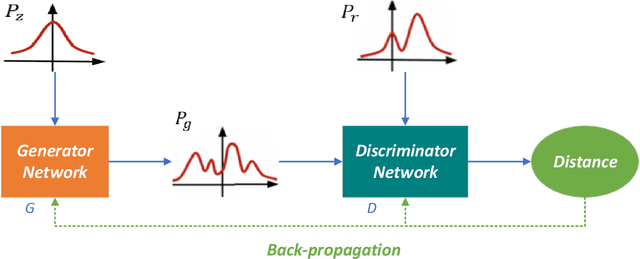

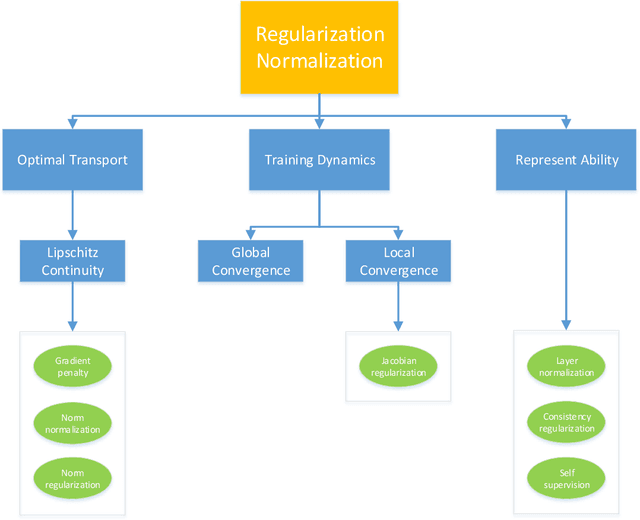

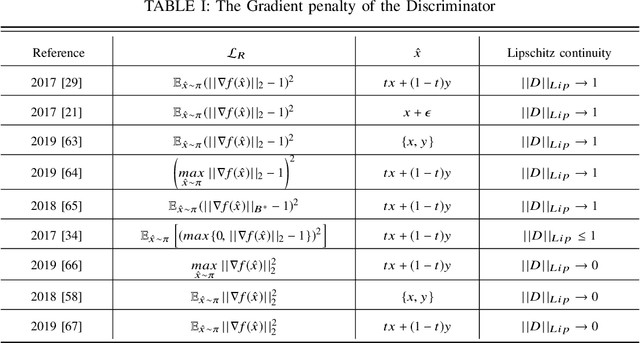

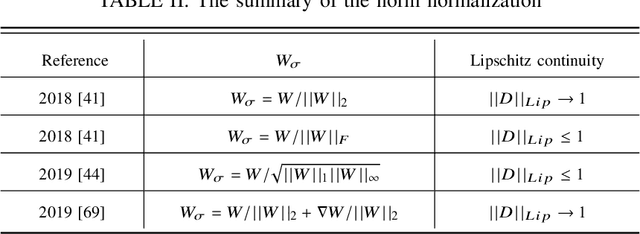

Generative adversarial networks (GANs) are a popular class of generative models, and with the development of deep networks their applications have become increasingly widespread. GAN training is generally formulated as a two-player zero-sum game between a discriminator and a generator. The lack of strong supervision makes this training difficult and prone to problems such as non-convergence, mode collapse, vanishing gradients, and sensitivity to hyperparameters. Regularization and normalization are commonly used to stabilize training. This paper reviews and summarizes research on regularization and normalization for GANs. The methods are classified into six groups: gradient penalty, norm normalization and regularization, Jacobian regularization, layer normalization, consistency regularization, and self-supervision.
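As a rough illustration of the first group, gradient penalty, the sketch below computes a WGAN-GP-style penalty λ·E[(‖∇D(x̂)‖₂ − 1)²], where x̂ is a random interpolation between a real and a generated sample and λ = 10 is the value used in WGAN-GP. This is a minimal pure-Python sketch, not the review's own code: the toy critic D(x) = Σᵢ vᵢ·tanh((Wx)ᵢ) and the names `critic_grad` and `gradient_penalty` are hypothetical, and the critic's input gradient is written out by hand where a real implementation would use a framework's automatic differentiation.

```python
import math
import random
from typing import List, Optional

Vector = List[float]
Matrix = List[List[float]]


def critic_grad(W: Matrix, v: Vector, x: Vector) -> Vector:
    """Analytic input gradient of a hypothetical toy critic
    D(x) = sum_i v[i] * tanh((W @ x)[i]). Real GANs would use autograd."""
    # Hidden pre-activations h = W x
    h = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]
    # dD/dh_i = v_i * (1 - tanh(h_i)^2)
    dh = [v_i * (1.0 - math.tanh(h_i) ** 2) for v_i, h_i in zip(v, h)]
    # Chain rule: dD/dx_j = sum_i dh_i * W[i][j]
    return [sum(dh[i] * W[i][j] for i in range(len(W))) for j in range(len(x))]


def gradient_penalty(W: Matrix, v: Vector, real: List[Vector], fake: List[Vector],
                     lam: float = 10.0, rng: Optional[random.Random] = None) -> float:
    """WGAN-GP-style penalty: lam * mean((||grad_x D(x_hat)||_2 - 1)^2).
    Assumes `real` and `fake` are equal-length, non-empty batches."""
    rng = rng or random.Random(0)
    total = 0.0
    for r, f in zip(real, fake):
        eps = rng.random()  # eps ~ U[0, 1), one draw per (real, fake) pair
        x_hat = [eps * r_j + (1.0 - eps) * f_j for r_j, f_j in zip(r, f)]
        g = critic_grad(W, v, x_hat)
        grad_norm = math.sqrt(sum(g_j * g_j for g_j in g))
        # Penalize deviation of the gradient norm from 1 (two-sided penalty)
        total += (grad_norm - 1.0) ** 2
    return lam * total / len(real)


if __name__ == "__main__":
    W = [[0.5, -0.3], [0.8, 0.1], [-0.2, 0.7]]
    v = [1.0, -0.5, 0.3]
    real = [[1.0, 2.0], [0.5, -1.0]]
    fake = [[0.0, 0.1], [-0.3, 0.4]]
    print(gradient_penalty(W, v, real, fake))
```

In training, a term like this would be added to the critic's loss only, pushing the critic toward unit gradient norm along lines between real and generated data. Writing the gradient by hand keeps the example dependency-free, at the cost of tying it to this single toy architecture.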
