Regional Attention with Architecture-Rebuilt 3D Network for RGB-D Gesture Recognition


Mar 09, 2021

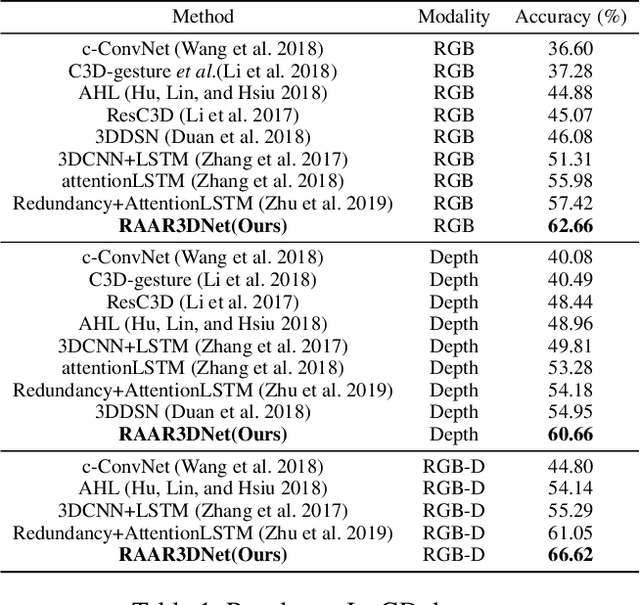

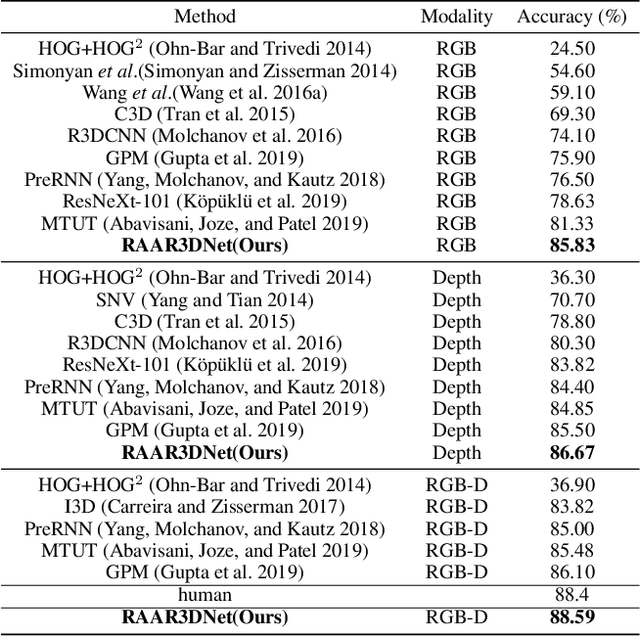

Human gesture recognition has drawn much attention in computer vision. However, recognition performance is often degraded by gesture-irrelevant factors such as the background and the performer's clothing, so focusing on the hand/arm regions is important for gesture recognition. In addition, an adaptive, architecture-searched network can outperform fixed-block networks such as ResNet, because it increases the diversity of features across the stages of the network. In this paper, we propose a regional attention with architecture-rebuilt 3D network (RAAR3DNet) for gesture recognition. Because features in the early, middle, and late stages of the network differ in shape and representational ability, we replace the fixed Inception modules throughout the network with structures rebuilt automatically via Neural Architecture Search (NAS). This enables the network to capture different levels of feature representation at different layers more adaptively. We also design a stackable regional attention module called Dynamic-Static Attention (DSA), which derives a Gaussian guidance heatmap and a dynamic motion map to highlight the hand/arm regions and the motion information in the spatial and temporal domains, respectively. Extensive experiments on two recent large-scale RGB-D gesture datasets validate the effectiveness of the proposed method and show that it outperforms state-of-the-art methods. The code is available at: https://github.com/zhoubenjia/RAAR3DNet.
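To make the two attention cues named in the abstract more concrete, the following is a minimal NumPy sketch, not the authors' DSA implementation. It assumes that hand/arm keypoint locations are already available, builds a Gaussian heatmap around them as the static cue, and uses absolute frame differences as a simple stand-in for the dynamic motion map. The function names (`gaussian_heatmap`, `motion_map`, `apply_attention`), the `sigma` value, and the residual-style reweighting are all illustrative assumptions rather than details taken from the paper.

```python
import numpy as np


def gaussian_heatmap(height, width, centers, sigma=8.0):
    """Static cue (assumed form): an (H, W) map in [0, 1] with one
    Gaussian bump per (row, col) keypoint, merged with a pixelwise max."""
    rows = np.arange(height, dtype=np.float64)[:, None]
    cols = np.arange(width, dtype=np.float64)[None, :]
    heatmap = np.zeros((height, width), dtype=np.float64)
    for r, c in centers:
        bump = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        heatmap = np.maximum(heatmap, bump)
    return heatmap


def motion_map(frames):
    """Dynamic cue (assumed form): absolute differences between consecutive
    frames of a (T, H, W) grayscale clip, scaled to [0, 1].
    Returns an array of shape (T - 1, H, W)."""
    diffs = np.abs(np.diff(frames.astype(np.float64), axis=0))
    peak = diffs.max()
    return diffs / peak if peak > 0 else diffs


def apply_attention(features, attention):
    """Hypothetical residual-style reweighting: highlighted regions are
    amplified while the original features are preserved elsewhere."""
    return features * (1.0 + attention)


if __name__ == "__main__":
    # Toy clip: 4 frames of 64x64 with a small bright patch that moves.
    clip = np.zeros((4, 64, 64))
    for t in range(4):
        clip[t, 20:24, 10 + 5 * t:14 + 5 * t] = 1.0

    static = gaussian_heatmap(64, 64, centers=[(22, 12)], sigma=6.0)
    dynamic = motion_map(clip)
    weighted = apply_attention(clip[1:], static[None, :, :] * dynamic)
    print(static.shape, dynamic.shape, weighted.shape)
```

In this sketch the two maps are multiplied so that only regions that are both near a keypoint and moving are emphasized; how the paper actually combines the spatial and temporal cues is not stated in the abstract.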
