Refined approachability algorithms and application to regret minimization with global costs

Paper and Code

Sep 15, 2020

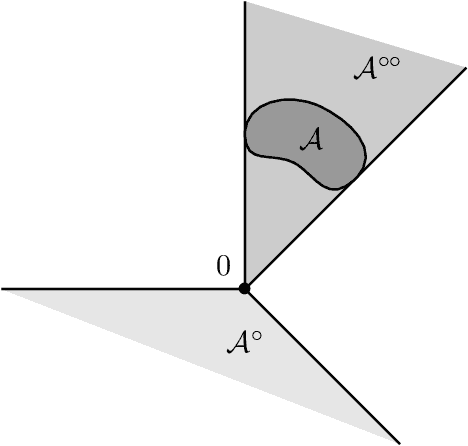

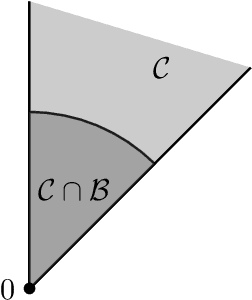

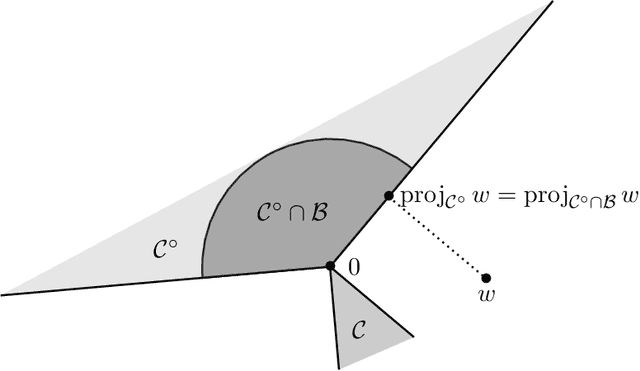

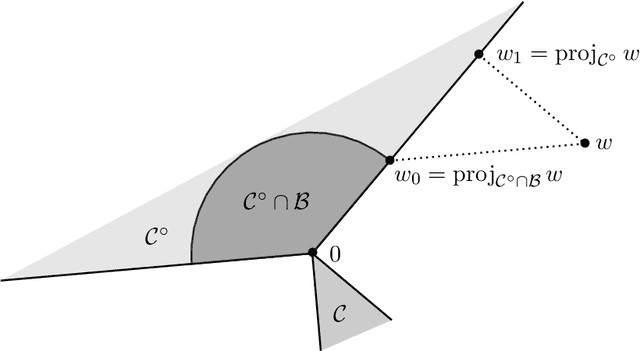

Blackwell's approachability is a framework where two players, the Decision Maker and the Environment, play a repeated game with vector-valued payoffs. The goal of the Decision Maker is to make the average payoff converge to a given set called the target. When this is possible, simple algorithms that guarantee the convergence are known. This abstract tool has been successfully used for the construction of optimal strategies in various repeated games, and has also found several applications in online learning. By extending an approach proposed by Abernethy et al. (2011), we construct and analyze a class of Follow the Regularized Leader (FTRL) algorithms for Blackwell's approachability which are able to minimize not only the Euclidean distance to the target set (as is often the case in the context of Blackwell's approachability) but a wide range of distance-like quantities. This flexibility enables us to apply these algorithms to minimize the exact quantity of interest in various online learning problems. In particular, for regret minimization with global costs, we obtain novel guarantees for general norm cost functions, and for the case of $\ell_p$ cost functions, we obtain the first regret bounds with explicit dependence on $p$ and the dimension $d$.
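To make the framework concrete, here is a minimal sketch of the classical Euclidean case only, not the FTRL algorithms of the paper. It uses regret matching, a well-known instance of Blackwell's algorithm in which the vector payoff is the per-action regret and the target set is the nonpositive orthant. The function name `regret_matching`, the random losses in $[0,1]$, and the values of `d` and `T` are assumptions chosen for illustration.

```python
import math
import random


def regret_matching(losses):
    """Run regret matching on a sequence of loss vectors with entries in [0, 1].

    Illustrative sketch: the vector payoff at each round is the regret
    vector, and the target set is the nonpositive orthant.
    Returns the cumulative regret vector after the last round.
    """
    d = len(losses[0])
    regret = [0.0] * d
    for loss in losses:
        # Direction from the Euclidean projection onto the orthant
        # to the current cumulative regret: its positive part.
        pos = [max(r, 0.0) for r in regret]
        total = sum(pos)
        if total > 0:
            # Play proportionally to positive regrets.
            x = [p / total for p in pos]
        else:
            # Already inside the target set: any action works, use uniform.
            x = [1.0 / d] * d
        expected = sum(xi * li for xi, li in zip(x, loss))
        # Regret of action i this round: expected loss minus loss of i.
        regret = [r + expected - li for r, li in zip(regret, loss)]
    return regret


rng = random.Random(0)  # fixed seed, hypothetical data
d, T = 5, 2000
losses = [[rng.random() for _ in range(d)] for _ in range(T)]
final_regret = regret_matching(losses)

# Euclidean distance from the cumulative regret to the nonpositive orthant.
dist = math.sqrt(sum(max(r, 0.0) ** 2 for r in final_regret))
print(dist / T, math.sqrt(d / T))
```

In this sketch the distance of the average payoff to the target, `dist / T`, is at most $\sqrt{d/T}$ for any loss sequence in $[0,1]^d$, which is the kind of Euclidean guarantee that the paper generalizes to other distance-like quantities.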
