Recurrent Neural Networks: An Embedded Computing Perspective

Paper and Code

Jul 23, 2019

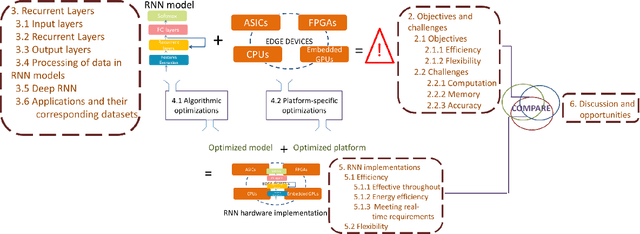

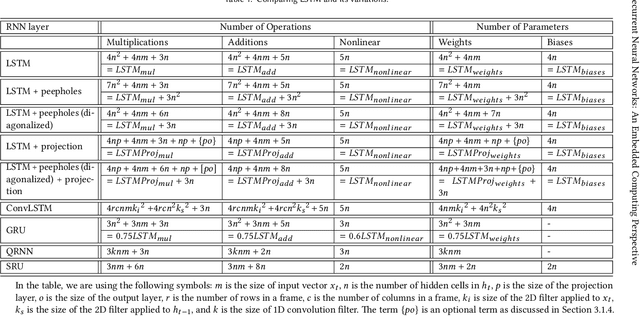

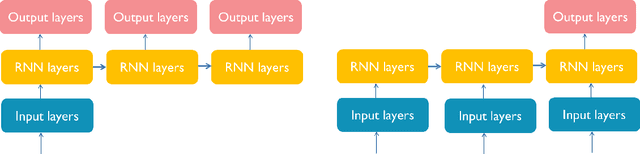

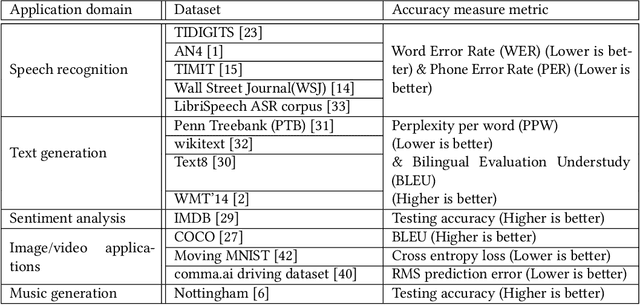

Recurrent Neural Networks (RNNs) are a class of machine learning algorithms used for applications with time-series and sequential data. Recently, strong interest has emerged in executing RNNs on embedded devices. However, the high computational capability and large memory space that RNNs require are difficult to provide on such devices. In this paper, we review the existing implementations of RNN models on embedded platforms and discuss the methods adopted to overcome the limitations of embedded systems. We define the objectives of mapping RNN algorithms onto embedded platforms and the challenges facing their realization. Then, we explain the components of RNN models from an implementation perspective. Furthermore, we discuss the optimizations applied to RNNs so that they run efficiently on embedded platforms. Additionally, we compare the defined objectives with the implementations and highlight some open research questions and aspects currently not addressed for embedded RNNs. The paper concludes that applying algorithmic optimizations to RNN models is vital when designing an embedded solution. In addition, storing the weights in on-chip memory or efficiently overlapping computation with data loading is essential to overcome the high memory access overhead. Finally, the survey concludes that many implementations have targeted high performance, while flexibility has received less attention.
