Really should we pruning after model be totally trained? Pruning based on a small amount of training


Jan 24, 2019

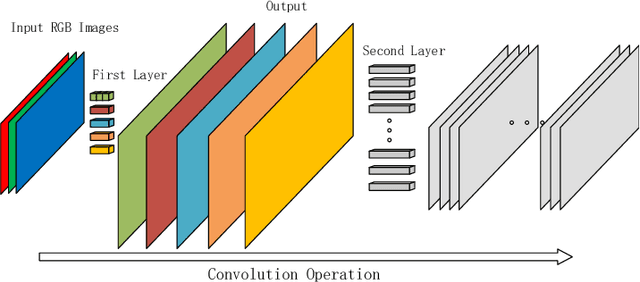

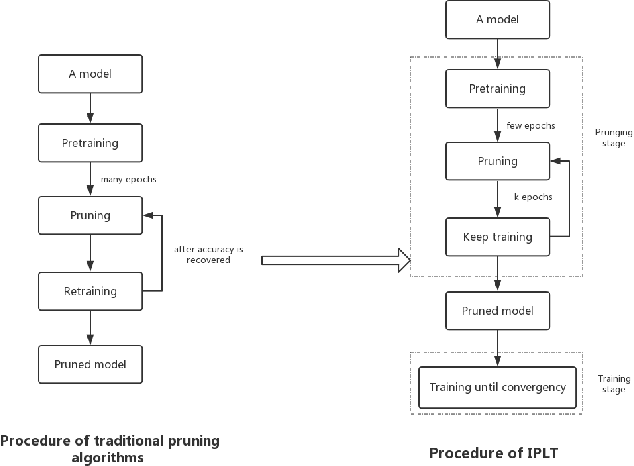

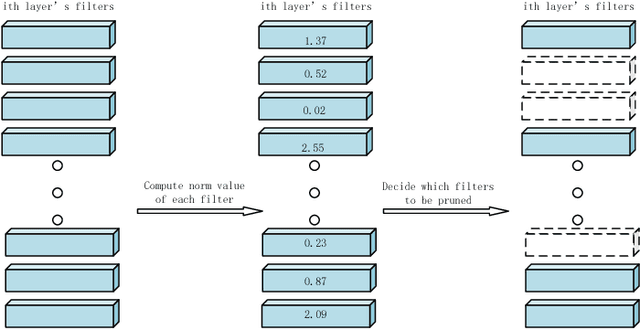

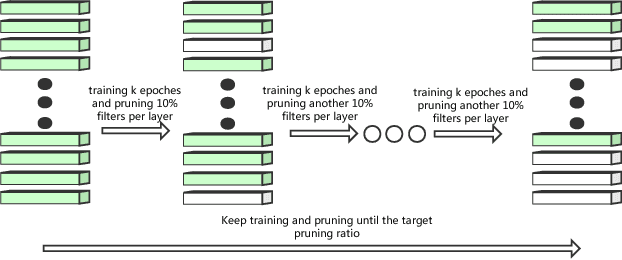

Pre-training plays an important role in the pruning decisions made by pruning algorithms. We find that excessive pre-training is not necessary for pruning algorithms. Based on this observation, we propose a pruning algorithm, Incremental Pruning based on Less Training (IPLT). Under the same simple pruning strategy, IPLT achieves a compression effect competitive with that of traditional pruning algorithms, which rely on a large amount of pre-training. While preserving accuracy, IPLT achieves 8x-9x compression for VGG-19 on CIFAR-10 and needs only a few epochs of pre-training. For VGG-19 on CIFAR-10, we achieve not only 10x test acceleration but also about 10x training acceleration. Current research focuses mainly on compression and acceleration in the application stage of the model, while little work addresses compression and acceleration in the training stage. Our proposed pruning algorithm can compress and accelerate the model in the training stage. Considering the amount of pre-training that a pruning algorithm requires is a novel angle. Our results imply that extensive pre-training may not be necessary for pruning algorithms.
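
The idea of alternating a small amount of training with a pruning step can be illustrated with a toy sketch. The abstract does not specify IPLT's pruning criterion, schedule, or hyperparameters, so everything below is an assumption made for illustration: magnitude-based weight pruning, a linear model trained by gradient descent, and the hypothetical names `train_epochs`, `prune_smallest`, and `incremental_prune`. It is not the authors' implementation.

```python
import numpy as np

def train_epochs(w, mask, X, y, epochs, lr=0.1):
    """Gradient descent on mean squared error; pruned weights stay at zero."""
    for _ in range(epochs):
        grad = 2.0 * X.T @ (X @ (w * mask) - y) / len(y)
        w = (w - lr * grad) * mask
    return w

def prune_smallest(w, mask, fraction):
    """Deactivate `fraction` of the still-active weights with the smallest magnitude."""
    active = np.flatnonzero(mask)
    k = int(len(active) * fraction)
    if k == 0:
        return mask
    drop = active[np.argsort(np.abs(w[active]))[:k]]
    new_mask = mask.copy()
    new_mask[drop] = 0.0
    return new_mask

def incremental_prune(X, y, rounds=3, fraction=0.5, epochs_per_round=5, seed=0):
    """Alternate a few training epochs with a pruning step (assumed schedule)."""
    rng = np.random.default_rng(seed)
    w = rng.normal(scale=0.1, size=X.shape[1])
    mask = np.ones_like(w)
    for _ in range(rounds):
        # Only a short training phase precedes each pruning decision.
        w = train_epochs(w, mask, X, y, epochs_per_round)
        mask = prune_smallest(w, mask, fraction)
        w = w * mask
    # Brief fine-tuning of the surviving weights.
    w = train_epochs(w, mask, X, y, epochs_per_round)
    return w, mask
```

With 16 input weights, three rounds that each remove half of the active weights leave 2 of them, which is an 8x reduction in parameter count for this toy model. The figure is only arithmetic on the sketch and says nothing about the VGG-19 results reported above.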
