Real-time Surface Deformation Recovery from Stereo Videos


Jul 16, 2020

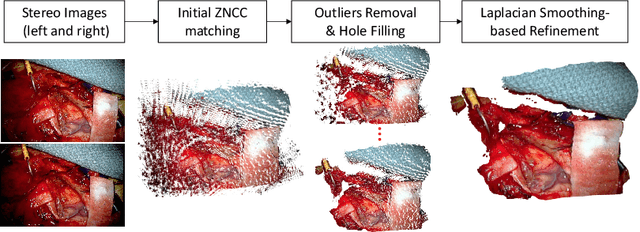

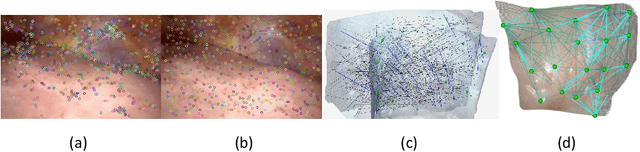

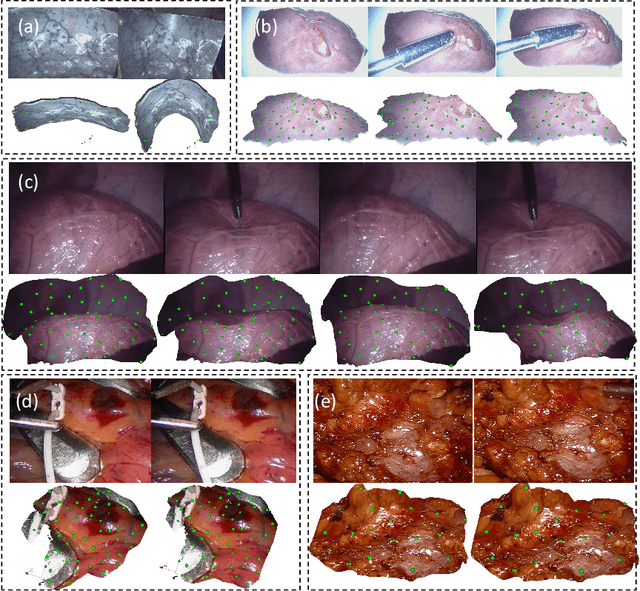

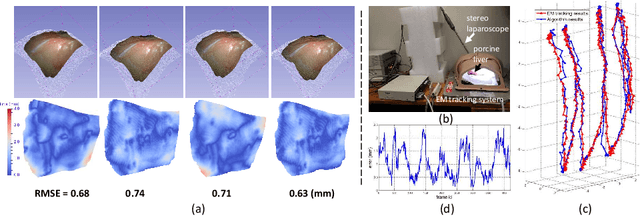

Tissue deformation during surgery may significantly decrease the accuracy of surgical navigation systems. In this paper, we propose an approach to estimate the deformation of the tissue surface from stereo videos in real time, which is capable of handling occlusion, smooth surfaces, and fast deformation. We first use a stereo matching method to extract depth information from stereo video frames and generate the tissue template, and then estimate the deformation of the obtained template by minimizing ICP, ORB feature matching, and as-rigid-as-possible (ARAP) costs. The main novelties are twofold: (1) Because of non-rigid deformation, feature matching outliers are difficult to remove with traditional RANSAC methods; we therefore propose a novel 1-point RANSAC and reweighting method to preselect matching inliers, which handles smooth surfaces and fast deformations. (2) We propose a novel ARAP cost function based on dense connections between the control points to achieve better smoothing performance with a limited number of iterations. The algorithms are designed and implemented for GPU parallel computing. Experiments on ex vivo and in vivo data showed that this approach works at an update rate of 15 Hz with an accuracy of less than 2.5 mm on an NVIDIA Titan X GPU.
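The abstract does not spell out how the 1-point RANSAC preselection works, so the following is only a minimal CPU sketch of one plausible reading, not the authors' GPU implementation. It assumes that a deforming surface is approximately rigid within a small neighborhood, so a single reference match is enough to form a hypothesis: matches near the reference should keep their distance to it between the template and the current frame. The function name `one_point_ransac` and the parameters `radius`, `tol`, and `min_ratio` are hypothetical, and the reweighting step mentioned in the abstract is not modeled.

```python
import numpy as np

def one_point_ransac(src, dst, n_trials=50, radius=30.0, tol=1.0,
                     min_ratio=0.5, seed=0):
    """Preselect inliers among matched 3D points (illustrative sketch).

    src, dst: (N, 3) arrays; src[i] in the template is matched to dst[i]
    in the current frame. Returns a boolean inlier mask of length N.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    rng = np.random.default_rng(seed)
    # Each trial uses exactly one match as the reference ("1-point").
    refs = rng.choice(n, size=min(n_trials, n), replace=False)
    seen = np.zeros(n)    # trials in which a match was near the reference
    agreed = np.zeros(n)  # trials in which it also preserved its distance
    for r in refs:
        d_src = np.linalg.norm(src - src[r], axis=1)
        d_dst = np.linalg.norm(dst - dst[r], axis=1)
        local = d_src < radius  # assumed locally rigid neighborhood
        consistent = local & (np.abs(d_src - d_dst) < tol)
        # A reference with little support is probably an outlier itself.
        if consistent.sum() < min_ratio * local.sum():
            continue
        seen += local
        agreed += consistent
    # Keep matches that agreed with most of the accepted hypotheses.
    return agreed > min_ratio * np.maximum(seen, 1)

# Toy example: a 10 x 10 grid (2 mm spacing) translated by 5 mm,
# with three matches corrupted along z.
xs, ys = np.meshgrid(np.arange(10) * 2.0, np.arange(10) * 2.0)
src = np.stack([xs.ravel(), ys.ravel(), np.zeros(100)], axis=1)
dst = src + np.array([5.0, 0.0, 0.0])
dst[[3, 47, 88]] += np.array([[0, 0, 20.0], [0, 0, -25.0], [0, 0, 30.0]])
mask = one_point_ransac(src, dst, n_trials=100)
```

Voting across several reference points, rather than trusting a single consensus set, is a design choice of this sketch: a distance-only test can accidentally accept an outlier for one particular reference, and the majority vote makes that less likely.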
