Real-Time, Flight-Ready, Non-Cooperative Spacecraft Pose Estimation Using Monocular Imagery

Jan 23, 2021

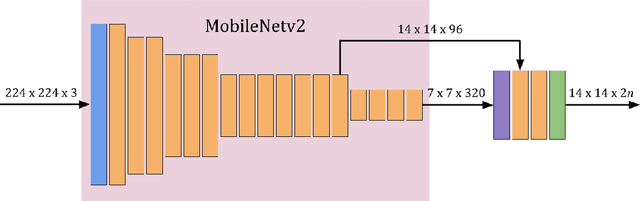

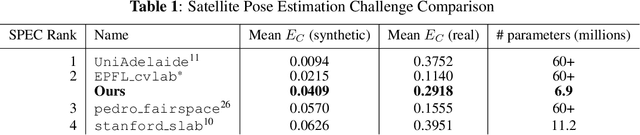

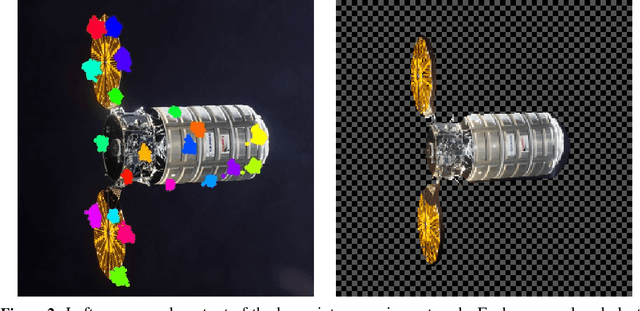

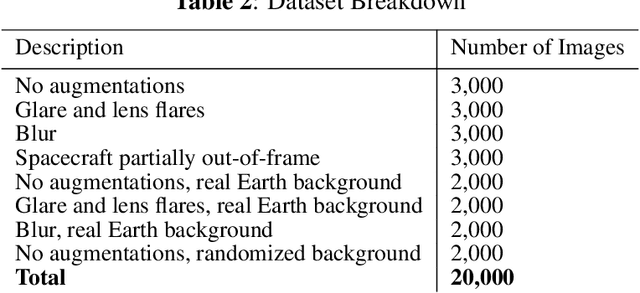

A key requirement for autonomous on-orbit proximity operations is the estimation of a target spacecraft's relative pose (position and orientation). It is desirable to employ monocular cameras for this problem due to their low cost, weight, and power requirements. This work presents a novel convolutional neural network (CNN)-based monocular pose estimation system that achieves state-of-the-art accuracy with low computational demand. In combination with a Blender-based synthetic data generation scheme, the system demonstrates the ability to generalize from purely synthetic training data to real in-space imagery of the Northrop Grumman Enhanced Cygnus spacecraft. Additionally, the system achieves real-time performance on low-power flight-like hardware.
