Random Ensemble Reinforcement Learning for Traffic Signal Control

Mar 10, 2022

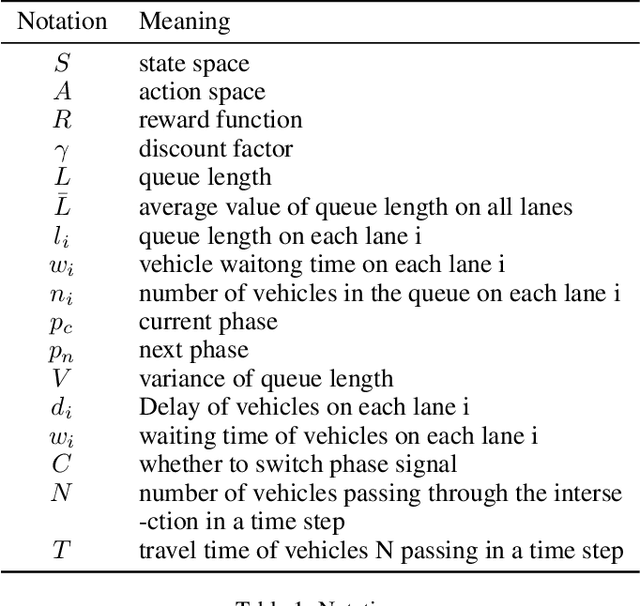

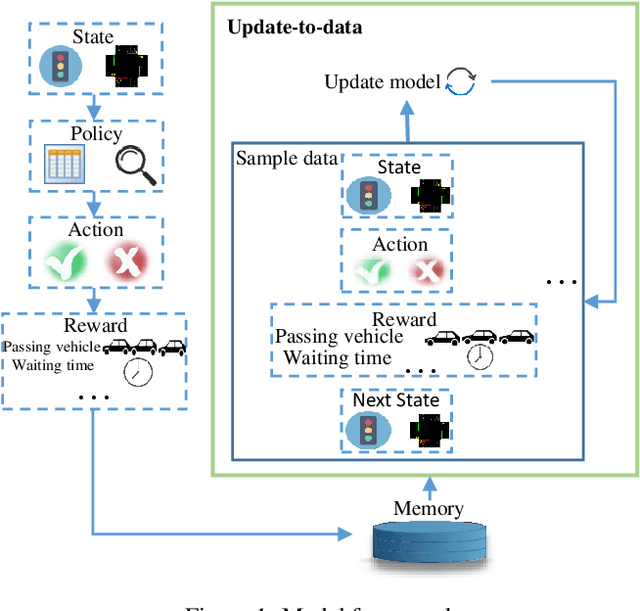

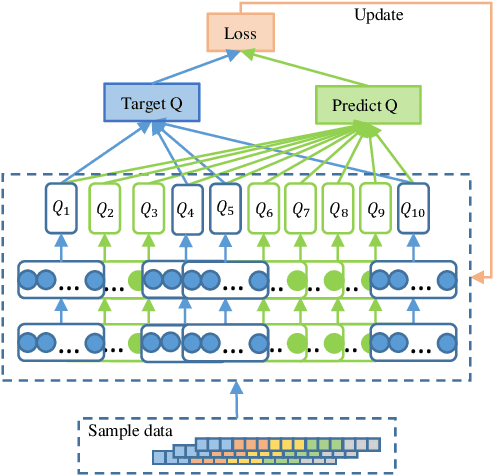

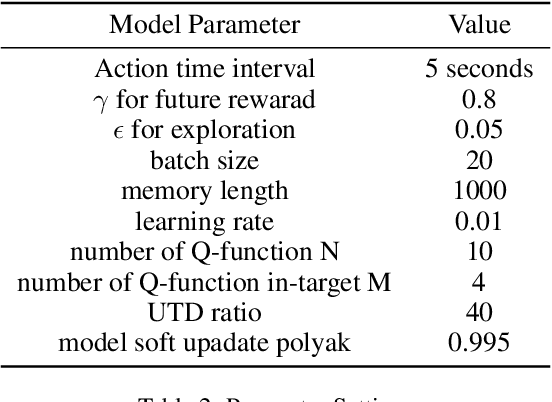

Traffic signal control is an important part of building intelligent transportation systems. An efficient traffic signal control strategy can reduce congestion, improve the efficiency of urban road traffic, and make daily life easier. Existing reinforcement learning approaches to traffic signal control mainly learn with a single neural network. A single network of this kind can become trapped in a local optimum during training. Worse still, each collected sample is used only once, so data utilization is low. We therefore propose the Random Ensemble Double DQN Light (RELight) model. It learns traffic signal control strategies dynamically through reinforcement learning and combines this with random ensemble learning to avoid local optima and approach the optimal strategy. Moreover, we introduce the Update-To-Data (UTD) ratio, which controls how many times each batch of collected data is reused, to address the low data utilization. Finally, we conduct extensive experiments on synthetic and real-world data, which show that the proposed method achieves better traffic signal control than existing state-of-the-art methods.
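The abstract names two mechanisms, a random ensemble of Q-functions and a UTD ratio, without giving the update rule. The following is a minimal tabular sketch of how the two could fit together, not the authors' implementation. Everything specific here is an assumption made for illustration: the function names `redq_style_target` and `update_with_utd`, the Q-tables of shape `(N, n_states, n_actions)`, choosing the next action from the online ensemble mean, taking the minimum over a random subset of target members, and all hyperparameter values.

```python
import numpy as np

def redq_style_target(q_target, q_online, reward, next_state, done, rng,
                      subset_size=2, gamma=0.99):
    """Bootstrapped target from a random subset of an ensemble (illustrative).

    q_target, q_online: arrays of shape (N, n_states, n_actions).
    """
    n_members = q_target.shape[0]
    # Assumption: Double-DQN style selection, the online ensemble mean picks the action.
    next_action = int(np.argmax(q_online[:, next_state, :].mean(axis=0)))
    # Draw a random subset of target members without replacement.
    subset = rng.choice(n_members, size=subset_size, replace=False)
    # Assumption: evaluate the action pessimistically, as the minimum over the subset.
    next_value = q_target[subset, next_state, next_action].min()
    return reward + gamma * (1.0 - float(done)) * next_value

def update_with_utd(q_online, q_target, replay, rng, utd_ratio=4, lr=0.1, tau=0.05):
    """Run `utd_ratio` updates per environment step, reusing stored transitions."""
    for _ in range(utd_ratio):
        # Sample one stored transition (state, action, reward, next_state, done).
        s, a, r, s2, d = replay[rng.integers(len(replay))]
        y = redq_style_target(q_target, q_online, r, s2, d, rng)
        # Every ensemble member moves toward the same target.
        q_online[:, s, a] += lr * (y - q_online[:, s, a])
        # Soft (Polyak) update of the target tables, in place.
        q_target[:] = (1.0 - tau) * q_target + tau * q_online
    return utd_ratio
```

With `utd_ratio=1` each environment step triggers a single update, which corresponds to the low-reuse setting the abstract criticizes; larger values reuse the replay data more often per step. In a deep variant the tables would be replaced by neural networks and the in-place assignment by a gradient step.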
