Rainproof: An Umbrella To Shield Text Generators From Out-Of-Distribution Data

Paper and Code

Dec 18, 2022

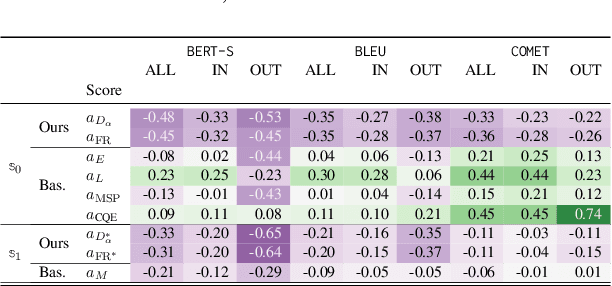

As more and more conversational and translation systems are deployed in production, it is essential to implement and to develop effective control mechanisms guaranteeing their proper functioning and security. An essential component to ensure safe system behavior is out-of-distribution (OOD) detection, which aims at detecting whether an input sample is statistically far from the training distribution. Although OOD detection is a widely covered topic in classification tasks, it has received much less attention in text generation. This paper addresses the problem of OOD detection for machine translation and dialog generation from an operational perspective. Our contributions include: (i) RAINPROOF a Relative informAItioN Projection ODD detection framework; and (ii) a more operational evaluation setting for OOD detection. Surprisingly, we find that OOD detection is not necessarily aligned with task-specific measures. The OOD detector may filter out samples that are well processed by the model and keep samples that are not, leading to weaker performance. Our results show that RAINPROOF breaks this curse and achieve good results in OOD detection while increasing performance.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge