R.I.P.: A Simple Black-box Attack on Continual Test-time Adaptation


Dec 02, 2024

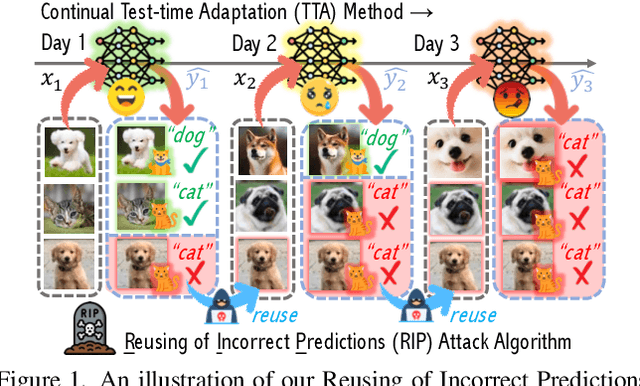

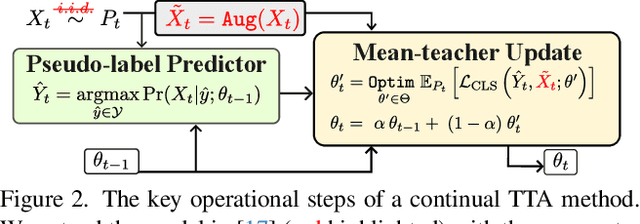

Test-time adaptation (TTA) has emerged as a promising solution to continual domain shift in machine learning: it allows model parameters to change at test time through self-supervised learning on unlabeled test data. Unfortunately, this same flexibility opens the door to unforeseen vulnerabilities that can degrade the model over time. Using a simple theoretical continual TTA model, we identify a risk in the sampling process of test data that can easily degrade the performance of a continual TTA model. We name this risk Reusing of Incorrect Prediction (RIP). TTA attackers can exploit it deliberately, and it can also arise unintentionally from the queries of ordinary TTA users. The risk posed by RIP is also highly realistic, as it requires neither prior knowledge of the model parameters nor modification of the test samples. These minimal requirements make RIP the first black-box TTA attack algorithm, setting it apart from existing white-box attempts. We extensively benchmark the most recent continual TTA approaches against the RIP attack, provide insights into why it succeeds, and lay out potential roadmaps for making future continual TTA systems more resilient.
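The abstract describes RIP only at a high level. The feedback loop it points to can be illustrated with a small sketch. An adaptive model trusts its own pseudo-labels, so a test sample it gets wrong, when submitted again unchanged, keeps pushing the model further in the wrong direction. The code below is a hypothetical toy and is not the authors' theoretical model or attack. It rests on several assumptions: a 1-D nearest-prototype classifier that self-trains on its predictions (`PrototypeTTA`), an attacker who knows the true labels of its own samples but sees only predicted labels, and an immediate-resubmission policy capped by `max_reuse`. All of these names are illustrative.

```python
from typing import Callable, List, Sequence, Tuple

Sample = Tuple[float, int]  # (input x, true label y)


class PrototypeTTA:
    """Hypothetical toy continual-TTA model (not the paper's model): a 1-D
    nearest-prototype classifier that adapts at test time by pulling the
    *predicted* class prototype toward every query it receives."""

    def __init__(self, protos: Sequence[float], lr: float = 0.5) -> None:
        self.protos = list(protos)
        self.lr = lr

    def predict(self, x: float) -> int:
        # Nearest prototype; min() keeps the first (lowest) index on ties.
        return min(range(len(self.protos)), key=lambda k: abs(x - self.protos[k]))

    def predict_and_adapt(self, x: float) -> int:
        y_hat = self.predict(x)
        # Unsupervised self-training step: the pseudo-label is trusted
        # even when it is wrong, which is what reuse exploits.
        self.protos[y_hat] += self.lr * (x - self.protos[y_hat])
        return y_hat


def rip_stream(query: Callable[[float], int], pool: Sequence[Sample],
               max_reuse: int) -> List[Tuple[float, int, int]]:
    """Black-box query loop (illustrative): only the returned label is observed.
    A sample whose prediction is wrong is resubmitted unchanged, up to
    max_reuse extra times. max_reuse=0 yields an ordinary benign stream."""
    log = []
    for x, y in pool:
        y_hat = query(x)
        log.append((x, y, y_hat))
        reuses = 0
        while y_hat != y and reuses < max_reuse:
            y_hat = query(x)  # same, unmodified input; no gradients or weights needed
            log.append((x, y, y_hat))
            reuses += 1
    return log


def accuracy(model: PrototypeTTA, eval_set: Sequence[Sample]) -> float:
    # Evaluate with predict() so that evaluation itself does not adapt the model.
    return sum(model.predict(x) == y for x, y in eval_set) / len(eval_set)


if __name__ == "__main__":
    pool = [(-0.9, 0), (-0.2, 1), (0.9, 1)]      # attacker's labeled samples
    eval_set = [(-1.0, 0), (0.3, 1), (1.0, 1)]   # clean held-out queries
    for reuse in (0, 3):
        model = PrototypeTTA([-1.0, 1.0], lr=0.5)
        log = rip_stream(model.predict_and_adapt, pool, max_reuse=reuse)
        print(f"max_reuse={reuse}: {len(log)} queries, "
              f"accuracy={accuracy(model, eval_set):.2f}")
```

In this toy setting, the benign stream leaves all three held-out points correctly classified. With reuse, the misclassified sample at -0.2 is submitted four times and drags the class-0 prototype from -1.0 to about -0.25, so the held-out class-1 point at 0.3 flips to class 0. Note that the two streams are not budget-matched (3 versus 6 queries). The example only illustrates the feedback mechanism and says nothing about the attack's strength on real TTA methods.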
