R-GCN: The R Could Stand for Random


Mar 04, 2022

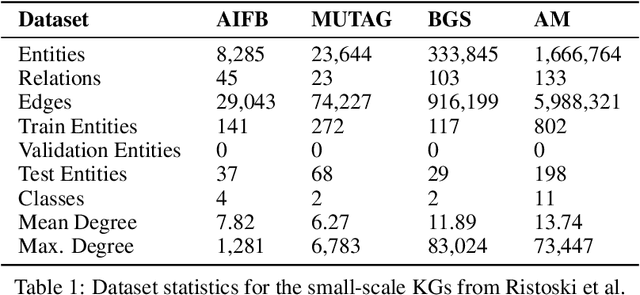

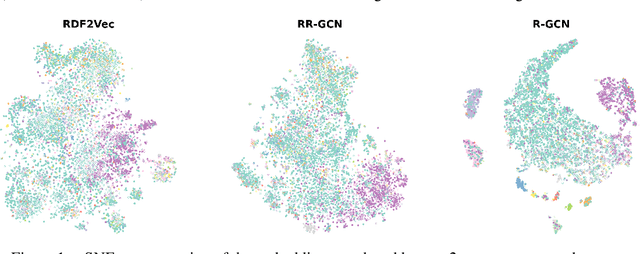

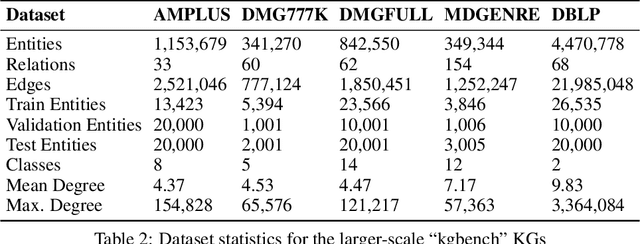

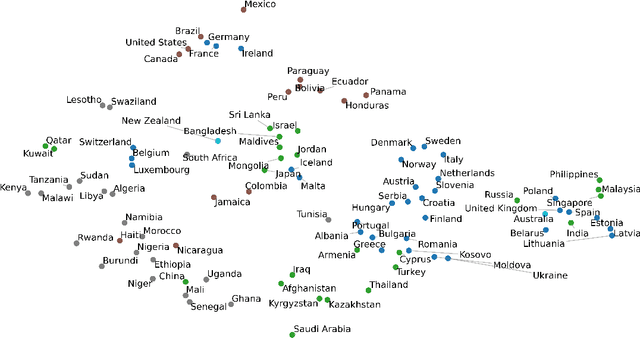

The inception of Relational Graph Convolutional Networks (R-GCNs) marked a milestone in the Semantic Web domain, as R-GCNs allow for end-to-end training of machine learning models that operate on Knowledge Graphs (KGs). R-GCNs generate a representation for a node of interest by repeatedly aggregating parametrised, relation-specific transformations of its neighbours. However, in this paper, we argue that the R-GCN's main contribution lies in this "message passing" paradigm, rather than in the learned parameters. To this end, we introduce the "Random Relational Graph Convolutional Network" (RR-GCN), which constructs embeddings for nodes in the KG by aggregating randomly transformed random information from neighbours, i.e., with no learned parameters. We empirically show that RR-GCNs can compete with fully trained R-GCNs in both node classification and link prediction settings. The implications of these results are two-fold: on the one hand, our technique can be used as a quick baseline that novel KG embedding methods should be able to beat. On the other hand, it demonstrates that further research might reveal more parameter-efficient inductive biases for KGs.
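To make the idea of "aggregating randomly transformed random information from neighbours" concrete, the following is a minimal NumPy sketch, not the RR-GCN implementation from the paper. The function name `random_relational_embeddings`, the Gaussian initialisation, the per-relation mean normalisation, the ReLU nonlinearity, and the choice to pass messages only from subject to object are all assumptions made for illustration.

```python
import numpy as np

def random_relational_embeddings(num_nodes, num_relations, triples,
                                 dim=16, num_layers=2, seed=0):
    """Hypothetical sketch: untrained relational message passing.

    triples is a list of (subject, relation, object) integer ids.
    Nothing here is learned; all weights are drawn once from a seeded RNG.
    """
    rng = np.random.default_rng(seed)

    # Random initial node features (assumption: standard normal).
    h = rng.standard_normal((num_nodes, dim))

    # Count incoming edges per (object, relation) for mean normalisation.
    counts = np.zeros((num_nodes, num_relations))
    for _, r, o in triples:
        counts[o, r] += 1

    for _ in range(num_layers):
        # One random matrix per relation plus one for the self-loop,
        # scaled by 1/sqrt(dim) to keep activations in a reasonable range.
        w_rel = rng.standard_normal((num_relations, dim, dim)) / np.sqrt(dim)
        w_self = rng.standard_normal((dim, dim)) / np.sqrt(dim)

        agg = np.zeros_like(h)
        for s, r, o in triples:
            # Relation-specific random transformation of the neighbour,
            # averaged over all neighbours connected via the same relation.
            agg[o] += (h[s] @ w_rel[r]) / counts[o, r]

        # Combine self-loop and neighbour messages, then apply ReLU.
        h = np.maximum(h @ w_self + agg, 0.0)

    return h

# Toy knowledge graph: 4 nodes, 2 relation types.
toy_triples = [(0, 0, 1), (2, 0, 1), (1, 1, 3), (0, 1, 3)]
embeddings = random_relational_embeddings(4, 2, toy_triples)
```

The resulting `embeddings` array could then be fed to any downstream classifier, which under this sketch would be the only component that is actually trained.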
