Quantum Algorithms and Lower Bounds for Linear Regression with Norm Constraints

Paper and Code

Oct 25, 2021

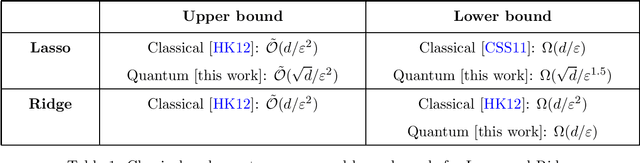

Lasso and Ridge are important minimization problems in machine learning and statistics. They are versions of linear regression with squared loss where the vector $\theta\in\mathbb{R}^d$ of coefficients is constrained in either $\ell_1$-norm (for Lasso) or in $\ell_2$-norm (for Ridge). We study the complexity of quantum algorithms for finding $\varepsilon$-minimizers for these minimization problems. We show that for Lasso we can get a quadratic quantum speedup in terms of $d$ by speeding up the cost-per-iteration of the Frank-Wolfe algorithm, while for Ridge the best quantum algorithms are linear in $d$, as are the best classical algorithms.

* 32 pages LaTeX

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge