Quantification of BERT Diagnosis Generalizability Across Medical Specialties Using Semantic Dataset Distance


Aug 20, 2020

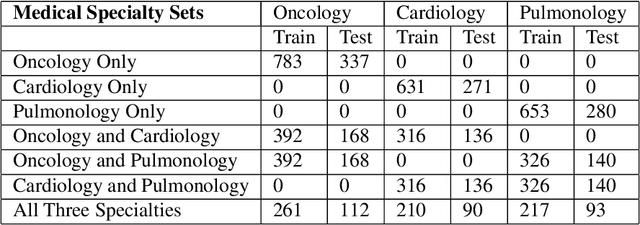

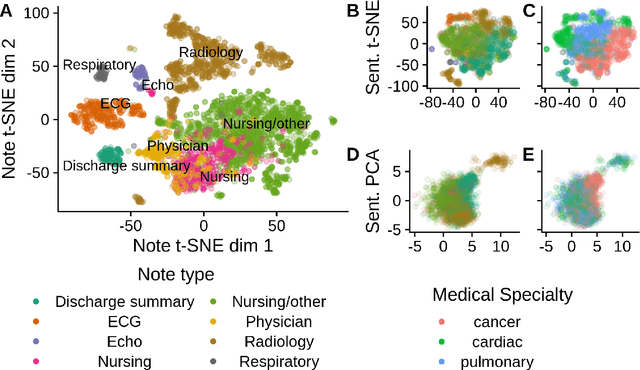

Deep learning models in healthcare may fail to generalize to data from unseen corpora, and no quantitative metric exists to predict how an existing model will perform on new data. Previous studies demonstrated that NLP models of medical notes generalize variably between institutions but did not consider other levels of healthcare organization. We measured the generalizability of SciBERT diagnosis sentiment classifiers between medical specialties using EHR sentences from MIMIC-III. Models trained on one specialty performed better on internal test sets than on mixed or external test sets (mean AUCs 0.92, 0.87, and 0.83, respectively; p = 0.016). Models trained on more specialties achieved better test performance (p < 1e-4). Model performance on new corpora was directly correlated with the similarity between train and test sentence content (p < 1e-4). Future studies should assess additional axes of generalization to ensure deep learning models fulfil their intended purpose across institutions, specialties, and practices.
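
The abstract does not state how the similarity between train and test sentence content is computed. As a rough illustration only, the sketch below scores two corpora by the cosine similarity of their bag-of-words counts. This is a simple stand-in chosen for this example and is not the paper's semantic dataset distance; the function names and the toy sentences are hypothetical and are not drawn from MIMIC-III.

```python
import math
import re
from collections import Counter


def bag_of_words(sentences):
    """Count lowercase alphanumeric tokens across all sentences in a corpus."""
    counts = Counter()
    for sentence in sentences:
        counts.update(re.findall(r"[a-z0-9]+", sentence.lower()))
    return counts


def cosine_similarity(a, b):
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def corpus_similarity(train_sentences, test_sentences):
    """Assumed proxy for train/test content similarity; not the paper's metric."""
    return cosine_similarity(bag_of_words(train_sentences),
                             bag_of_words(test_sentences))


# Hypothetical toy sentences standing in for specialty-specific EHR text.
cardiology = ["no evidence of myocardial infarction",
              "patient has atrial fibrillation"]
cardiology_test = ["atrial fibrillation is present",
                   "rule out myocardial infarction"]
neurology = ["mri shows no acute stroke",
             "seizure activity was observed"]

sim_internal = corpus_similarity(cardiology, cardiology_test)
sim_external = corpus_similarity(cardiology, neurology)
print(f"internal: {sim_internal:.2f}, external: {sim_external:.2f}")
```

On these toy corpora the same-specialty pair scores higher than the cross-specialty pair, which is the kind of signal one would then compare against test AUC. A measure built on sentence embeddings would likely capture semantic overlap better than raw token counts.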
