Quality Map Fusion for Adversarial Learning

Oct 24, 2021

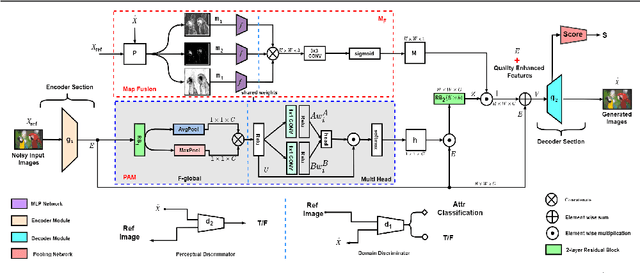

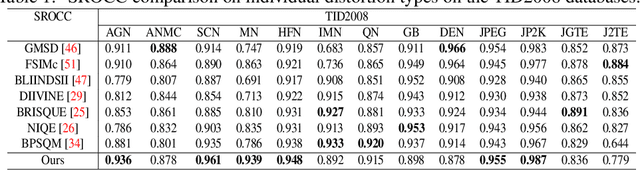

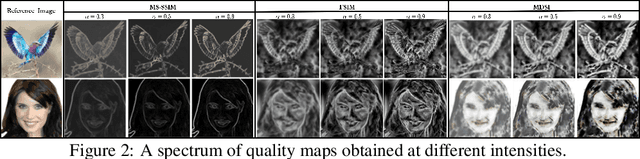

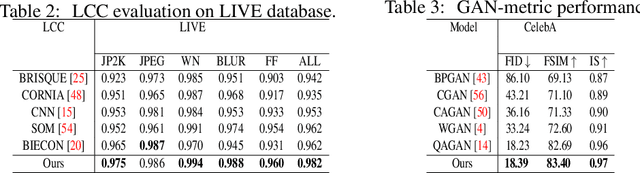

Generative adversarial models that capture salient low-level features, which convey visual information in correlation with the human visual system (HVS), still suffer from perceptible image degradations. The inability to convey such highly informative features can be attributed to mode collapse, convergence failure, and vanishing gradients. In this paper, we improve image quality adversarially by introducing a novel quality map fusion technique that harnesses image features similar to those used by the HVS, together with the perceptual properties of a deep convolutional neural network (DCNN). We extend the widely adopted l2 Wasserstein distance metric to other preferable quality norms derived from Banach spaces that capture richer image properties such as structure, luminance, contrast, and the naturalness of images. We also show that incorporating a perceptual attention mechanism (PAM), which extracts global feature embeddings from the network bottleneck, together with aggregated perceptual maps derived from standard image quality metrics, translates into better image quality. We also demonstrate impressive performance compared with other methods.
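To make the idea of per-pixel quality maps concrete, here is a minimal NumPy sketch. It is not the paper's implementation. It assumes grayscale images scaled to [0, 1], uses a box-window SSIM map as one example of a "standard image quality metric" (SSIM combines luminance, contrast, and structure terms), and fuses maps with a plain weighted average. The function names `box_filter`, `ssim_map`, and `fuse_quality_maps`, the window size, and the weights are all hypothetical choices made for illustration.

```python
import numpy as np

def box_filter(img, k=7):
    """Local mean over a k x k window (k odd, reflect padding), via an integral image."""
    pad = k // 2
    padded = np.pad(img, pad, mode="reflect")
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(0).cumsum(1)
    h, w = img.shape
    total = ii[k:k + h, k:k + w] - ii[:h, k:k + w] - ii[k:k + h, :w] + ii[:h, :w]
    return total / (k * k)

def ssim_map(x, y, k=7, c1=0.01 ** 2, c2=0.03 ** 2):
    """Per-pixel SSIM between images x and y with values in [0, 1]."""
    mu_x, mu_y = box_filter(x, k), box_filter(y, k)
    var_x = box_filter(x * x, k) - mu_x ** 2
    var_y = box_filter(y * y, k) - mu_y ** 2
    cov = box_filter(x * y, k) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den

def fuse_quality_maps(maps, weights):
    """Weighted average of same-shaped quality maps (an assumed fusion rule)."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    return sum(w * m for w, m in zip(weights, maps))

# Example: fuse an SSIM map with a simple absolute-error map.
rng = np.random.default_rng(0)
real = rng.random((32, 32))
fake = np.clip(real + 0.2 * rng.standard_normal((32, 32)), 0.0, 1.0)
fused = fuse_quality_maps([ssim_map(real, fake), 1.0 - np.abs(real - fake)], [0.5, 0.5])
```

In an adversarial setting, a fused map like this could be reduced to a scalar penalty or passed to the network as an extra input; which of these the paper does, and how its attention mechanism consumes the maps, is not stated in the abstract.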
