QU-net++: Image Quality Detection Framework for Segmentation of 3D Medical Image Stacks

Paper and Code

Oct 27, 2021

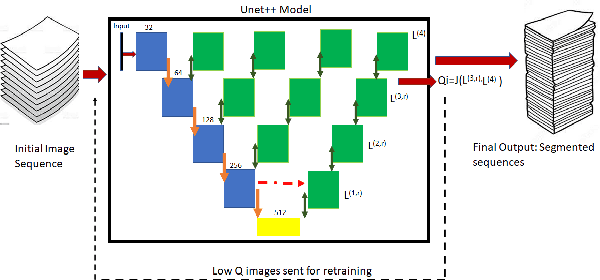

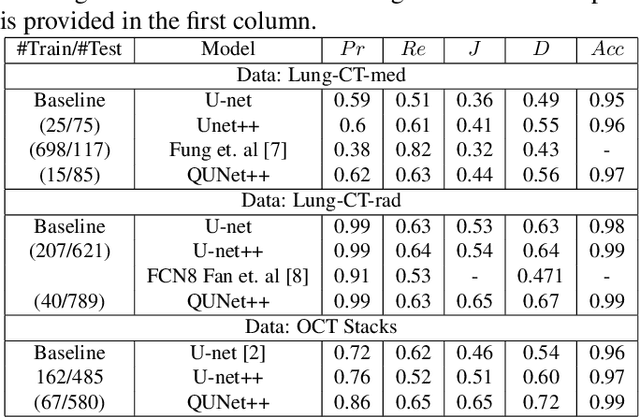

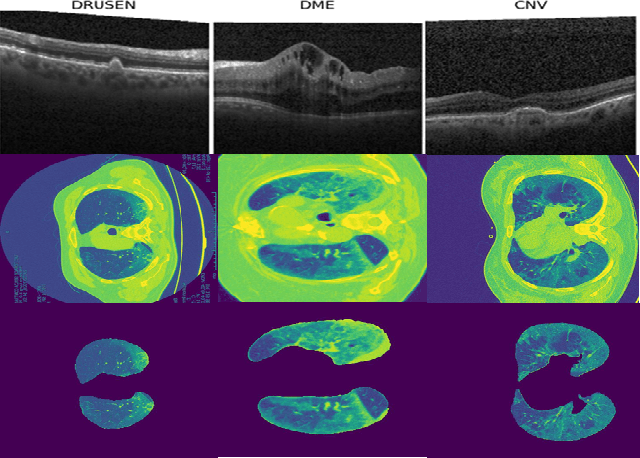

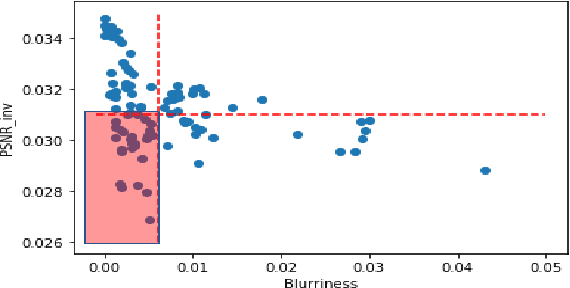

Automated segmentation of pathological regions of interest has been shown to aid prognosis and follow-up treatment. However, accurate pathological segmentation requires high-quality annotated data, which can be both costly and time-consuming to generate. In this work, we propose an automated two-step method that evaluates the quality of medical images from 3D image stacks using a U-net++ model. Images that can aid further training of the U-net++ model are detected based on the disagreement between the segmentations produced by the final two layers. The detected images can then be used to further fine-tune the U-net++ model for semantic segmentation. The proposed QU-net++ model isolates around 10% of images per 3D stack and can scale across imaging modalities to segment cysts in OCT images and ground glass opacity in lung CT images, with Dice scores in the range 0.56-0.72. Thus, the proposed method can be applied for multi-modal binary segmentation of pathology.
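The selection step described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that the disagreement between the two output layers is measured by the Dice overlap of their binary masks, and that the lowest-agreement slices (here a fixed 10% of the stack) are the ones isolated. The function names `dice` and `select_low_agreement` and the `fraction` parameter are hypothetical.

```python
import numpy as np


def dice(a, b, eps=1e-7):
    """Dice overlap between two binary masks (1.0 means identical)."""
    a = np.asarray(a).astype(bool)
    b = np.asarray(b).astype(bool)
    intersection = np.logical_and(a, b).sum()
    # eps keeps the ratio defined (and equal to 1) when both masks are empty.
    return (2.0 * intersection + eps) / (a.sum() + b.sum() + eps)


def select_low_agreement(masks_a, masks_b, fraction=0.10):
    """Return indices of the slices whose two masks agree the least.

    masks_a, masks_b: arrays of shape (n_slices, H, W) holding the binary
    segmentations from two output layers (assumed to be already thresholded).
    fraction: share of the stack to isolate (assumption: fixed at about 10%).
    """
    scores = np.array([dice(a, b) for a, b in zip(masks_a, masks_b)])
    k = max(1, int(round(fraction * len(scores))))
    # Ascending sort: lowest Dice (highest disagreement) comes first.
    order = np.argsort(scores, kind="stable")
    return order[:k], scores


# Toy stack of 10 slices: the two layers agree everywhere except slice 3.
masks_a = np.zeros((10, 4, 4), dtype=np.uint8)
masks_a[:, :2, :] = 1
masks_b = masks_a.copy()
masks_b[3] = 0
masks_b[3, 2:, :] = 1

selected, scores = select_low_agreement(masks_a, masks_b)
print(selected)
```

In this toy stack only slice 3 is flagged, since it is the only slice where the two masks do not overlap. In practice the masks would come from the last two segmentation outputs of a trained U-net++ model, and the flagged slices would be candidates for annotation and fine-tuning.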
