PruneNet: Channel Pruning via Global Importance


May 22, 2020

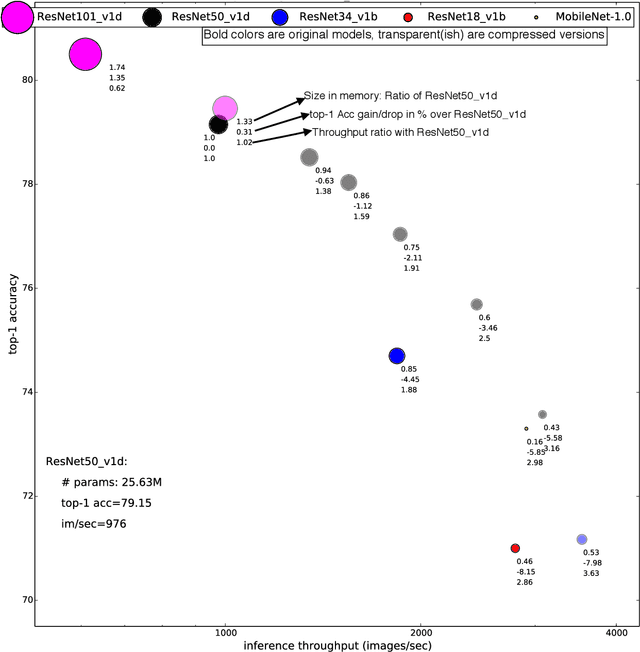

Channel pruning is one of the predominant approaches for accelerating deep neural networks. Most existing pruning methods either train from scratch with a sparsity-inducing term such as group lasso, or prune redundant channels in a pretrained network and then fine-tune it. Both strategies have limitations. Group lasso is computationally expensive, difficult to converge, and often behaves worse because of the regularization bias. Methods that start from a pretrained network either prune channels uniformly across the layers or prune channels based on basic statistics of the network parameters. These approaches either ignore the fact that some CNN layers are more redundant than others or fail to adequately identify the level of redundancy in different layers. In this work, we investigate a simple yet effective method for pruning channels based on a computationally lightweight, data-driven optimization step that discovers the necessary width per layer. Experiments conducted on ILSVRC-$12$ confirm the effectiveness of our approach. With non-uniform pruning across the layers of ResNet-$50$, we match the FLOP reduction of state-of-the-art channel pruning results while achieving $0.98\%$ higher accuracy. Further, we show that our pruned ResNet-$50$ network outperforms ResNet-$34$ and ResNet-$18$ networks, and that our pruned ResNet-$101$ outperforms ResNet-$50$.
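To make the contrast between uniform and non-uniform pruning concrete, the following is a minimal sketch and not the optimization step from the paper. It assumes each channel already has a scalar importance score (the score values, the layer names, and the helpers `uniform_widths` and `global_widths` are all hypothetical), and it compares keeping the same fraction of channels in every layer against ranking all channels in a single global list.

```python
from typing import Dict, List


def uniform_widths(scores: Dict[str, List[float]], keep_ratio: float) -> Dict[str, int]:
    """Keep the same fraction of channels in every layer (at least one)."""
    return {name: max(1, round(len(s) * keep_ratio)) for name, s in scores.items()}


def global_widths(scores: Dict[str, List[float]], keep_ratio: float) -> Dict[str, int]:
    """Rank all channels together and keep the top fraction network-wide."""
    all_scores = sorted((v for s in scores.values() for v in s), reverse=True)
    n_keep = max(1, round(len(all_scores) * keep_ratio))
    threshold = all_scores[n_keep - 1]
    # Each layer keeps the channels at or above the global threshold,
    # but never fewer than one, so no layer is removed entirely.
    return {name: max(1, sum(v >= threshold for v in s)) for name, s in scores.items()}


# Hypothetical per-channel importance scores for three layers (assumed values,
# not taken from the paper). "conv2" is deliberately the most redundant layer.
scores = {
    "conv1": [0.9, 0.8, 0.7, 0.6],
    "conv2": [0.65, 0.05, 0.04, 0.03],
    "conv3": [0.95, 0.85, 0.02, 0.01],
}

uniform = uniform_widths(scores, keep_ratio=0.5)
global_ = global_widths(scores, keep_ratio=0.5)

print("uniform widths:", uniform)
print("global widths: ", global_)
```

Under these assumed scores, both strategies keep half of the channels overall, but the global ranking assigns a different width to each layer according to how many of its channels score highly. This is the kind of per-layer width discovery the abstract refers to, although the paper derives the widths from a data-driven optimization step rather than from a fixed score table.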
