Prudence When Assuming Normality: an advice for machine learning practitioners

Aug 14, 2019

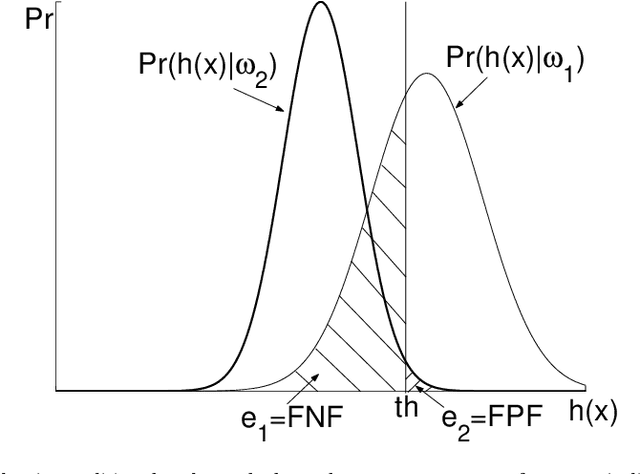

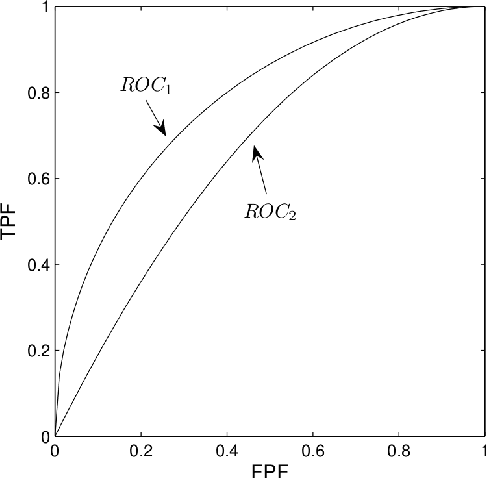

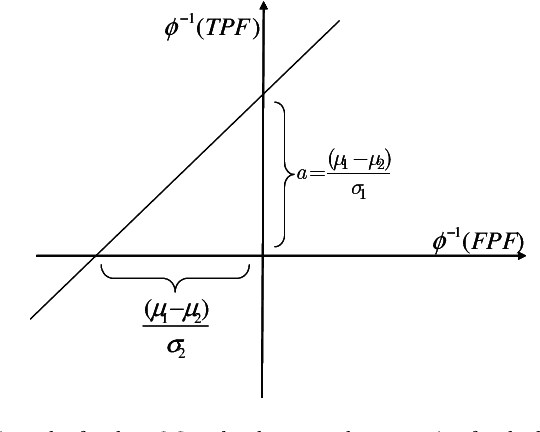

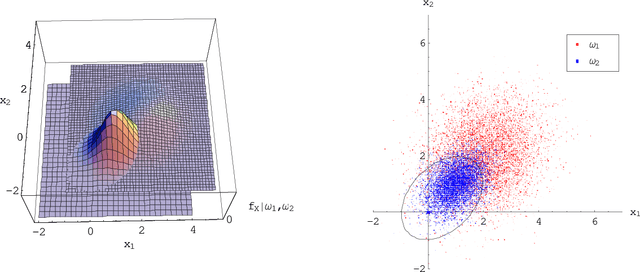

In a binary classification problem, the feature vector (predictor) is the input to a scoring function that produces a decision value (score), which is compared to a chosen threshold to yield the final class prediction (output). Although normality of the score is an important assumption in many applications, it can be severely violated even when the feature vector itself is multinormal. This article proves this result mathematically with a counterexample, as advice to practitioners against blindly assuming normality. On the other hand, the article provides a set of experiments that illustrate some of the expected, well-behaved results for the Area Under the ROC Curve (AUC) under the multinormal assumption on the feature vector. The message of the article is therefore not to avoid the normal assumption for either the input feature vector or the output scoring function; rather, prudence is needed when adopting either.
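The contrast described in the abstract can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the article's own counterexample or experiments: the dimension `p`, the class means, the identity covariance, and the two scoring functions (a linear score `w @ x` and a quadratic score `x @ x`) are all choices made here for illustration. With multinormal features and equal covariances, a linear score is exactly normal in each class and its AUC is Φ(δ/√2), where δ is the Mahalanobis distance between the class means; a quadratic score of the same features is strongly skewed.

```python
import math
import numpy as np

def auc_mann_whitney(neg, pos):
    """Empirical AUC, i.e. the fraction of (neg, pos) pairs with pos > neg,
    computed from rank sums (continuous scores, so ties are not handled)."""
    scores = np.concatenate([neg, pos])
    ranks = np.empty(len(scores))
    ranks[np.argsort(scores)] = np.arange(1, len(scores) + 1)
    n0, n1 = len(neg), len(pos)
    u = ranks[n0:].sum() - n1 * (n1 + 1) / 2
    return u / (n0 * n1)

def skewness(x):
    """Sample skewness; close to 0 for a normal sample."""
    z = (x - x.mean()) / x.std()
    return float(np.mean(z ** 3))

# Assumed setup (illustrative only): two multinormal classes, identity covariance.
rng = np.random.default_rng(0)
p, n = 4, 100_000
mu0, mu1 = np.zeros(p), np.full(p, 0.5)
x0 = rng.standard_normal((n, p)) + mu0
x1 = rng.standard_normal((n, p)) + mu1

# Linear score: a linear map of a multinormal vector is normal.
w = mu1 - mu0                        # equals Sigma^{-1} (mu1 - mu0) when Sigma = I
lin0, lin1 = x0 @ w, x1 @ w
delta = np.linalg.norm(mu1 - mu0)    # Mahalanobis distance when Sigma = I
auc_theory = 0.5 * (1 + math.erf(delta / 2))   # Phi(delta / sqrt(2))
auc_empirical = auc_mann_whitney(lin0, lin1)

# Quadratic score: chi-square distributed in class 0, hence far from normal.
quad0 = np.sum(x0 ** 2, axis=1)

print(f"AUC theory={auc_theory:.4f} empirical={auc_empirical:.4f}")
print(f"skewness linear={skewness(lin0):.3f} quadratic={skewness(quad0):.3f}")
```

In this setup the class-0 quadratic score follows a chi-square distribution with `p` degrees of freedom, whose skewness is √(8/p), so a normal model for that score would be a poor fit even though the features are exactly multinormal.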
