Provably efficient, succinct, and precise explanations


Nov 01, 2021

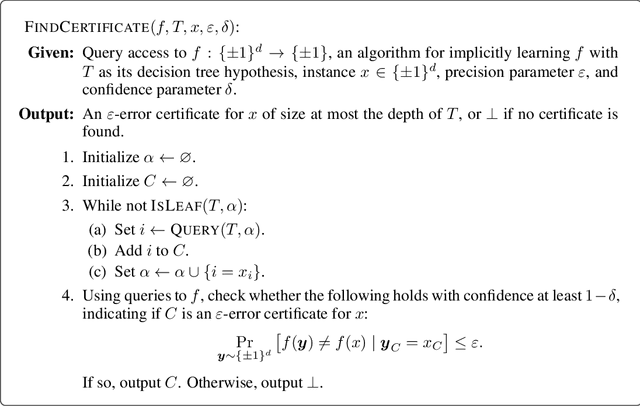

We consider the problem of explaining the predictions of an arbitrary blackbox model $f$: given query access to $f$ and an instance $x$, output a small set of $x$'s features that in conjunction essentially determines $f(x)$. We design an efficient algorithm with provable guarantees on the succinctness and precision of the explanations that it returns. Prior algorithms were either efficient but lacked such guarantees, or achieved such guarantees but were inefficient. We obtain our algorithm via a connection to the problem of *implicitly* learning decision trees. The implicit nature of this learning task allows for efficient algorithms even when the complexity of $f$ necessitates an intractably large surrogate decision tree. We solve the implicit learning problem by bringing together techniques from learning theory, local computation algorithms, and complexity theory. Our approach of "explaining by implicit learning" shares elements of two previously disparate methods for post-hoc explanations, global and local explanations, and we make the case that it enjoys advantages of both.
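To make the notion of a feature set that "essentially determines $f(x)$" concrete, the sketch below estimates the precision of a candidate explanation by sampling. It is not the paper's algorithm. It is a brute-force check written under stated assumptions: features are binary, the unfixed features are drawn uniformly at random, and the names `estimate_precision`, `toy_model`, and `num_samples` are hypothetical and chosen only for illustration.

```python
import random


def estimate_precision(f, x, features, num_samples=2000, seed=0):
    """Estimate Pr_y[f(y) == f(x)], where y agrees with x on `features`
    and every other coordinate is an independent uniform bit.

    Assumption: binary features under the uniform distribution; the
    abstract does not specify the distribution used in the paper.
    """
    rng = random.Random(seed)
    target = f(x)
    fixed = set(features)
    agree = 0
    for _ in range(num_samples):
        # Keep x's values on the explanation, resample everything else.
        y = [x[i] if i in fixed else rng.randint(0, 1) for i in range(len(x))]
        if f(y) == target:
            agree += 1
    return agree / num_samples


def toy_model(z):
    """Hypothetical blackbox used only for this example: (z0 AND z1) OR z2."""
    return int((z[0] and z[1]) or z[2])


x = [1, 1, 0, 1, 0]

# Fixing features 0 and 1 forces the output to 1, so precision is exactly 1.
p_full = estimate_precision(toy_model, x, [0, 1])

# Fixing only feature 0 leaves the output dependent on z1 and z2,
# so the estimated precision is noticeably below 1.
p_partial = estimate_precision(toy_model, x, [0])

print(p_full, p_partial)
```

In this toy setting, `[0, 1]` is a succinct and fully precise explanation for `x`, while `[0]` is more succinct but less precise. The check itself only needs query access to the model, matching the setting described above.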
