Provably Correct Training of Neural Network Controllers Using Reachability Analysis


Feb 22, 2021

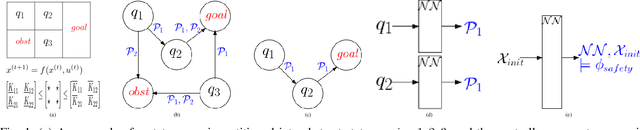

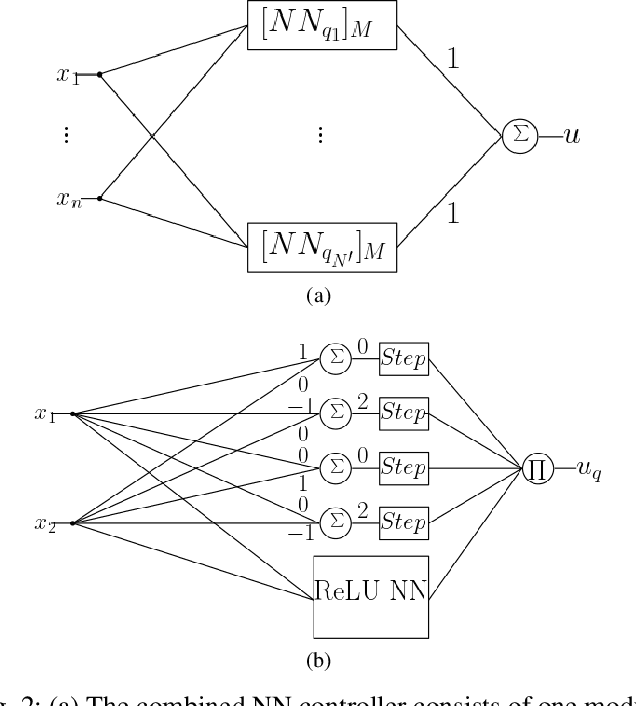

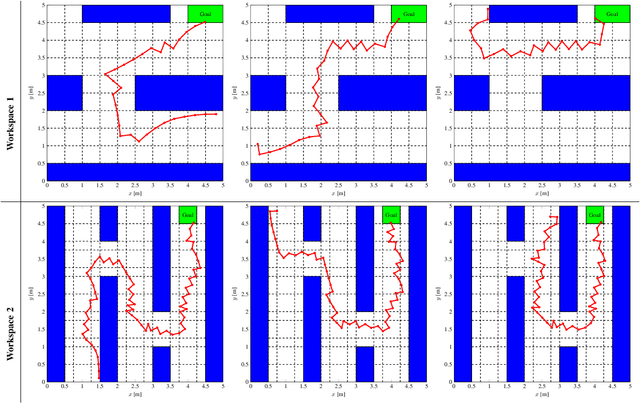

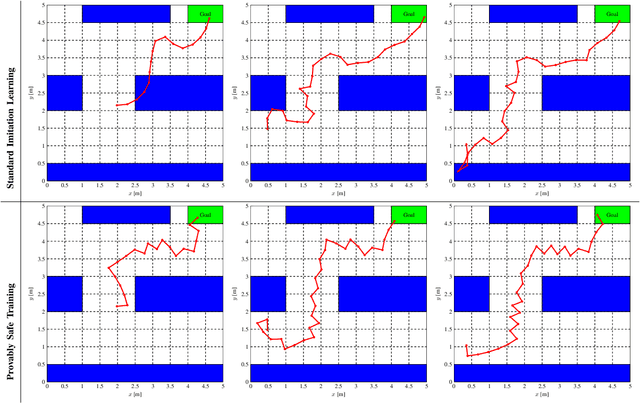

In this paper, we consider the problem of training neural network (NN) controllers for cyber-physical systems (CPS) that are guaranteed to satisfy safety and liveness properties. Our approach combines model-based design methodologies for dynamical systems with data-driven approaches. Given a mathematical model of the dynamical system, we compute a finite-state abstract model that captures the closed-loop behavior under all possible neural network controllers. Using this finite-state abstract model, our framework identifies the subset of NN weights that are guaranteed to satisfy the safety requirements. During training, we augment the learning algorithm with an NN weight projection operator that ensures the resulting NN is provably safe. To account for the liveness properties, the proposed framework uses the finite-state abstract model to identify candidate NN weights that may satisfy the liveness properties, and then uses these candidate weights to bias the NN training toward achieving the liveness specification. The guarantees above cannot be ensured without correctness guarantees on the NN architecture, which controls the NN's expressiveness. Therefore, a cornerstone of the proposed framework is the ability to select provably correct NN architectures automatically.
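The idea of augmenting training with a weight projection operator can be illustrated with a generic projected gradient descent loop. The sketch below is not the paper's algorithm: it assumes, purely for illustration, that the safe weight set is an axis-aligned box (one interval per weight), whereas the abstract states that the actual safe set is derived from the finite-state abstract model. The names `project_onto_box`, `projected_gradient_descent`, `safe_box`, and the toy loss are all hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for the safe weight set: one (low, high) interval per weight.
Box = List[Tuple[float, float]]


def project_onto_box(weights: Sequence[float], box: Box) -> List[float]:
    """Euclidean projection onto an axis-aligned box: clip each coordinate."""
    return [min(max(w, lo), hi) for w, (lo, hi) in zip(weights, box)]


def projected_gradient_descent(
    grad: Callable[[Sequence[float]], Sequence[float]],
    init: Sequence[float],
    box: Box,
    step_size: float = 0.1,
    num_steps: int = 100,
) -> List[float]:
    """Take a gradient step, then project back into the assumed safe set."""
    weights = project_onto_box(init, box)
    for _ in range(num_steps):
        g = grad(weights)
        candidate = [w - step_size * gi for w, gi in zip(weights, g)]
        # The projection keeps every iterate inside the safe set,
        # so the final weights are inside it as well.
        weights = project_onto_box(candidate, box)
    return weights


# Toy loss (assumption): squared distance to a target that lies outside the box.
target = [2.0, -3.0]


def toy_grad(w: Sequence[float]) -> List[float]:
    return [2.0 * (wi - ti) for wi, ti in zip(w, target)]


safe_box: Box = [(-1.0, 1.0), (-1.0, 1.0)]
trained = projected_gradient_descent(toy_grad, [0.0, 0.0], safe_box)
print(trained)  # [1.0, -1.0]
```

In this toy run the unconstrained minimizer lies outside the box, so training settles on the point of the box closest to it. The property of interest is that every iterate, not only the final one, remains in the assumed safe set.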
