Probabilistic Models for Query Approximation with Large Sparse Binary Datasets

Paper and Code

Jan 16, 2013

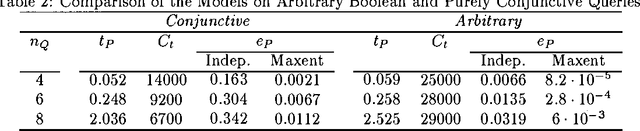

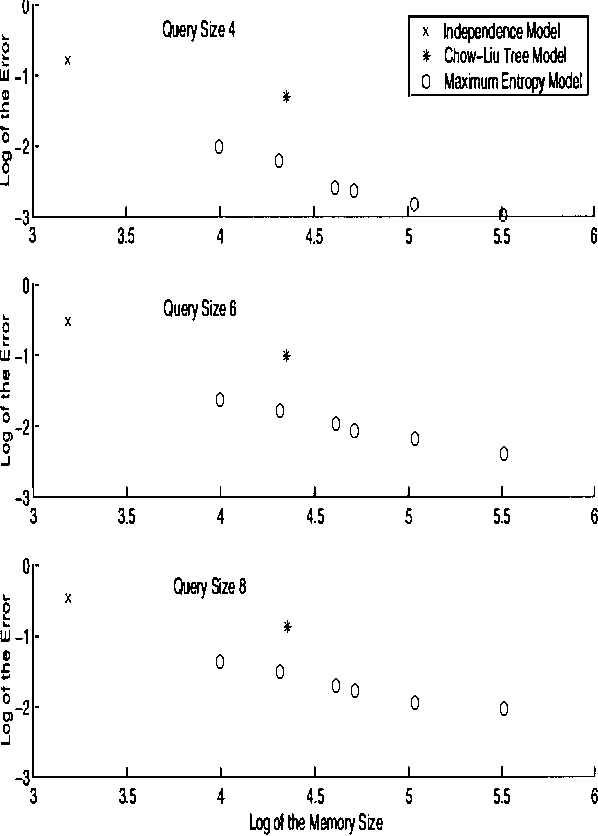

Large sparse sets of binary transaction data with millions of records and thousands of attributes occur in various domains: customers purchasing products, users visiting web pages, and documents containing words are just three typical examples. Real-time query selectivity estimation (the problem of estimating the number of rows in the data satisfying a given predicate) is an important practical problem for such databases. We investigate the application of probabilistic models to this problem. In particular, we study a Markov random field (MRF) approach based on frequent sets and maximum entropy, and compare it to the independence model and the Chow-Liu tree model. We find that the MRF model provides substantially more accurate probability estimates than the other methods but is more expensive from a computational and memory viewpoint. To alleviate the computational requirements we show how one can apply bucket elimination and clique tree approaches to take advantage of structure in the models and in the queries. We provide experimental results on two large real-world transaction datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge