Probabilistic Metamodels for an Efficient Characterization of Complex Driving Scenarios

Oct 07, 2021

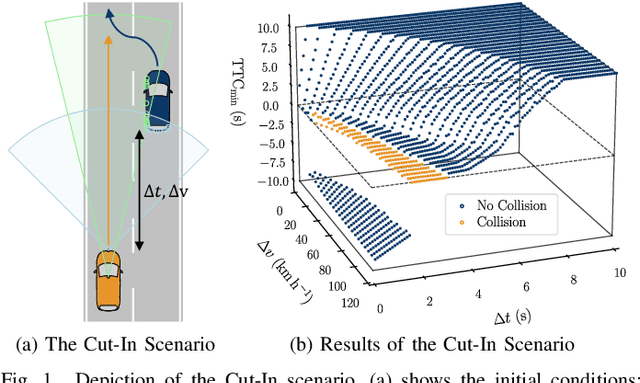

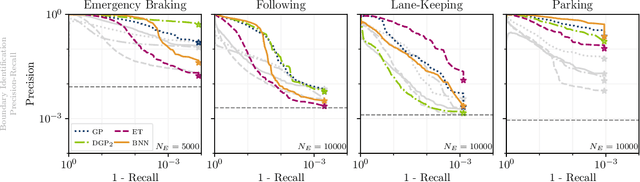

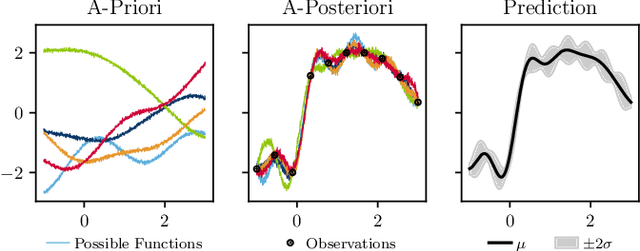

To systematically validate the safe behavior of automated vehicles (AVs), scenario-based testing aims to cluster the infinite situations an AV might encounter into a finite set of functional scenarios. Every functional scenario, however, can still manifest itself in a vast number of variations. Metamodels are therefore often used to perform analyses or to select specific variations for examination. Yet despite the safety-critical nature of AV testing, metamodels are usually treated as just one part of an overall approach, and their predictions are not examined further. In this paper, we analyze the predictive performance of Gaussian processes (GPs), deep Gaussian processes, extra-trees (ET), and Bayesian neural networks (BNNs), considering four scenarios with 5 to 20 inputs. Building on this, we introduce and evaluate an iterative approach to efficiently select test cases. Our results show that, regarding predictive performance, the appropriate selection of test cases is more important than the choice of metamodel. While their great flexibility allows BNNs to benefit from large amounts of data and to model even the most complex scenarios, less flexible models like GPs stand out for their higher reliability. This implies that relevant test cases have to be explored using scalable virtual environments and flexible models, so that more realistic test environments and more trustworthy models can then be used for targeted testing and validation.
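
To make the idea of iteratively selecting test cases with a probabilistic metamodel more concrete, the following is a minimal sketch. It is not the procedure from the paper: it assumes a plain GP with a fixed RBF kernel, a simple "pick the most uncertain candidate" rule, and a hypothetical two-input function `simulate` standing in for a scenario simulation. All names and parameter values (`select_test_cases`, `length_scale`, `noise`, and so on) are illustrative assumptions.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two row-wise point sets."""
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * sq_dist / length_scale ** 2)


def gp_predict(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP.

    `noise` is an assumed jitter/noise variance added to the diagonal.
    """
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_qt = rbf_kernel(x_query, x_train)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_qt @ alpha
    v = np.linalg.solve(chol, k_qt.T)
    var = 1.0 - np.sum(v ** 2, axis=0)  # the RBF prior variance is 1
    return mean, np.sqrt(np.clip(var, 0.0, None))


def simulate(x):
    """Hypothetical stand-in for an expensive scenario simulation."""
    return np.sin(6.0 * x[:, 0]) * np.cos(4.0 * x[:, 1])


def select_test_cases(n_initial=5, n_iterations=20, n_candidates=500, seed=0):
    """Iteratively add the candidate where the metamodel is least certain."""
    rng = np.random.default_rng(seed)
    candidates = rng.uniform(0.0, 1.0, size=(n_candidates, 2))
    chosen = [int(i) for i in rng.choice(n_candidates, n_initial, replace=False)]
    for _ in range(n_iterations):
        x_train = candidates[chosen]
        y_train = simulate(x_train)  # in practice: cache earlier runs
        _, std = gp_predict(x_train, y_train, candidates)
        std[chosen] = -1.0  # never select the same test case twice
        chosen.append(int(np.argmax(std)))
    x_train = candidates[chosen]
    return x_train, simulate(x_train)


x_selected, y_selected = select_test_cases()
print(x_selected.shape, y_selected.shape)
```

Selecting by predictive uncertainty alone spreads test cases across the input space; a criterion that also weighs the predicted outcome (for example, closeness to a criticality threshold) would be a natural variation, and the other metamodels named in the abstract could be substituted for the GP as long as they expose a predictive standard deviation.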
