Privacy-Aware Compression for Federated Data Analysis


Mar 15, 2022

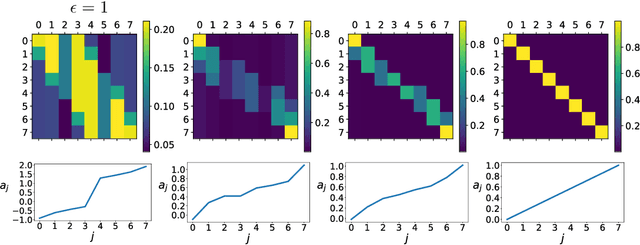

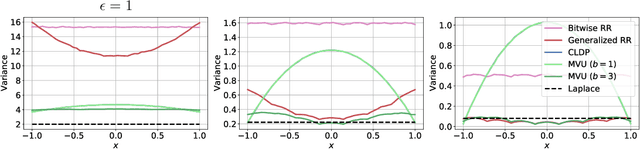

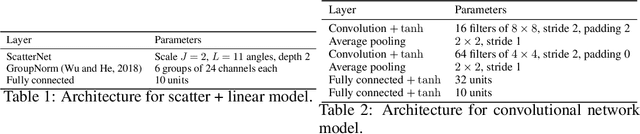

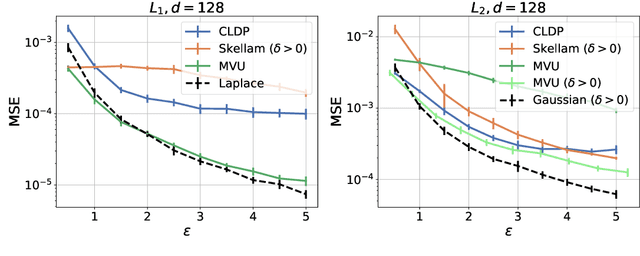

Federated data analytics is a framework for distributed data analysis where a server compiles noisy responses from a group of distributed low-bandwidth user devices to estimate aggregate statistics. Two major challenges in this framework are privacy, since user data is often sensitive, and compression, since the user devices have low network bandwidth. Prior work has addressed these challenges separately by combining standard compression algorithms with known privacy mechanisms. In this work, we take a holistic look at the problem and design a family of privacy-aware compression mechanisms that work for any given communication budget. We first propose a mechanism for transmitting a single real number that has optimal variance under certain conditions. We then show how to extend it to metric differential privacy for location privacy use cases, and to vectors for application to federated learning. Our experiments illustrate that our mechanism can lead to better utility vs. compression trade-offs for the same privacy loss in a number of settings.
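To make the setting concrete, the sketch below illustrates the kind of baseline the abstract attributes to prior work: a standard compression step (stochastic rounding of a value in [0, 1] to one bit) followed by a known privacy mechanism (binary randomized response). It is not the mechanism proposed in the paper. The function names `encode_one_bit` and `decode_mean`, the [0, 1] input range, and the one-bit budget are assumptions chosen for illustration.

```python
import math
import random


def encode_one_bit(x, epsilon, rng):
    """Client side (hypothetical helper): map x in [0, 1] to one private bit."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie in [0, 1]")
    # Compression: stochastic rounding, so the bit equals x in expectation.
    bit = 1 if rng.random() < x else 0
    # Privacy: randomized response keeps the bit with probability
    # e^eps / (1 + e^eps), which gives eps-local differential privacy.
    keep = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    return bit if rng.random() < keep else 1 - bit


def decode_mean(bits, epsilon):
    """Server side (hypothetical helper): unbiased estimate of the mean of x."""
    keep = math.exp(epsilon) / (1.0 + math.exp(epsilon))
    observed = sum(bits) / len(bits)
    # E[observed] = (1 - keep) + (2 * keep - 1) * mean, so invert that map.
    return (observed - (1.0 - keep)) / (2.0 * keep - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)
    epsilon = 1.0
    reports = [encode_one_bit(0.3, epsilon, rng) for _ in range(50_000)]
    print(decode_mean(reports, epsilon))  # close to 0.3, up to sampling noise
```

Each user sends a single bit, and the server's estimate is unbiased, but its variance grows as epsilon shrinks. That utility cost at a fixed communication budget is the trade-off the abstract says a jointly designed mechanism aims to improve.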
