Principled Diverse Counterfactuals in Multilinear Models

Paper and Code

Jan 17, 2022

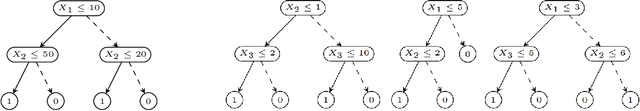

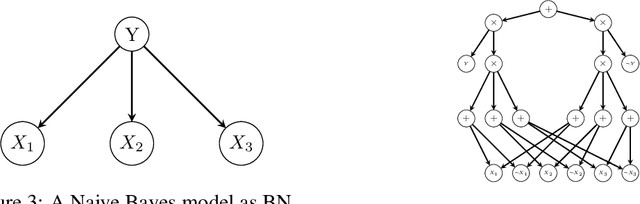

Machine learning (ML) applications have automated numerous real-life tasks, improving both private and public life. However, the black-box nature of many state-of-the-art models poses the challenge of model verification; how can one be sure that the algorithm bases its decisions on the proper criteria, or that it does not discriminate against certain minority groups? In this paper we propose a way to generate diverse counterfactual explanations from multilinear models, a broad class which includes Random Forests, as well as Bayesian Networks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge