Principal Boundary on Riemannian Manifolds

Paper and Code

Oct 21, 2017

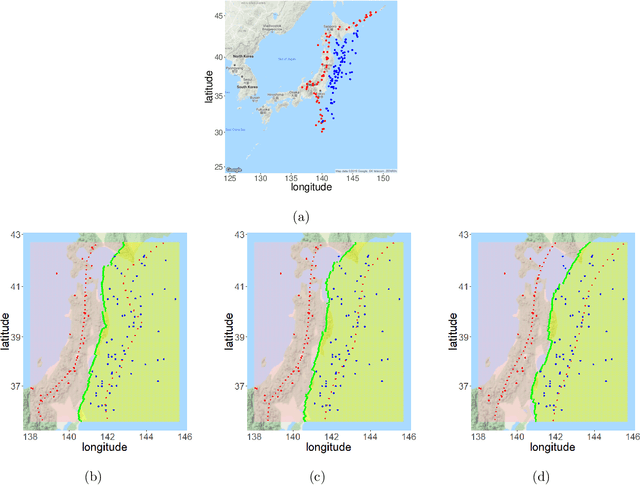

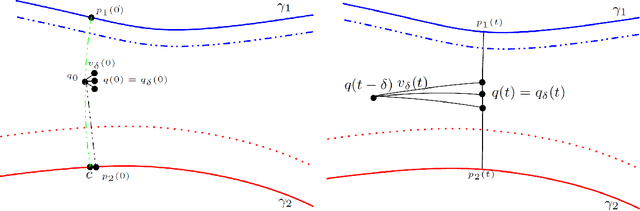

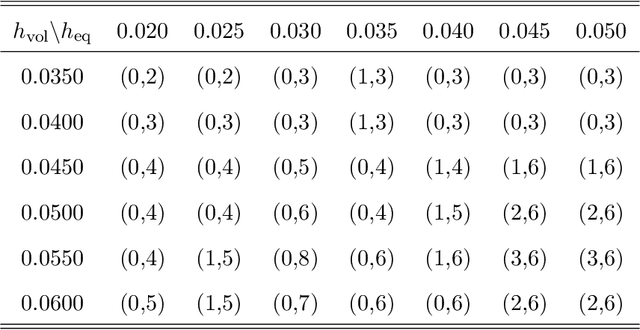

We revisit the classification problem and focus on nonlinear methods for classification on manifolds. For multivariate datasets lying on an embedded nonlinear Riemannian manifold within a higher-dimensional space, our aim is to obtain a classification boundary between labeled classes. Motivated by the principal flow [Panaretos, Pham and Yao, 2014], a curve that moves along a path of maximum variation of the data, we introduce the principal boundary. From the classification perspective, the principal boundary is defined as an optimal curve that moves between the principal flows traced out from the two classes of data and that, at any point on the boundary, maximizes the margin between the two classes. We estimate the boundary with its direction supervised by the two principal flows. We show that the principal boundary yields the usual decision boundary found by the support vector machine, in the sense that the two boundaries coincide locally. By means of examples, we illustrate how to find, use and interpret the principal boundary.
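To make the idea of "a curve between two flows that maximizes the margin at every point" concrete, here is a minimal sketch in flat Euclidean space. It is not the paper's estimator. It assumes each principal flow is already available as an array of sampled points, and it places a boundary point at the midpoint between each point of one flow and its nearest point on the other, with half that distance as the local margin. The function name `midpoint_boundary` and the toy flows are hypothetical. On a curved manifold, Euclidean distances and midpoints would have to be replaced by geodesic ones.

```python
import numpy as np

def midpoint_boundary(flow_a, flow_b):
    """Crude Euclidean stand-in for a boundary between two sampled curves.

    flow_a: array (n, d) sampling the first curve (hypothetical principal flow).
    flow_b: array (m, d) sampling the second curve.
    Returns boundary points of shape (n, d) and local margins of shape (n,).
    """
    a = np.asarray(flow_a, dtype=float)
    b = np.asarray(flow_b, dtype=float)
    # Pairwise Euclidean distances between every point of A and every point of B.
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # For each point of A, the index of its closest point on B.
    nearest = dists.argmin(axis=1)
    partner = b[nearest]
    # The midpoint is equidistant from the paired points; half the gap is the margin.
    boundary = 0.5 * (a + partner)
    margin = 0.5 * dists[np.arange(len(a)), nearest]
    return boundary, margin

# Toy example: two parallel "flows" along y = 0 and y = 2.
t = np.linspace(0.0, 1.0, 5)
flow_a = np.column_stack([t, np.zeros_like(t)])
flow_b = np.column_stack([t, np.full_like(t, 2.0)])
boundary, margin = midpoint_boundary(flow_a, flow_b)
print(boundary[:, 1], margin)  # boundary lies on y = 1 with margin 1 everywhere
```

For two parallel lines, the recovered boundary is the line halfway between them. This is also the maximum-margin separator a linear SVM would find for points sampled along those lines, which loosely mirrors the local agreement the abstract describes.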
