Positional Encoding Augmented GAN for the Assessment of Wind Flow for Pedestrian Comfort in Urban Areas

Paper and Code

Jan 04, 2022

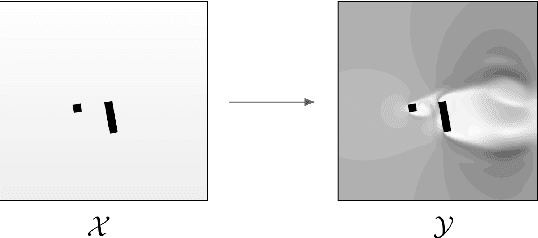

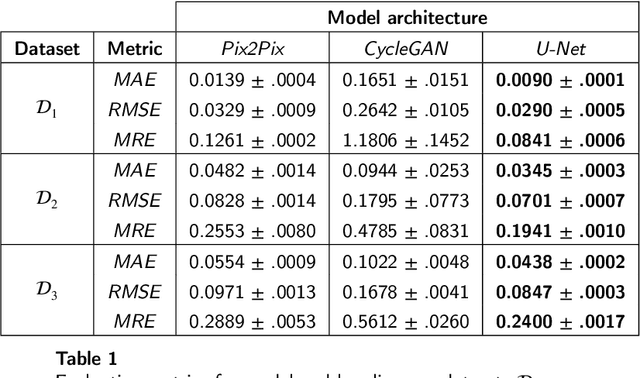

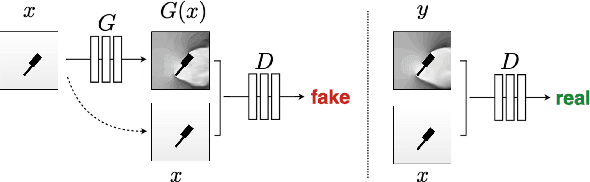

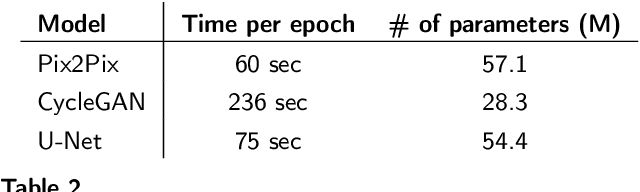

Approximating wind flows using computational fluid dynamics (CFD) methods can be time-consuming. Creating a tool for interactively designing prototypes while observing the wind flow change requires simpler models to simulate faster. Instead of running numerical approximations resulting in detailed calculations, data-driven methods and deep learning might be able to give similar results in a fraction of the time. This work rephrases the problem from computing 3D flow fields using CFD to a 2D image-to-image translation-based problem on the building footprints to predict the flow field at pedestrian height level. We investigate the use of generative adversarial networks (GAN), such as Pix2Pix [1] and CycleGAN [2] representing state-of-the-art for image-to-image translation task in various domains as well as U-Net autoencoder [3]. The models can learn the underlying distribution of a dataset in a data-driven manner, which we argue can help the model learn the underlying Reynolds-averaged Navier-Stokes (RANS) equations from CFD. We experiment on novel simulated datasets on various three-dimensional bluff-shaped buildings with and without height information. Moreover, we present an extensive qualitative and quantitative evaluation of the generated images for a selection of models and compare their performance with the simulations delivered by CFD. We then show that adding positional data to the input can produce more accurate results by proposing a general framework for injecting such information on the different architectures. Furthermore, we show that the models performances improve by applying attention mechanisms and spectral normalization to facilitate stable training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge