PointConvFormer: Revenge of the Point-based Convolution


Aug 04, 2022

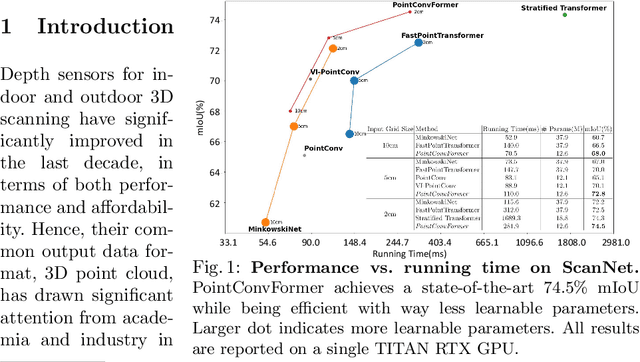

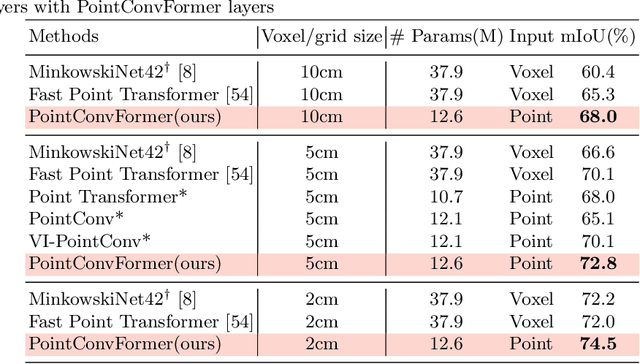

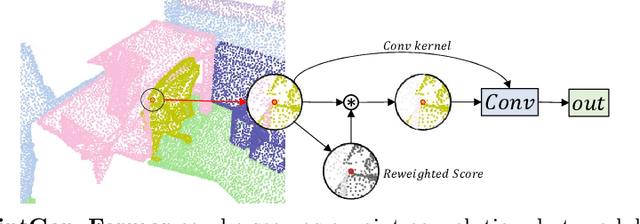

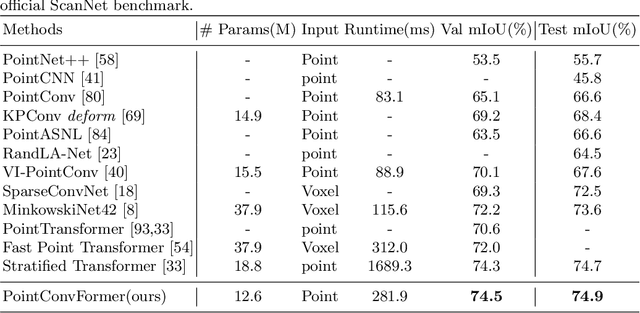

We introduce PointConvFormer, a novel building block for point cloud based deep neural network architectures. Inspired by generalization theory, PointConvFormer combines ideas from point convolution, where filter weights are based only on relative position, and Transformers, which use feature-based attention. In PointConvFormer, the feature difference between points in a neighborhood serves as an indicator to re-weight the convolutional weights. Hence, we preserve the invariances of the point convolution operation, while attention is used to select relevant points in the neighborhood for convolution. To validate the effectiveness of PointConvFormer, we experiment on both semantic segmentation and scene flow estimation tasks on point clouds with multiple datasets including ScanNet, SemanticKITTI, FlyingThings3D and KITTI. Our results show that PointConvFormer substantially outperforms classic convolutions, regular transformers, and voxelized sparse convolution approaches with smaller, more computationally efficient networks. Visualizations show that PointConvFormer performs similarly to convolution on flat surfaces, whereas the neighborhood selection effect is stronger on object boundaries, showing that it gets the best of both worlds.
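The re-weighting idea described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names `knn_indices` and `pointconvformer_layer`, the single linear maps `w_pos` and `w_att` (standing in for learned MLPs), the k-nearest-neighbor neighborhoods, and the softmax normalization of the attention scores are all assumptions made for illustration. The sketch only shows the structure the abstract names: convolution weights that depend on relative position, scaled by attention computed from feature differences.

```python
import numpy as np

def knn_indices(points, k):
    # Pairwise squared distances, shape (N, N); each point is its own nearest neighbor.
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    return np.argsort(d2, axis=1)[:, :k]

def pointconvformer_layer(points, feats, k, w_pos, w_att):
    """Hypothetical single layer.

    points: (N, 3) coordinates, feats: (N, C_in) input features.
    w_pos:  (3, C_in * C_out) linear map standing in for the position MLP (assumption).
    w_att:  (C_in,) scoring vector applied to feature differences (assumption).
    Returns (N, C_out) output features.
    """
    n, c_in = feats.shape
    c_out = w_pos.shape[1] // c_in
    idx = knn_indices(points, k)                  # (N, k) neighbor indices
    rel_pos = points[idx] - points[:, None, :]    # (N, k, 3) relative positions
    feat_diff = feats[idx] - feats[:, None, :]    # (N, k, C_in) feature differences

    # Convolution weights depend only on relative position.
    conv_w = np.tanh(rel_pos @ w_pos).reshape(n, k, c_in, c_out)

    # Attention depends only on feature differences; softmax over the
    # neighborhood is an assumed normalization.
    scores = feat_diff @ w_att                    # (N, k)
    scores = scores - scores.max(axis=1, keepdims=True)
    att = np.exp(scores)
    att = att / att.sum(axis=1, keepdims=True)

    # out[n, o] = sum_j att[n, j] * sum_c conv_w[n, j, c, o] * feats[idx[n, j], c]
    return np.einsum("nj,njco,njc->no", att, conv_w, feats[idx])
```

Because both the convolution weights and the attention scores are built from differences (of positions and of features), translating the whole point cloud leaves the output unchanged, which is the kind of invariance the abstract refers to. When all neighbor features are identical, the attention becomes uniform and the layer reduces to an averaged point convolution.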
