Phonetic Ambiguity: Approaches, Touchstones, Pitfalls and New Approaches


Aug 29, 1996

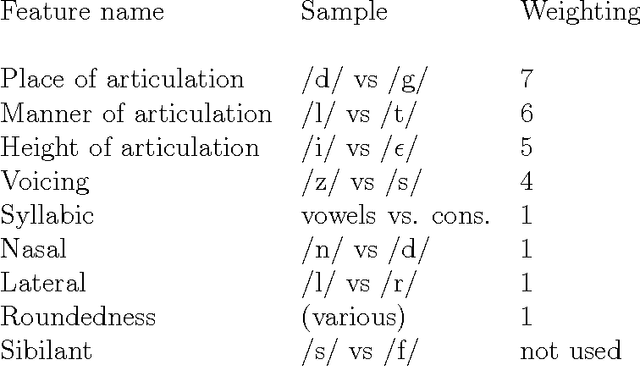

Phonetic ambiguity and confusability are bugbears for any bottom-up or data-driven approach to language processing. The question of when an input is "close enough" to a target word pervades the problem spaces of speech recognition, synthesis, language acquisition, speech compression, and language representation, yet the representations that have been applied are demonstrably inadequate to at least some aspects of the problem. This paper reviews this inadequacy by examining several touchstone models of phonetic ambiguity and relating them to the problems they were designed to solve. A good solution would be, among other things, efficient, accurate, precise, and universally applicable to the representation of words. Ideally it would be usable as a "phonetic distance" metric that directly measures the "distance" between pairs of words or utterances. None of the proposed models provides a complete solution; in general, there is no algorithmic theory of phonetic distance. It is unclear whether this reflects a weakness of our representational technology or a more fundamental difficulty with the problem statement.
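To make the idea of a "phonetic distance" metric concrete, here is a minimal sketch of one common baseline. It is not a model proposed or evaluated in this paper. It computes a weighted edit (Levenshtein) distance over phoneme sequences, with the substitution cost taken as the Jaccard distance between articulatory feature sets. The feature table, phoneme symbols, the `indel` cost, and the function names `substitution_cost` and `phonetic_distance` are all hypothetical choices made for illustration.

```python
from typing import Dict, FrozenSet, Sequence

# Hypothetical toy feature table (an assumption for illustration only):
# each phoneme maps to a small set of articulatory features.
FEATURES: Dict[str, FrozenSet[str]] = {
    "p": frozenset({"consonant", "bilabial", "stop"}),
    "b": frozenset({"consonant", "bilabial", "stop", "voiced"}),
    "t": frozenset({"consonant", "alveolar", "stop"}),
    "d": frozenset({"consonant", "alveolar", "stop", "voiced"}),
    "k": frozenset({"consonant", "velar", "stop"}),
    "g": frozenset({"consonant", "velar", "stop", "voiced"}),
    "ae": frozenset({"vowel", "front", "low"}),
    "eh": frozenset({"vowel", "front", "mid"}),
    "ih": frozenset({"vowel", "front", "high"}),
}


def substitution_cost(a: str, b: str) -> float:
    """Jaccard distance between feature sets: 0.0 if identical, 1.0 if disjoint."""
    if a == b:
        return 0.0
    fa, fb = FEATURES[a], FEATURES[b]
    return 1.0 - len(fa & fb) / len(fa | fb)


def phonetic_distance(x: Sequence[str], y: Sequence[str], indel: float = 1.0) -> float:
    """Weighted Levenshtein distance between two phoneme sequences."""
    n, m = len(x), len(y)
    # dp[i][j] holds the cheapest alignment cost of x[:i] against y[:j].
    dp = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = i * indel  # delete all of x[:i]
    for j in range(1, m + 1):
        dp[0][j] = j * indel  # insert all of y[:j]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dp[i][j] = min(
                dp[i - 1][j] + indel,  # deletion
                dp[i][j - 1] + indel,  # insertion
                dp[i - 1][j - 1] + substitution_cost(x[i - 1], y[j - 1]),  # substitution
            )
    return dp[n][m]
```

For example, `phonetic_distance(["b", "ae", "t"], ["p", "ae", "t"])` returns `0.25`, since /b/ and /p/ differ only in voicing. `phonetic_distance(["b", "ae", "t"], ["b", "ih", "t"])` returns `0.5`. Every number such a baseline produces depends entirely on the hand-chosen features and costs.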
