Performance analysis of greedy algorithms for minimising a Maximum Mean Discrepancy

Paper and Code

Jan 19, 2021

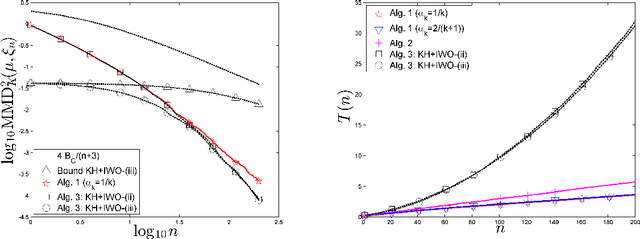

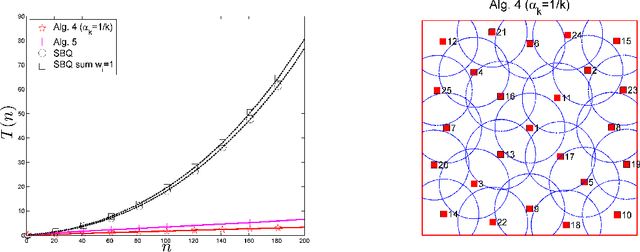

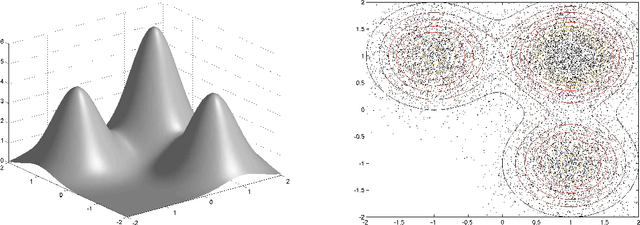

We analyse the performance of several iterative algorithms for the quantisation of a probability measure $\mu$, based on the minimisation of a Maximum Mean Discrepancy (MMD). Our analysis includes kernel herding, greedy MMD minimisation and Sequential Bayesian Quadrature (SBQ). We show that the finite-sample-size approximation error, measured by the MMD, decreases as $1/n$ for SBQ and also for kernel herding and greedy MMD minimisation when using a suitable step-size sequence. The upper bound on the approximation error is slightly better for SBQ, but the other methods are significantly faster, with a computational cost that increases only linearly with the number of points selected. This is illustrated by two numerical examples, with the target measure $\mu$ being uniform (a space-filling design application) and with $\mu$ a Gaussian mixture.
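As a rough illustration of the kind of iteration being analysed, the sketch below runs kernel herding with uniform weights on a finite candidate set. It is a minimal example under stated assumptions, not the paper's implementation: the Gaussian kernel, the bandwidth of 0.5, the 400 random candidates standing in for a uniform $\mu$ on the unit square, and the names `gaussian_kernel`, `kernel_herding` and `mmd_squared` are all choices made here for illustration. Each step selects the candidate maximising the difference between the potential of $\mu$ and the potential of the current empirical measure.

```python
import numpy as np


def gaussian_kernel(a, b, bandwidth=0.5):
    """Gaussian kernel matrix between the rows of a and b (assumed kernel choice)."""
    sq_dists = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2))


def kernel_herding(candidates, n_points, bandwidth=0.5):
    """Select n_points indices from candidates by kernel herding.

    The target measure mu is approximated by the empirical measure of the
    candidate set (an assumption of this sketch).
    """
    K = gaussian_kernel(candidates, candidates, bandwidth)
    potential_mu = K.mean(axis=1)            # P_mu(x) at every candidate x
    running_sum = np.zeros(len(candidates))  # sum_i K(x, x_i) over selected x_i
    selected = []
    for n in range(n_points):
        # Potential of the current design (uniform weights 1/n); zero at the start.
        potential_design = running_sum / n if n > 0 else 0.0
        idx = int(np.argmax(potential_mu - potential_design))
        selected.append(idx)
        running_sum += K[:, idx]             # O(N) update per selected point
    return np.array(selected), K


def mmd_squared(K, selected):
    """Squared MMD between the uniform measure on `selected` and on all candidates."""
    return (K[np.ix_(selected, selected)].mean()
            - 2.0 * K[selected].mean()
            + K.mean())


rng = np.random.default_rng(0)
candidates = rng.uniform(size=(400, 2))      # stand-in for a uniform mu on [0, 1]^2
selected, K = kernel_herding(candidates, n_points=40)
design = candidates[selected]                # the 40-point design
```

Once the kernel matrix is available, each iteration only updates a running sum over the candidates, which is in line with the linear growth in cost mentioned in the abstract. Calling `mmd_squared(K, selected[:k])` for increasing `k` gives a quick empirical check of how the approximation error evolves with the number of points.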
