Perceptual Piercing: Human Visual Cue-based Object Detection in Low Visibility Conditions


Oct 02, 2024

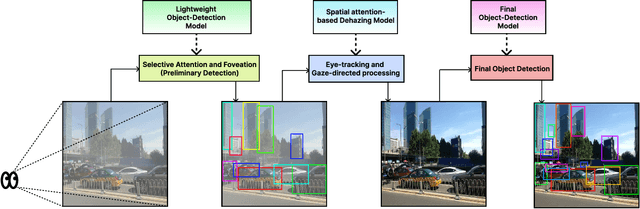

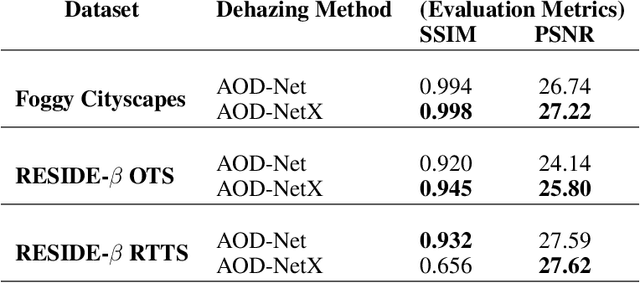

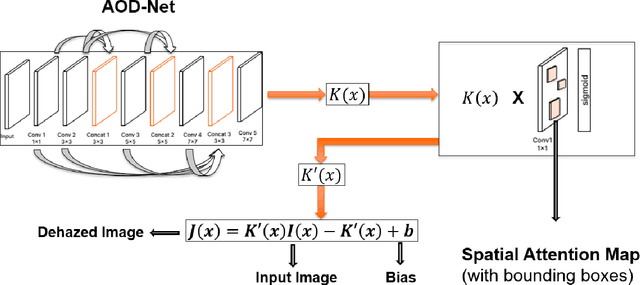

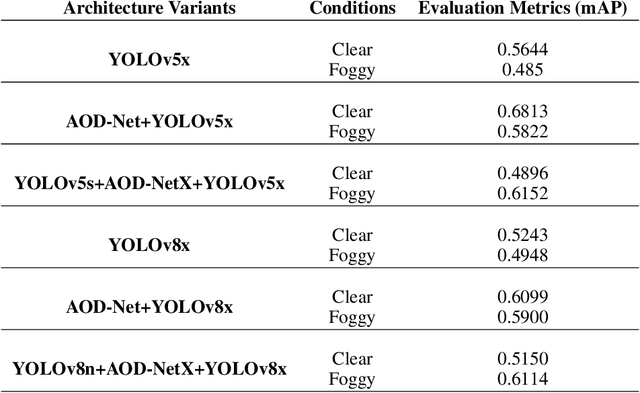

This study proposes a novel deep learning framework, inspired by atmospheric scattering and human visual cortex mechanisms, to improve object detection in low-visibility conditions such as fog, smoke, and haze. These conditions make object recognition significantly harder and affect sectors including autonomous driving, aviation management, and security systems. The objective is to improve the precision and reliability of detection systems under adverse environmental conditions. The research investigates how human-like visual cues, particularly selective attention and environmental adaptability, affect the computational efficiency and accuracy of object detection. The proposed multi-tiered strategy begins with a quick initial detection pass, applies dehazing only to the targeted regions, and concludes with an in-depth detection phase. The approach is validated on the Foggy Cityscapes and RESIDE-beta (OTS and RTTS) datasets and is anticipated to set new performance standards in detection accuracy while substantially improving computational efficiency. The findings offer a viable solution for object detection in poor visibility and contribute to the broader understanding of how human visual principles can be integrated into deep learning algorithms for difficult visual recognition tasks.
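The three-stage flow described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify the detectors or the dehazing network, so the sketch assumes the standard atmospheric scattering model I(x) = J(x)t(x) + A(1 - t(x)) with a single constant transmission t and airlight A, and treats both detectors as caller-supplied functions. The names `dehaze_regions`, `detect_in_haze`, `quick_detector`, and `full_detector` are hypothetical.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (row0, col0, row1, col1), end-exclusive
Image = List[List[float]]        # grayscale intensities in [0, 1]


def dehaze_regions(image: Image, boxes: List[Box], airlight: float,
                   transmission: float, t_min: float = 0.1) -> Image:
    """Invert I = J*t + A*(1 - t) inside the given boxes only.

    Assumption: one constant transmission value for the whole image.
    Pixels outside every box are copied unchanged.
    """
    t = max(transmission, t_min)  # avoid amplifying noise when t is tiny
    out = [row[:] for row in image]
    for r0, c0, r1, c1 in boxes:
        for r in range(r0, r1):
            for c in range(c0, c1):
                # Always read from the original image, so overlapping
                # boxes are not dehazed twice.
                restored = (image[r][c] - airlight) / t + airlight
                out[r][c] = min(1.0, max(0.0, restored))
    return out


def detect_in_haze(image: Image,
                   quick_detector: Callable[[Image], List[Box]],
                   full_detector: Callable[[Image, List[Box]], list],
                   airlight: float, transmission: float) -> list:
    """Quick detection, then region-specific dehazing, then detailed detection."""
    candidates = quick_detector(image)            # stage 1: cheap proposals
    restored = dehaze_regions(image, candidates,  # stage 2: dehaze proposals only
                              airlight, transmission)
    return full_detector(restored, candidates)    # stage 3: in-depth pass
```

Restricting the inversion to candidate regions is what keeps the dehazing cost proportional to the area of the proposals rather than to the full frame, which is the efficiency argument the abstract makes. In practice the transmission would vary per pixel and be estimated from the image; the constant used here is only for illustration.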
