Patch-based Texture Synthesis for Image Inpainting

Paper and Code

May 05, 2016

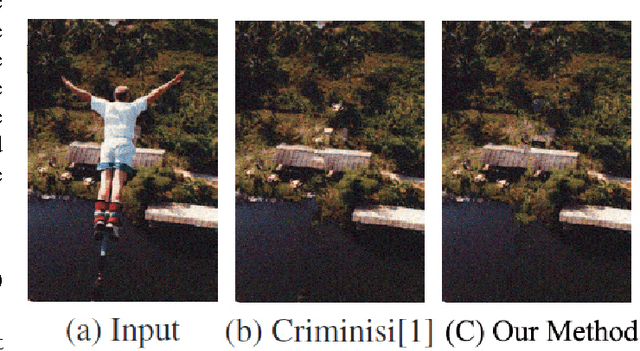

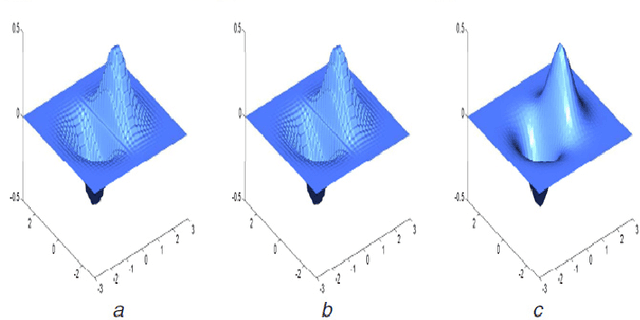

Image inpainting is an important task in image processing and vision. In this paper, we develop a general method for patch-based image inpainting that synthesizes new textures from existing ones. We introduce a novel framework that finds several optimal candidate patches and generates a new texture patch from them. We formulate this as an optimization problem that identifies the potential patches for synthesis in a coarse-to-fine manner. A texture descriptor serves as the cue when searching the known region for matching patches. To keep the structure faithful to the original image, we formally define a geometric constraint metric and apply it directly in the patch synthesis procedure. We conducted extensive experiments on a wide range of test images covering various scenarios and contents, arbitrarily specifying the target regions for inference and then using existing evaluation metrics to verify texture coherency and structural consistency. Our results demonstrate high accuracy and desirable output, which could potentially be used in numerous applications, including object removal, background subtraction, and image retrieval.
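To make the candidate-search idea concrete, here is a minimal sketch of patch-based filling on a grayscale image stored as nested lists. It is not the paper's method: a plain sum of squared differences stands in for the texture descriptor, the geometric constraint and the coarse-to-fine optimization are omitted, and the function names (`patch_ssd`, `find_candidates`, `synthesize_patch`), the mask convention (`True` means missing), and the averaging of `k` candidates are all assumptions made for illustration.

```python
def patch_ssd(image, mask, ty, tx, sy, sx, size):
    """Sum of squared differences over the known pixels of the target patch."""
    total = 0.0
    for dy in range(size):
        for dx in range(size):
            if not mask[ty + dy][tx + dx]:  # compare only where the target is known
                diff = image[ty + dy][tx + dx] - image[sy + dy][sx + dx]
                total += diff * diff
    return total


def find_candidates(image, mask, ty, tx, size, k=3):
    """Return the top-left corners of the k best fully known source patches."""
    height, width = len(image), len(image[0])
    scored = []
    for sy in range(height - size + 1):
        for sx in range(width - size + 1):
            # A source patch must lie entirely inside the known region.
            if any(mask[sy + dy][sx + dx]
                   for dy in range(size) for dx in range(size)):
                continue
            score = patch_ssd(image, mask, ty, tx, sy, sx, size)
            scored.append((score, sy, sx))
    scored.sort()  # lowest SSD first; ties broken by position
    return [(sy, sx) for _, sy, sx in scored[:k]]


def synthesize_patch(image, mask, ty, tx, size, k=3):
    """Fill the missing pixels of the target patch with the mean of k candidates."""
    candidates = find_candidates(image, mask, ty, tx, size, k)
    result = [row[:] for row in image]  # leave the input image untouched
    if not candidates:
        return result
    for dy in range(size):
        for dx in range(size):
            if mask[ty + dy][tx + dx]:
                values = [image[sy + dy][sx + dx] for sy, sx in candidates]
                result[ty + dy][tx + dx] = sum(values) / len(values)
    return result
```

Calling `synthesize_patch(image, mask, ty, tx, size)` returns a copy of the image with the masked pixels of that one target patch filled in. A full inpainting loop would also need a fill order over the target region and some way to blend overlapping patches, neither of which this sketch attempts.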
