Pareto-Optimal Bit Allocation for Collaborative Intelligence

Paper and Code

Sep 25, 2020

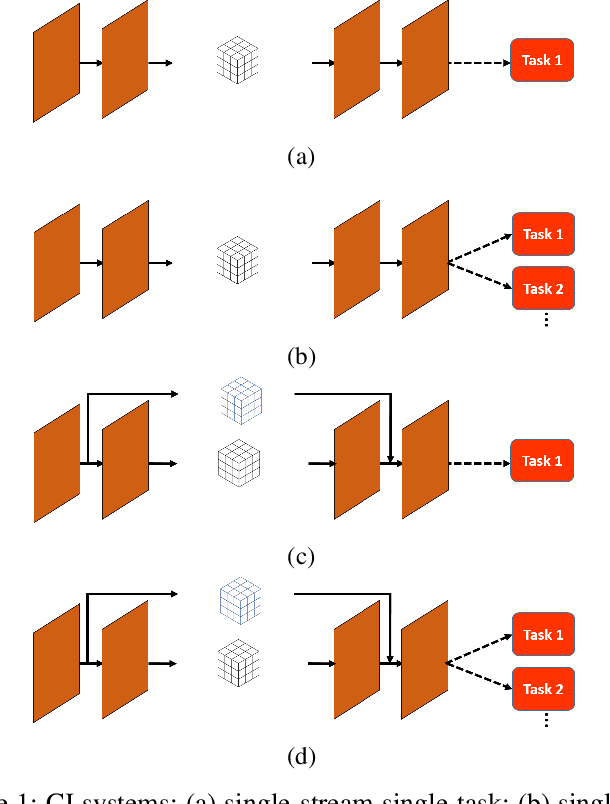

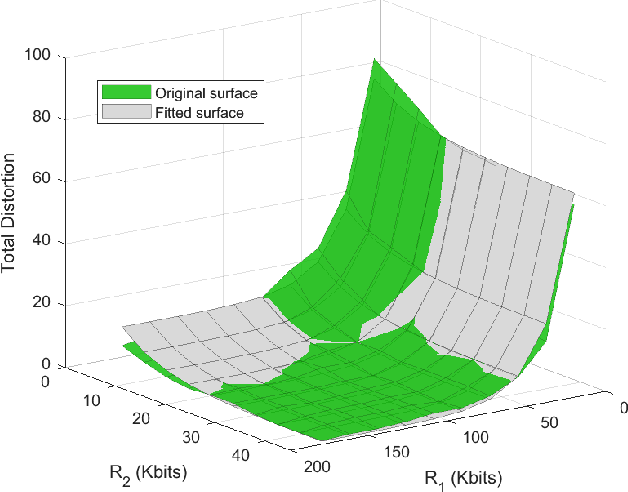

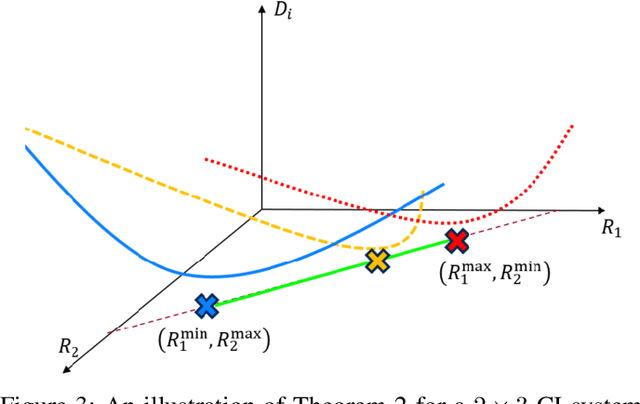

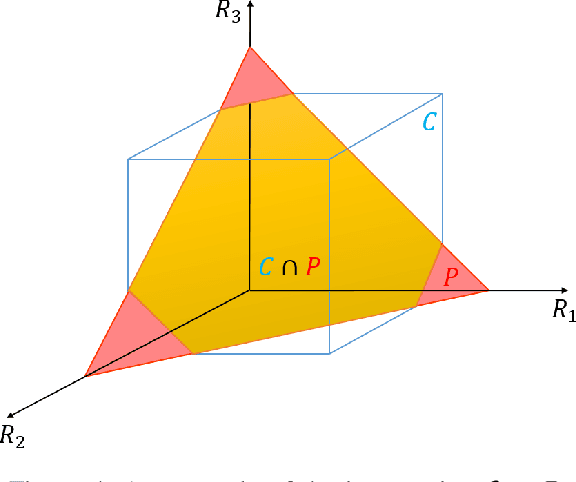

In recent studies, collaborative intelligence (CI) has emerged as a promising framework for deploying Artificial Intelligence (AI)-based services on mobile/edge devices. In CI, the AI model (a deep neural network) is split between the edge and the cloud, and intermediate features are sent from the edge sub-model to the cloud sub-model. In this paper, we study bit allocation for feature coding in multi-stream CI systems. We model task distortion as a function of rate using convex surfaces similar to those found in distortion-rate theory. Using such models, we provide closed-form bit allocation solutions for single-task systems and scalarized multi-task systems. Moreover, we provide an analytical characterization of the full Pareto set for 2-stream k-task systems, and bounds on the Pareto set for 3-stream 2-task systems. The analytical results are examined on a variety of DNN models from the literature to demonstrate their wide applicability.
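To give a feel for what a closed-form bit allocation looks like, the following is a minimal sketch of the classical textbook allocation for a single task. It is not taken from the paper: it assumes a hypothetical distortion model D = sum_i w_i * 2^(-2 r_i) with positive per-stream weights w_i, a total rate budget, and non-negative rates. The function name `allocate_bits` and its parameters are illustrative only; the paper's convex distortion-rate surfaces may take a different form.

```python
import math


def allocate_bits(weights, total_rate):
    """Minimize sum_i w_i * 2**(-2 * r_i) subject to sum_i r_i = total_rate, r_i >= 0.

    Assumed model (not from the paper): exponential distortion decay per stream.
    Weights must be strictly positive and total_rate must be non-negative.
    """
    active = list(range(len(weights)))
    rates = [0.0] * len(weights)
    while active:
        # Unconstrained closed form on the active set:
        # r_i = R/N + 0.5 * log2(w_i / geometric_mean(w)).
        log_gm = sum(math.log2(weights[i]) for i in active) / len(active)
        share = total_rate / len(active)
        trial = {i: share + 0.5 * (math.log2(weights[i]) - log_gm) for i in active}
        negative = {i for i in active if trial[i] < 0}
        if not negative:
            for i in active:
                rates[i] = trial[i]
            break
        # Streams with a negative trial rate receive zero bits; re-solve on the rest.
        active = [i for i in active if i not in negative]
    return rates


if __name__ == "__main__":
    print(allocate_bits([16.0, 1.0], 4.0))
    print(allocate_bits([256.0, 1.0], 1.0))
```

Under this assumed model, streams whose distortion is more sensitive to rate (larger weight) receive more bits, and streams that would receive a negative rate are dropped before the budget is redistributed among the rest.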
