OpenGAN: Open Set Generative Adversarial Networks

Mar 18, 2020

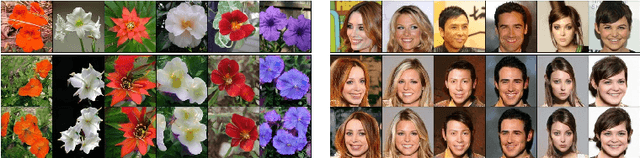

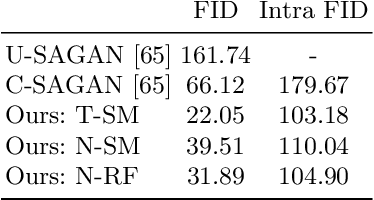

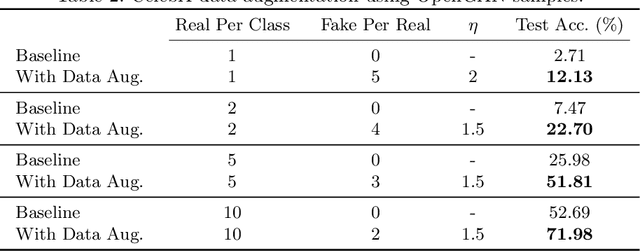

Many existing conditional Generative Adversarial Networks (cGANs) are limited to conditioning on pre-defined and fixed class-level semantic labels or attributes. We propose an open set GAN architecture (OpenGAN) that is conditioned per-input sample with a feature embedding drawn from a metric space. Using a state-of-the-art metric learning model that encodes both class-level and fine-grained semantic information, we are able to generate samples that are semantically similar to a given source image. The semantic information extracted by the metric learning model transfers to out-of-distribution novel classes, allowing the generative model to produce samples that are outside of the training distribution. We show that our proposed method is able to generate 256$\times$256 resolution images from novel classes that are of similar visual quality to those from the training classes. In lieu of a source image, we demonstrate that random sampling of the metric space also results in high-quality samples. We show that interpolation in the feature space and latent space results in semantically and visually plausible transformations in the image space. Finally, the usefulness of the generated samples to the downstream task of data augmentation is demonstrated. We show that classifier performance can be significantly improved by augmenting the training data with OpenGAN samples on classes that are outside of the GAN training distribution.
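The feature-space interpolation mentioned in the abstract can be illustrated with a minimal sketch. It assumes plain linear interpolation between two embedding vectors; the abstract does not state which interpolation scheme the authors use, and the function name `interpolate_embeddings` and its parameters are hypothetical. Each interpolated vector would then be passed to the generator as its conditioning input, a step not shown here.

```python
import numpy as np


def interpolate_embeddings(source, target, steps):
    """Return `steps` evenly spaced points on the line from source to target.

    Assumption: straight-line interpolation in the metric space. The paper
    may use a different scheme; this is only an illustration.
    """
    source = np.asarray(source, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if source.shape != target.shape:
        raise ValueError("embeddings must have the same shape")
    if steps < 2:
        raise ValueError("steps must be at least 2")
    # Column vector of weights in [0, 1]; broadcasting yields shape (steps, dim).
    weights = np.linspace(0.0, 1.0, steps).reshape(-1, 1)
    return (1.0 - weights) * source + weights * target


# Toy 2-dimensional embeddings standing in for metric-model outputs.
path = interpolate_embeddings([0.0, 0.0], [2.0, 4.0], steps=3)
```

The first and last rows of `path` equal the two input embeddings, so the endpoints of the resulting image sequence would correspond to the two source images.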