Ontology-Enhanced Decision-Making for Autonomous Agents in Dynamic and Partially Observable Environments

Paper and Code

May 27, 2024

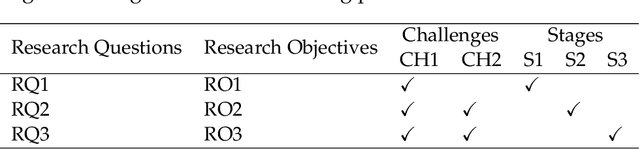

Agents, whether software or hardware, perceive their environment through sensors and act on it through actuators, often in dynamic, partially observable settings. They must cope with incomplete and noisy data, unforeseen situations, and the need to adapt their goals in real time. Traditional reasoning and machine learning (ML) methods, including Reinforcement Learning (RL), help, but they are limited by large data requirements, predefined goals, and long exploration periods. Ontologies offer a way around these limits by integrating diverse information sources, which supports decision-making in complex environments. This thesis introduces an ontology-enhanced decision-making model (OntoDeM) for autonomous agents. OntoDeM enriches agents' domain knowledge, allowing them to interpret unforeseen events, generate or adapt goals, and make better decisions. The key contributions are: (1) an ontology-based method that uses prior knowledge to improve agents' real-time observations; (2) the OntoDeM model, which handles dynamic, unforeseen situations by evolving existing goals or generating new ones; and (3) an implementation and evaluation in four real-world applications, demonstrating the model's effectiveness. Compared with traditional and advanced learning algorithms, OntoDeM performs better at improving agents' observations and decision-making in dynamic, partially observable environments.
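The first contribution, improving a partial observation with prior knowledge, can be pictured with a minimal sketch. Everything here is an assumption made for illustration: the toy `ONTOLOGY` dictionary, the concept names, and the functions `inherited_defaults` and `enrich_observation` are hypothetical and are not taken from the thesis or from any OntoDeM code. The sketch only shows the general idea of filling unobserved fields from a concept hierarchy.

```python
from typing import Optional

# Hypothetical toy ontology: each concept names its parent and some default properties.
ONTOLOGY = {
    "Vehicle": {"parent": None, "defaults": {"can_move": True}},
    "EmergencyVehicle": {"parent": "Vehicle", "defaults": {"priority": "high"}},
    "Ambulance": {"parent": "EmergencyVehicle", "defaults": {"siren": True}},
}


def inherited_defaults(concept: str) -> dict:
    """Collect defaults from a concept and all its ancestors."""
    chain = []
    current: Optional[str] = concept
    while current is not None:
        chain.append(current)
        current = ONTOLOGY[current]["parent"]
    merged: dict = {}
    # Apply the root first so that more specific concepts override general ones.
    for name in reversed(chain):
        merged.update(ONTOLOGY[name]["defaults"])
    return merged


def enrich_observation(observation: dict) -> dict:
    """Fill missing fields (absent or None) with ontology-derived prior knowledge.

    Values the agent actually observed always take precedence over defaults.
    """
    enriched = inherited_defaults(observation["concept"])
    enriched.update({k: v for k, v in observation.items() if v is not None})
    return enriched


# A partial observation: the sensor identified the concept and speed,
# but could not determine the priority.
partial = {"concept": "Ambulance", "speed": 12.0, "priority": None}
print(enrich_observation(partial))
```

In this sketch, observed values are never overwritten; the hierarchy only supplies what the sensors left out. A real ontology would typically be expressed in a language such as OWL and queried with a reasoner rather than a dictionary lookup.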
