On the Implicit Biases of Architecture & Gradient Descent


Oct 08, 2021

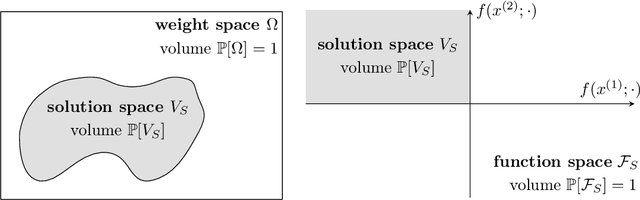

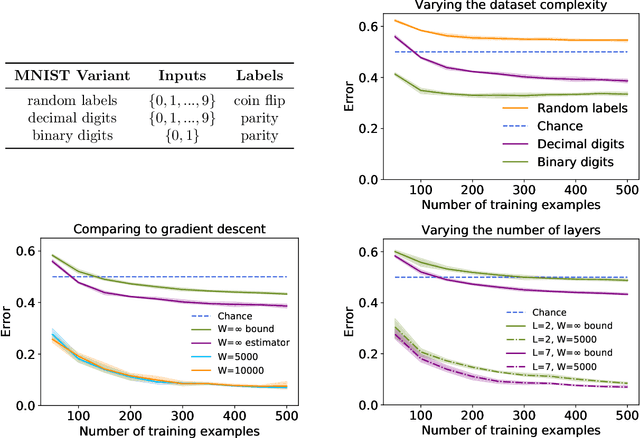

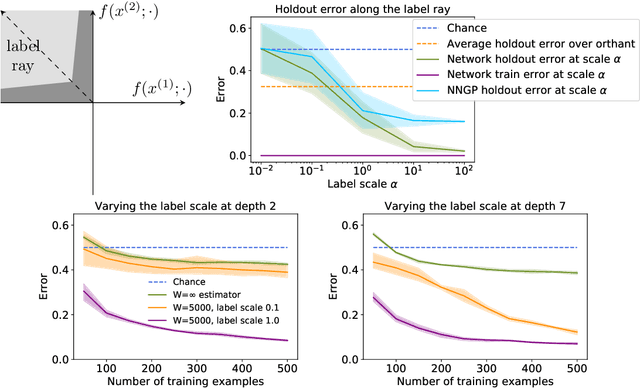

Do neural networks generalise because of bias in the functions returned by gradient descent, or bias already present in the network architecture? Why not both? This paper finds that while typical networks that fit the training data already generalise fairly well, gradient descent can further improve generalisation by selecting networks with a large margin. This conclusion is based on a careful study of the behaviour of infinite width networks trained by Bayesian inference and finite width networks trained by gradient descent. To measure the implicit bias of architecture, new technical tools are developed to both analytically bound and consistently estimate the average test error of the neural network–Gaussian process (NNGP) posterior. This error is found to be already better than chance, corroborating the findings of Valle-Pérez et al. (2019) and underscoring the importance of architecture. Going beyond this result, this paper finds that test performance can be substantially improved by selecting a function with much larger margin than is typical under the NNGP posterior. This highlights a curious fact: minimum a posteriori functions can generalise best, and gradient descent can select for those functions. In summary, new technical tools suggest a nuanced portrait of generalisation involving both the implicit biases of architecture and gradient descent. Code for this paper is available at: https://github.com/jxbz/implicit-bias/.
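
The abstract does not say how the NNGP posterior error is estimated. What follows is therefore only a minimal sketch of the quantity involved, not the paper's estimator or its code. It assumes a bias-free ReLU multilayer perceptron, whose NNGP kernel follows the standard arc-cosine recursion. It also treats the posterior as the GP prior conditioned on every training output having the sign of its label. The average test error is then estimated by naive rejection sampling. That estimate is consistent as the number of samples grows, but it is only practical for a handful of training points. Several pieces are illustrative assumptions: the function names, the toy circle dataset, and the `margin` argument. The `margin` argument is a crude, hypothetical stand-in for conditioning on larger-margin functions, measured in raw output units.

```python
import math
import random


def relu_nngp_kernel(x1, x2, depth=2):
    """NNGP kernel of a bias-free ReLU MLP with weight variance 2 (hypothetical setup)."""
    d = len(x1)
    k12 = sum(a * b for a, b in zip(x1, x2)) / d
    k11 = sum(a * a for a in x1) / d
    k22 = sum(b * b for b in x2) / d
    norm = math.sqrt(k11 * k22)
    if norm == 0.0:
        return 0.0
    for _ in range(depth):
        cos_t = max(-1.0, min(1.0, k12 / norm))
        theta = math.acos(cos_t)
        # Arc-cosine (ReLU) recursion. With weight variance 2 the diagonal terms
        # k11 and k22 are preserved, so `norm` is the same at every layer.
        k12 = norm * (math.sin(theta) + (math.pi - theta) * cos_t) / math.pi
    return k12


def cholesky(a):
    """Lower-triangular L with L L^T = a, for a symmetric positive-definite a."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][i] = math.sqrt(max(s, 1e-12))  # clamp guards against round-off
            else:
                low[i][j] = s / low[j][j]
    return low


def nngp_posterior_test_error(x_train, y_train, x_test, y_test,
                              depth=2, margin=0.0, n_samples=20000, seed=0):
    """Rejection-sampling estimate of the mean test error of GP-prior functions
    that satisfy y * f(x) > margin on every training point."""
    xs = list(x_train) + list(x_test)
    n, n_tr = len(xs), len(x_train)
    gram = [[relu_nngp_kernel(a, b, depth) for b in xs] for a in xs]
    for i in range(n):
        gram[i][i] += 1e-6  # small jitter for numerical stability
    low = cholesky(gram)
    rng = random.Random(seed)
    total_error, accepted = 0.0, 0
    for _ in range(n_samples):
        # f = L z is a joint GP-prior sample on the train and test inputs.
        z = [rng.gauss(0.0, 1.0) for _ in range(n)]
        f = [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(n)]
        # Reject functions that do not fit the training labels (with margin).
        if all(y * fi > margin for y, fi in zip(y_train, f[:n_tr])):
            accepted += 1
            wrong = sum(1 for y, fi in zip(y_test, f[n_tr:]) if y * fi <= 0)
            total_error += wrong / len(y_test)
    if accepted == 0:
        raise ValueError("no samples accepted; raise n_samples or lower margin")
    return total_error / accepted, accepted


def circle(angles):
    """Hypothetical toy inputs: points on the unit circle."""
    return [(math.cos(t), math.sin(t)) for t in angles]


def label(p):
    """Hypothetical toy labels: the sign of the first coordinate."""
    return 1 if p[0] > 0 else -1


if __name__ == "__main__":
    x_tr = circle([0.3, 2.0, 3.5, 5.5])
    x_te = circle([0.1 + 0.8 * i for i in range(8)])
    err, n_acc = nngp_posterior_test_error(
        x_tr, [label(p) for p in x_tr], x_te, [label(p) for p in x_te])
    print(f"estimated posterior test error: {err:.3f} from {n_acc} accepted samples")
```

Raising `margin` keeps only sampled functions that fit the training data by a wider gap. This gives a rough way to see how test error varies with margin in this toy setting. Acceptance rates fall quickly as `margin` or the training set grows, so the sketch will not scale beyond tiny problems.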
