On the Achievable SINR in MU-MIMO Systems Operating in Time-Varying Rayleigh Fading

Paper and Code

Mar 24, 2022

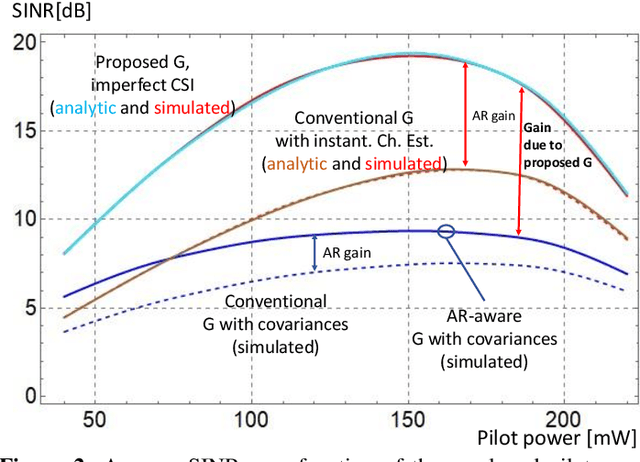

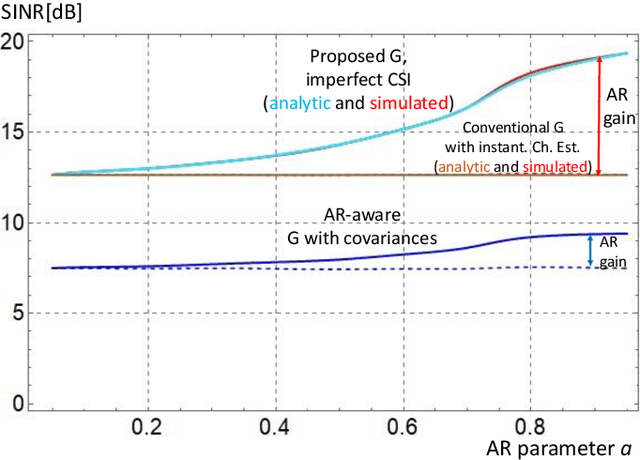

Minimizing the symbol error in the uplink of multi-user multiple-input multiple-output (MU-MIMO) systems is important because the symbol error affects the achieved signal-to-interference-plus-noise ratio (SINR) and thereby the spectral efficiency of the system. Despite the vast literature on minimum mean squared error (MMSE) receivers, previously proposed receivers for block fading channels do not minimize the symbol error in time-varying Rayleigh fading channels. Specifically, we show that the true MMSE receiver structure depends not only on the statistics of the CSI error, but also on the autocorrelation coefficient of the time-variant channel. Calculating the average SINR achieved by the proposed receiver turns out to be highly non-trivial. In this paper, we employ a random-matrix-theoretic approach to derive a quasi-closed form for the average SINR. This form yields exact analytical results that give valuable insight into how the SINR depends on the number of antennas, the employed pilot and data power, and the covariance of the time-varying channel. We benchmark the proposed receiver against recently proposed receivers and find that the proposed MMSE receiver achieves a higher SINR than the previously proposed ones, and that this benefit increases with increasing autoregressive coefficient.
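To make the notion of a time-varying Rayleigh fading channel with an autoregressive coefficient concrete, the following is a minimal sketch that assumes a first-order autoregressive (AR(1)) model with unit-variance, uncorrelated antenna coefficients. The abstract does not spell out the channel model, so the function names (`simulate_ar1_rayleigh`, `lag1_correlation`), the parameter values, and the identity covariance are illustrative assumptions, not the paper's setup. Under this assumption, the lag-1 autocorrelation of the channel equals the AR coefficient `a`.

```python
import numpy as np


def simulate_ar1_rayleigh(num_antennas, num_steps, a, rng):
    """Assumed AR(1) model: h[t] = a*h[t-1] + sqrt(1 - a^2)*w[t], w ~ CN(0, I)."""

    def complex_normal(size):
        # Circularly symmetric complex Gaussian samples with unit variance.
        return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

    h = np.empty((num_steps, num_antennas), dtype=complex)
    h[0] = complex_normal(num_antennas)  # stationary start: h[0] ~ CN(0, I)
    for t in range(1, num_steps):
        h[t] = a * h[t - 1] + np.sqrt(1 - a**2) * complex_normal(num_antennas)
    return h


def lag1_correlation(h):
    """Empirical lag-1 autocorrelation, averaged over time and antennas."""
    numerator = np.mean(h[1:] * np.conj(h[:-1]))
    denominator = np.mean(np.abs(h) ** 2)
    return (numerator / denominator).real


rng = np.random.default_rng(0)
h = simulate_ar1_rayleigh(num_antennas=8, num_steps=20000, a=0.9, rng=rng)
rho = lag1_correlation(h)
avg_power = np.mean(np.abs(h) ** 2)
print(f"empirical lag-1 correlation: {rho:.3f}, average power: {avg_power:.3f}")
```

With `a` close to 1 the channel changes slowly between consecutive symbols, while `a = 0` gives independent fading in every time slot. The empirical estimates should land near `a` and 1, respectively, up to sampling error.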
