On reducing the order of arm-passes bandit streaming algorithms under memory bottleneck


Nov 30, 2021

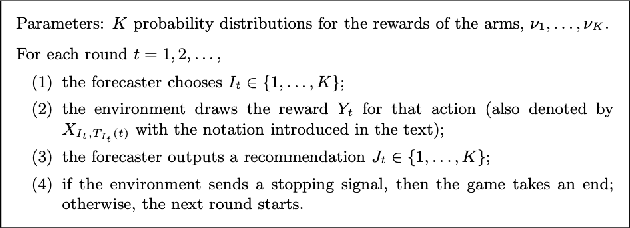

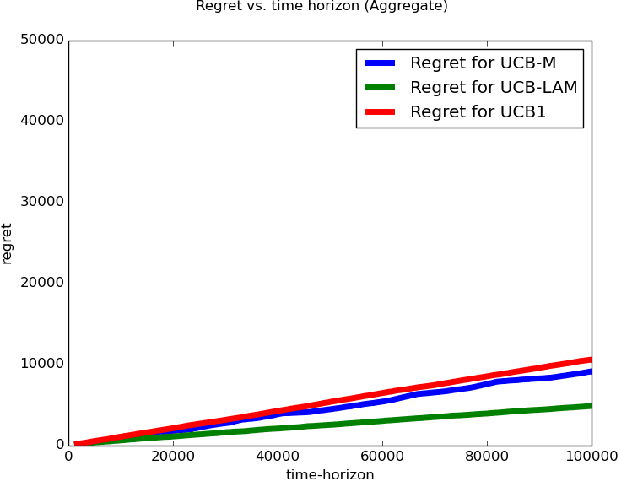

In this work, we explore the multi-armed bandit streaming model, especially in cases where the model faces a resource bottleneck. We build on existing algorithms that are constrained to store only a limited number of arms in memory at any instant. Specifically, we reduce by a logarithmic factor the number of streaming passes a bandit algorithm needs to incur $O(\sqrt{T\log(T)})$ regret. We also provide 2-pass algorithms that, under some initial conditions, incur a similar order of regret.
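The abstract does not spell out the algorithms, so the following is only a rough illustration of the *setting*: arms arrive one at a time in a stream, and besides the arriving arm the learner may keep a single stored arm in memory. It is a minimal sketch assuming Bernoulli rewards and a simple "king vs. challenger" duel rule, not the authors' method, and it is not claimed to achieve the $O(\sqrt{T\log(T)})$ bound. The function names `pull` and `stream_king_challenger` and the parameters `samples_per_duel` and `passes` are hypothetical.

```python
import random
from typing import List, Optional, Tuple


def pull(rng: random.Random, mean: float) -> int:
    """Draw a Bernoulli reward with the given mean (assumed reward model)."""
    return 1 if rng.random() < mean else 0


def stream_king_challenger(means: List[float], horizon: int,
                           samples_per_duel: int, passes: int = 1,
                           seed: int = 0) -> Tuple[int, int, int]:
    """Hypothetical single-stored-arm streaming sketch.

    Arms arrive in list order, once per pass. Only one stored arm (the
    "king") is kept between arrivals. Each arriving challenger is pulled
    `samples_per_duel` times alongside the king; the arm with the higher
    empirical reward is kept. Leftover budget is spent on the final king.
    Returns (king_index, total_reward, pulls_used).
    """
    if not means:
        raise ValueError("need at least one arm")
    rng = random.Random(seed)
    pulls = 0
    reward = 0
    king: Optional[int] = None
    for _ in range(passes):
        for arm, mean in enumerate(means):
            if king is None:
                king = arm  # first arm seen becomes the stored arm
                continue
            if arm == king:
                continue  # no need to duel the stored arm with itself
            if pulls + 2 * samples_per_duel > horizon:
                break  # not enough budget left for another duel
            king_sum = 0
            chal_sum = 0
            for _ in range(samples_per_duel):
                king_sum += pull(rng, means[king])
                chal_sum += pull(rng, mean)
            pulls += 2 * samples_per_duel
            reward += king_sum + chal_sum
            if chal_sum > king_sum:
                king = arm  # challenger replaces the stored arm
    assert king is not None
    # Exploitation phase: spend any remaining budget on the surviving king.
    while pulls < horizon:
        reward += pull(rng, means[king])
        pulls += 1
    return king, reward, pulls


means = [0.1, 0.2, 0.9, 0.3]
king, reward, pulls = stream_king_challenger(
    means, horizon=10_000, samples_per_duel=200, passes=2)
# Realized regret against always playing the best arm.
print(king, pulls, 10_000 * max(means) - reward)
```

Each extra pass gives every arm another chance to dethrone the stored arm, which is the kind of passes-versus-regret trade-off the abstract refers to; here the number of passes is just a fixed parameter.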

* 15 pages, 2 figures. arXiv admin note: text overlap with arXiv:1901.08387 by other authors without attribution
