On Outer Bi-Lipschitz Extensions of Linear Johnson-Lindenstrauss Embeddings of Low-Dimensional Submanifolds of $\mathbb{R}^N$

Jun 07, 2022

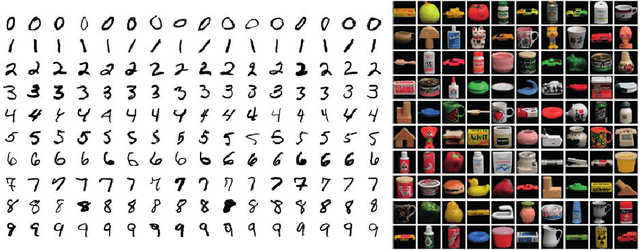

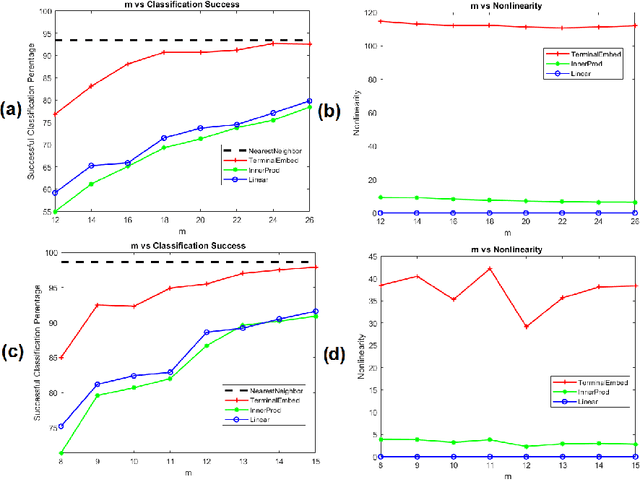

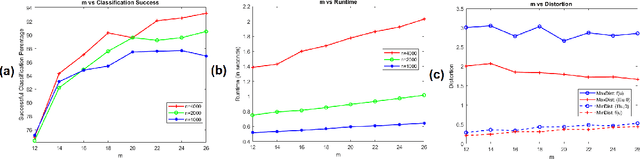

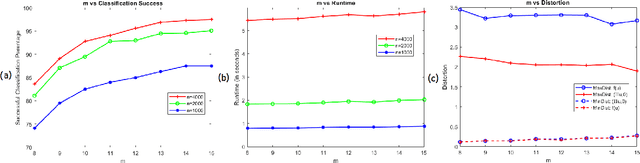

Let $\mathcal{M}$ be a compact $d$-dimensional submanifold of $\mathbb{R}^N$ with reach $\tau$ and volume $V_{\mathcal M}$. Fix $\epsilon \in (0,1)$. In this paper we prove that there exists a nonlinear function $f: \mathbb{R}^N \rightarrow \mathbb{R}^{m}$ with $m \leq C \left(d / \epsilon^2 \right) \log \left(\frac{\sqrt[d]{V_{\mathcal M}}}{\tau} \right)$ such that $$(1 - \epsilon) \| {\bf x} - {\bf y} \|_2 \leq \left\| f({\bf x}) - f({\bf y}) \right\|_2 \leq (1 + \epsilon) \| {\bf x} - {\bf y} \|_2$$ holds for all ${\bf x} \in \mathcal{M}$ and ${\bf y} \in \mathbb{R}^N$. In effect, $f$ not only serves as a bi-Lipschitz function from $\mathcal{M}$ into $\mathbb{R}^{m}$ with bi-Lipschitz constants close to one, but also approximately preserves the distance from every point of $\mathbb{R}^N$ outside $\mathcal{M}$ to every point of $\mathcal{M}$. Furthermore, the proof is constructive and yields an algorithm that works well in practice. In particular, we demonstrate empirically that such nonlinear functions allow for more accurate compressive nearest neighbor classification than standard linear Johnson-Lindenstrauss embeddings.
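To make the two-sided inequality concrete, the following minimal sketch measures the smallest and largest ratio $\| f({\bf x}) - f({\bf y}) \|_2 / \| {\bf x} - {\bf y} \|_2$ over sampled pairs. It is not the nonlinear construction from the paper: as a stand-in it uses a plain linear Gaussian Johnson-Lindenstrauss map, and it takes the unit circle (so $d = 1$) as the manifold. The helper names `gaussian_jl` and `distortion_range`, the dimensions `N` and `m`, and the sample sizes are all assumptions chosen for illustration.

```python
import numpy as np


def gaussian_jl(N, m, rng):
    """Random m x N Gaussian matrix scaled so squared norms are preserved in expectation."""
    return rng.standard_normal((m, N)) / np.sqrt(m)


def distortion_range(f, X, Y):
    """Return (min, max) of ||f(x) - f(y)|| / ||x - y|| over all pairs x in X, y in Y.

    Pairs with x == y are skipped to avoid dividing by zero.
    """
    FX, FY = f(X), f(Y)
    num = np.linalg.norm(FX[:, None, :] - FY[None, :, :], axis=2)
    den = np.linalg.norm(X[:, None, :] - Y[None, :, :], axis=2)
    mask = den > 0
    ratios = num[mask] / den[mask]
    return ratios.min(), ratios.max()


rng = np.random.default_rng(0)
N, m = 200, 40  # ambient and embedding dimensions (illustrative values)

# Sample points on a unit circle embedded in the first two coordinates of R^N.
t = rng.uniform(0.0, 2.0 * np.pi, size=100)
X = np.zeros((100, N))
X[:, 0] = np.cos(t)
X[:, 1] = np.sin(t)

# Sample points of R^N that (almost surely) do not lie on the circle.
Y = rng.standard_normal((50, N))

A = gaussian_jl(N, m, rng)


def f(P):
    # Linear stand-in embedding; the paper's f is nonlinear.
    return P @ A.T


lo_on, hi_on = distortion_range(f, X, X)    # pairs within the manifold
lo_off, hi_off = distortion_range(f, X, Y)  # manifold point vs. ambient point

print(f"on-manifold ratios:  [{lo_on:.3f}, {hi_on:.3f}]")
print(f"off-manifold ratios: [{lo_off:.3f}, {hi_off:.3f}]")
```

A map satisfying the inequality above with parameter $\epsilon$ would keep both printed ranges inside $[1 - \epsilon, 1 + \epsilon]$. Comparing the on-manifold and off-manifold ranges for a linear map gives a rough baseline against which an outer bi-Lipschitz extension could be judged.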
