On How Users Edit Computer-Generated Visual Stories


Mar 08, 2019

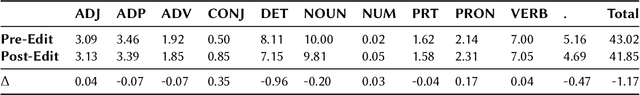

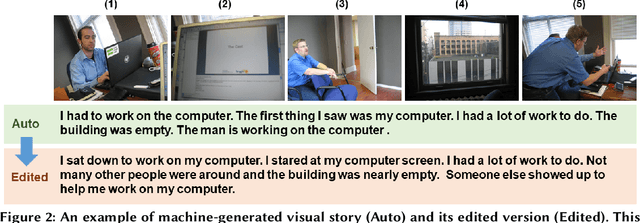

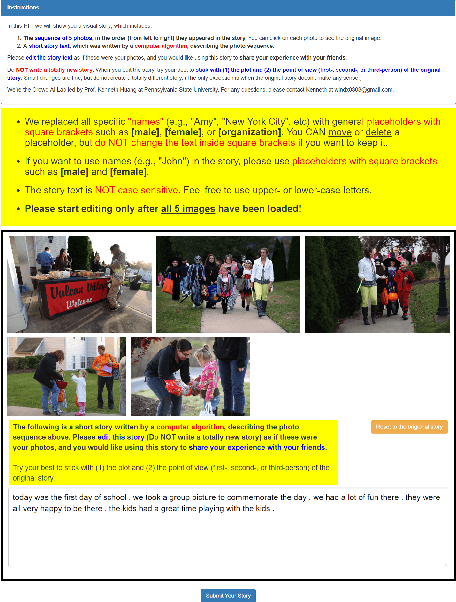

A significant body of research in Artificial Intelligence (AI) has focused on generating stories automatically, based either on prior story plots or on input images. However, the literature has little to say about how users would receive and use these stories. Given the quality of stories generated by modern AI algorithms, users will almost inevitably have to edit these stories before putting them to real use. In this paper, we present the first analysis of how human users edit machine-generated stories. We obtained 962 short stories generated by one of the state-of-the-art visual storytelling models. For each story, we recruited five crowd workers from Amazon Mechanical Turk to edit it. Our analysis of these edits shows that, on average, users (i) slightly shortened machine-generated stories, (ii) increased lexical diversity in these stories, and (iii) often replaced nouns and their determiners/articles with pronouns. Our study provides a better understanding of how users receive and edit machine-generated stories, which can help future researchers create more usable and helpful story generation systems.
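As an illustration of findings (i) and (ii), the following minimal sketch compares a machine-generated story with an edited version by token count and lexical diversity. It assumes lexical diversity is measured as a type-token ratio (distinct tokens divided by total tokens), which the abstract does not specify. The tokenizer, the function names, and the example sentences are all hypothetical and are not taken from the paper or its data.

```python
import re

def tokenize(text):
    """Lowercase word tokenizer (a simplification; punctuation is dropped)."""
    return re.findall(r"[a-z0-9']+", text.lower())

def type_token_ratio(tokens):
    """Distinct tokens divided by total tokens; 0.0 for empty input."""
    return len(set(tokens)) / len(tokens) if tokens else 0.0

def edit_stats(original, edited):
    """Compare a story before and after a human edit.

    Assumption: lexical diversity is approximated by type-token ratio.
    """
    before, after = tokenize(original), tokenize(edited)
    return {
        "length_change": len(after) - len(before),  # negative means shortened
        "ttr_before": type_token_ratio(before),
        "ttr_after": type_token_ratio(after),
    }

# Made-up example: the edit merges sentences and swaps a repeated noun phrase
# for a pronoun, which shortens the story and raises the type-token ratio.
machine_story = "The dog ran. The dog was happy. The dog ate."
edited_story = "The dog ran. He was happy and ate."
stats = edit_stats(machine_story, edited_story)
```

Type-token ratio is sensitive to text length, so comparing stories of very different lengths with this measure alone could be misleading. A per-story comparison of original and edited versions, as sketched here, limits that effect but does not remove it.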
