On Error Correction Neural Networks for Economic Forecasting

Paper and Code

Apr 11, 2020

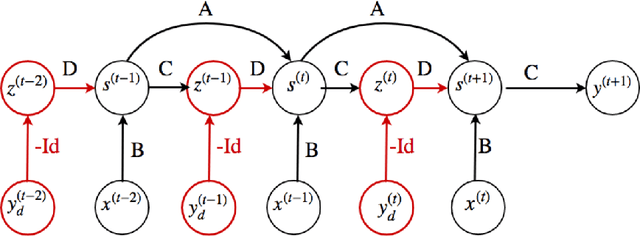

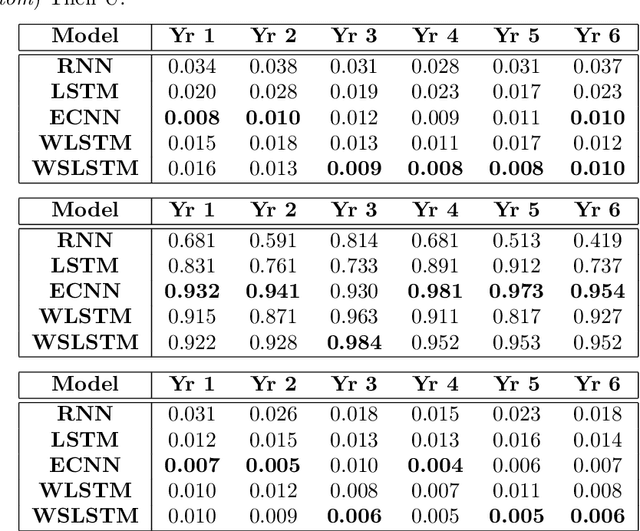

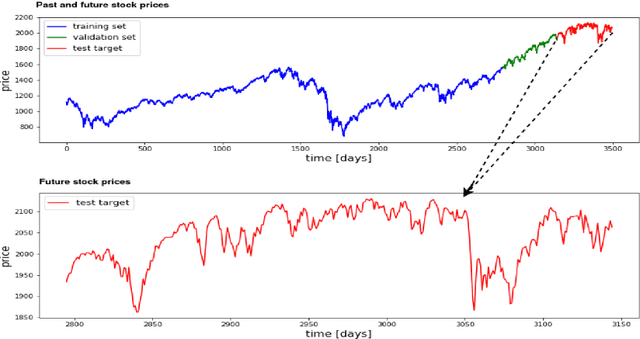

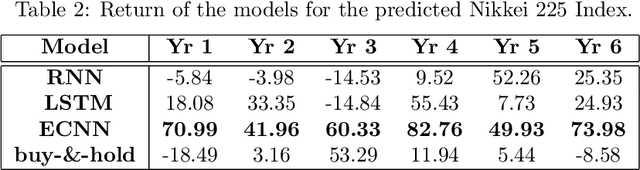

Recurrent neural networks (RNNs) are well suited to learning non-linear dependencies in dynamical systems from observed time series data. In practice, not all of the external variables driving such systems are known a priori, especially in economic forecasting. A class of RNNs called Error Correction Neural Networks (ECNNs) was designed to compensate for missing input variables. It does this by feeding the error made in the previous step back into the current step. The ECNN is implemented in Python by computing the appropriate gradients, and it is tested on stock market predictions. As expected, it outperformed the simple RNN, the LSTM, and other hybrid models that involve a de-noising pre-processing step. The intuition for the latter is that de-noising may lead to loss of information.
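The error feedback described above can be illustrated with a minimal forward-pass sketch. It assumes a commonly cited form of the ECNN state update, s_t = tanh(A s_{t-1} + B u_t + D tanh(C s_{t-1} - y^d_{t-1})) with output y_t = C s_t, where y^d_{t-1} is the value observed at the previous step. Whether the paper uses exactly this form is an assumption, and the names `ecnn_step`, `matvec`, `random_matrix`, the matrix shapes, and the toy data are all hypothetical. Only the standard library is used, and training (the gradient computation mentioned in the abstract) is not shown.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def ecnn_step(s_prev, u_t, y_obs_prev, A, B, C, D):
    """One ECNN state update under the assumed formulation.

    s_prev     : previous hidden state s_{t-1}
    u_t        : known external inputs at time t
    y_obs_prev : observed target at time t-1 (y^d_{t-1})
    """
    # What the model itself predicted for the previous step.
    y_model_prev = matvec(C, s_prev)
    # Squashed error of the previous step; it stands in for missing inputs.
    error = [math.tanh(ym - yo) for ym, yo in zip(y_model_prev, y_obs_prev)]
    # Recurrent term, input term, and error-correction term.
    rec = matvec(A, s_prev)
    inp = matvec(B, u_t)
    cor = matvec(D, error)
    s_t = [math.tanh(r + i + c) for r, i, c in zip(rec, inp, cor)]
    y_t = matvec(C, s_t)
    return s_t, y_t


def random_matrix(rows, cols, rng, scale=0.5):
    """Small random weights, purely illustrative (no training here)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)]
            for _ in range(rows)]


rng = random.Random(0)
n_state, n_in, n_out = 4, 2, 1
A = random_matrix(n_state, n_state, rng)
B = random_matrix(n_state, n_in, rng)
C = random_matrix(n_out, n_state, rng)
D = random_matrix(n_state, n_out, rng)

# Toy data: observed_prev[t] is the target observed one step before inputs[t].
inputs = [[0.1, -0.2], [0.3, 0.0], [-0.1, 0.4]]
observed_prev = [[0.0], [0.05], [0.02]]

s = [0.0] * n_state
forecasts = []
for u_t, y_obs_prev in zip(inputs, observed_prev):
    s, y = ecnn_step(s, u_t, y_obs_prev, A, B, C, D)
    forecasts.append(y)
```

In this sketch, setting `D` to zero, or feeding back an observation equal to the model's own previous output, makes the correction term vanish, so the update reduces to that of a simple RNN.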
