On Efficient Real-Time Semantic Segmentation: A Survey

Jun 17, 2022

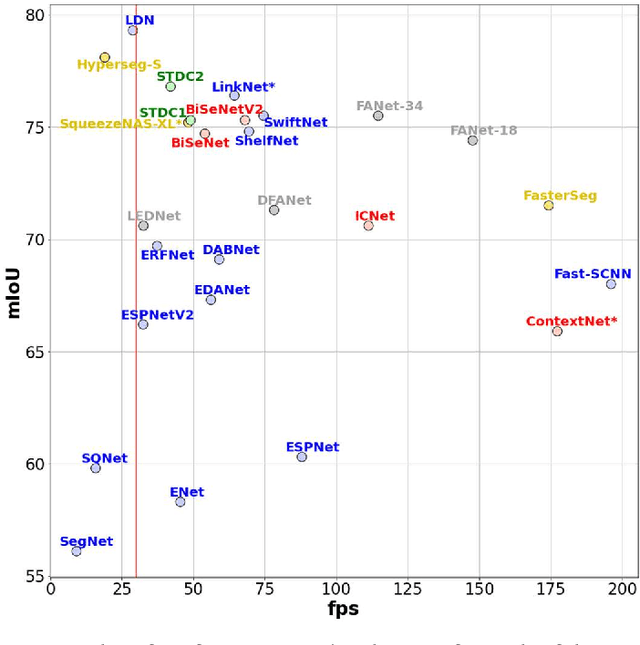

Semantic segmentation is the problem of assigning a class label to every pixel in an image, and it is an important component of an autonomous vehicle vision stack, facilitating scene understanding and object detection. However, many of the top-performing semantic segmentation models are complex and computationally expensive, and as such are not suited to deployment onboard autonomous vehicle platforms, where computational resources are limited and low-latency operation is a vital requirement. In this survey, we take a thorough look at works that aim to address this mismatch with more compact and efficient models capable of deployment on low-memory embedded systems while meeting the constraint of real-time inference. We discuss several of the most prominent works in the field, placing them within a taxonomy based on their major contributions. Finally, we evaluate the inference speed of the discussed models under consistent hardware and software setups: one representing a typical research environment with a high-end GPU, and one representing a realistic deployment scenario using low-memory embedded GPU hardware. Our experimental results demonstrate that many works are capable of real-time performance on resource-constrained hardware, while illustrating the consistent trade-off between latency and accuracy.
