Offline Deep Reinforcement Learning for Dynamic Pricing of Consumer Credit

Paper and Code

Mar 06, 2022

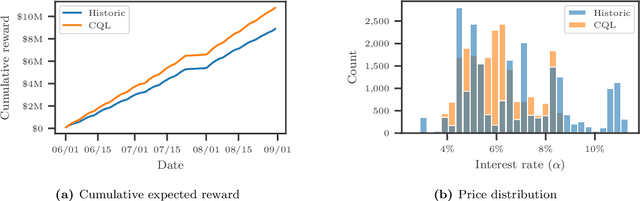

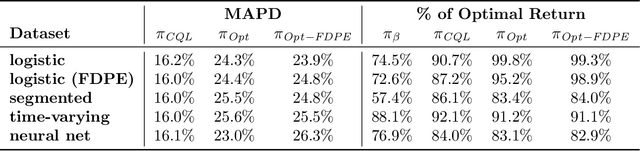

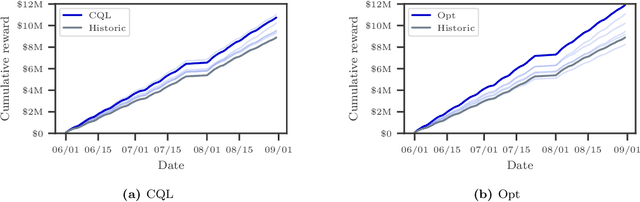

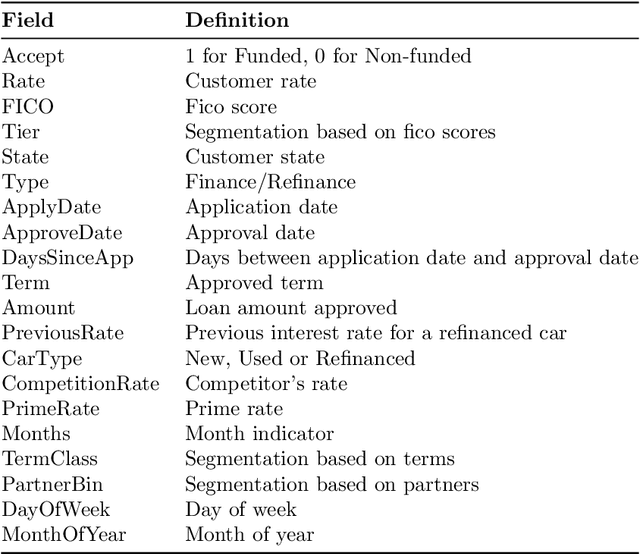

We introduce a method for pricing consumer credit using recent advances in offline deep reinforcement learning. This approach relies on a static dataset and requires no assumptions on the functional form of demand. Using both real and synthetic data on consumer credit applications, we demonstrate that our approach using the conservative Q-Learning algorithm is capable of learning an effective personalized pricing policy without any online interaction or price experimentation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge