Occlusion-Robust MVO: Multimotion Estimation Through Occlusion Via Motion Closure


May 13, 2019

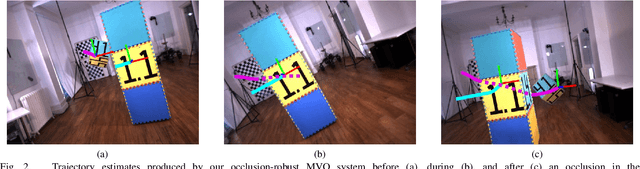

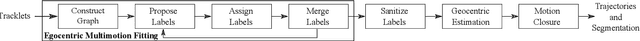

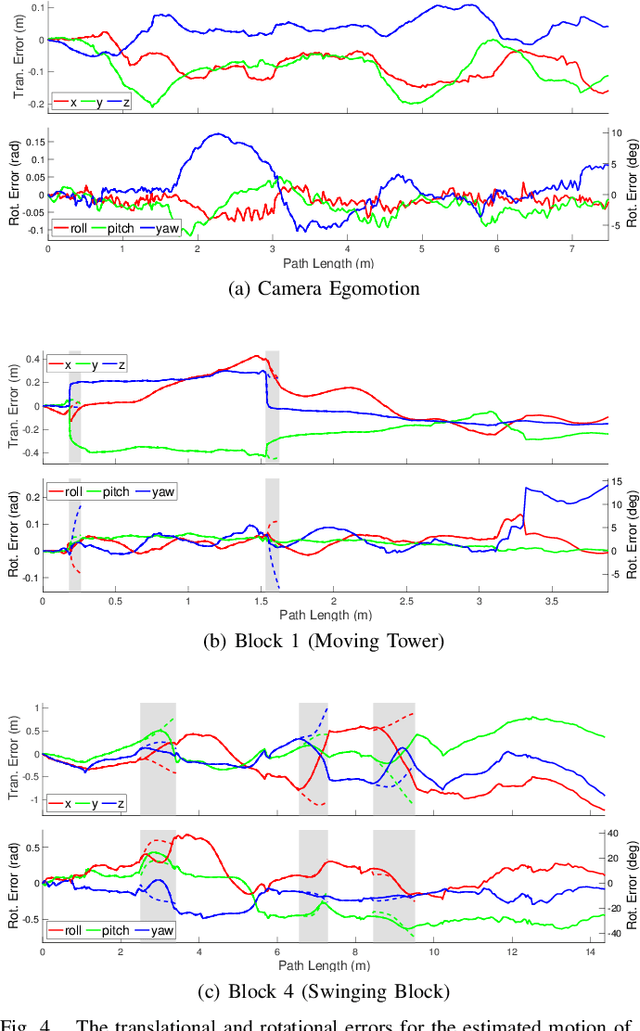

Visual motion estimation is an integral and well-studied challenge in autonomous navigation. Recent work has addressed multimotion estimation, which is especially challenging in highly dynamic environments. Such environments not only comprise multiple, complex motions but also tend to exhibit significant occlusion. Previous work in multiple object tracking has focused on maintaining the integrity of object tracks but usually relies on specific appearance-based descriptors or constrained motion models. These approaches are very effective in specific applications but do not generalize to the full multimotion estimation problem. This paper extends the multimotion visual odometry (MVO) pipeline to estimate multiple motions, including the camera egomotion, through occlusion by employing physically founded motion priors. This allows the pipeline to consistently estimate the full trajectory of every motion in a scene and to recognize when temporarily occluded motions become unoccluded. The estimation performance of the pipeline is evaluated on real-world data from the Oxford Multimotion Dataset.
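The general idea of carrying a motion through occlusion with a motion prior, and then recognizing it when it reappears, can be illustrated with a deliberately simplified sketch. It assumes a translation-only, constant-velocity prior and a fixed Euclidean gate; the `Track` class, the `extrapolate` and `match_reappearance` functions, and the 0.5 m gate are hypothetical illustrations and are not taken from the MVO pipeline described here.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical record of one motion's last observed state (translation only)."""
    position: tuple[float, float, float]  # last observed position (m)
    velocity: tuple[float, float, float]  # estimated velocity, assumed constant (m/s)
    last_seen: float                      # timestamp of the last observation (s)


def extrapolate(track: Track, t: float) -> tuple[float, ...]:
    """Predict the position at time t under an assumed constant-velocity prior."""
    dt = t - track.last_seen
    return tuple(p + v * dt for p, v in zip(track.position, track.velocity))


def match_reappearance(occluded: list[Track], observed, t: float, gate: float = 0.5):
    """Return the index of the occluded track whose predicted position is nearest
    to the newly observed position, provided it lies within `gate` metres;
    return None if no prediction is close enough."""
    best, best_dist = None, gate
    for i, track in enumerate(occluded):
        d = math.dist(extrapolate(track, t), observed)
        if d <= best_dist:
            best, best_dist = i, d
    return best


# Two motions lost to occlusion at t = 0 s; a new motion is observed at t = 2 s.
occluded = [
    Track((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), 0.0),
    Track((5.0, 5.0, 0.0), (0.0, -1.0, 0.0), 0.0),
]
print(match_reappearance(occluded, (2.1, 0.0, 0.0), 2.0))  # -> 0
```

Greedy nearest-prediction gating keeps the sketch short; a fuller treatment would weight the distance by the prior's uncertainty, which grows the longer a motion stays occluded.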
