Object Recognition in Different Lighting Conditions at Various Angles by Deep Learning Method


Oct 18, 2022

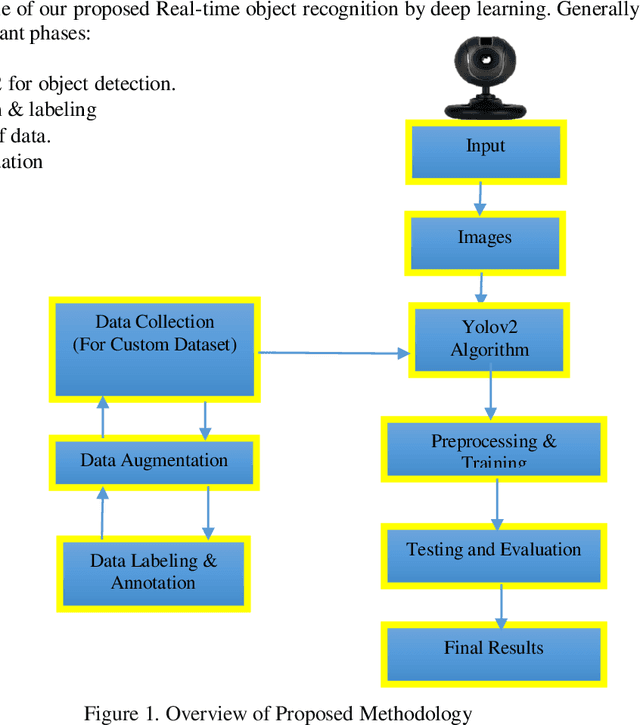

Modern computer vision and object detection methods rely heavily on neural networks and deep learning. This active research area has applications such as autonomous driving, aerial photography, security, and monitoring. State-of-the-art object detection methods draw rectangular bounding boxes around an object to locate it accurately. Modern object recognition algorithms, however, are sensitive to multiple factors, such as illumination, occlusion, viewing angle, and camera rotation, as well as cost. Deep learning-based object recognition is therefore expected to significantly increase recognition speed and robustness to external interference. In this study, we use convolutional neural networks (CNNs) to recognize items; these networks offer end-to-end learning, sparse connectivity, and weight sharing. This article aims to classify the name of each object based on the position of its detected bounding box. At different distances, we obtain recognition results with different confidence levels. Through this study, we find that the model's recognition accuracy is mainly influenced by the proportion of the frame that objects occupy and by the number of samples. When an object occupies a small proportion of the camera frame, we obtain higher recognition accuracy; when the number of samples is much smaller, we also obtain greater recognition accuracy. Because the epidemic has had a great impact on the world economy, designing a cheaper object recognition system is the need of the hour.
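The two CNN properties named in the abstract, sparse connectivity and weight sharing, can be illustrated with a minimal sketch of a single convolution in plain Python. This is not the authors' model: the function name `conv2d_valid`, the toy image, and the edge kernel are all hypothetical and chosen only for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide one shared kernel over a 2D image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Sparse connectivity: each output value reads only a kh x kw patch.
            # Weight sharing: the same kernel values are reused at every (i, j).
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


# Hypothetical toy input: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 1x2 kernel that responds to a left-to-right intensity increase.
edge_kernel = [[-1, 1]]

feature_map = conv2d_valid(image, edge_kernel)
```

Here the kernel holds only two weights, yet it produces every value of the feature map, and each output depends on just two neighboring pixels. A real CNN stacks many such learned kernels with nonlinearities and pooling.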
