Normalizing Flows for Conformal Regression

Paper and Code

Jun 05, 2024

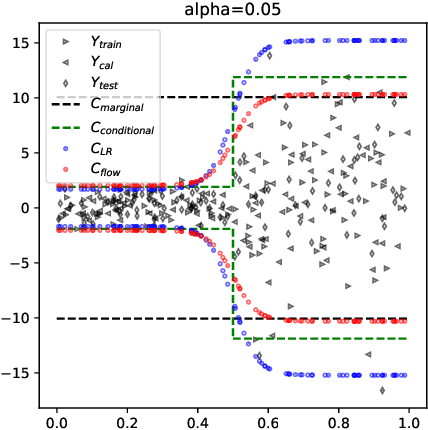

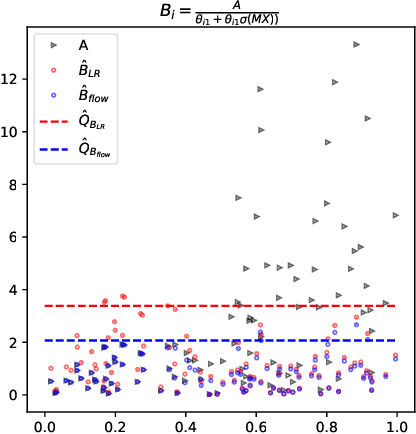

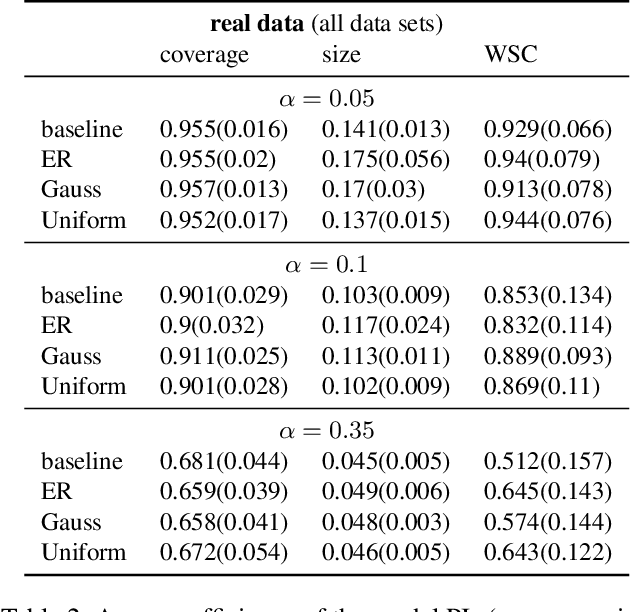

Conformal Prediction (CP) algorithms estimate the uncertainty of a prediction model by calibrating its outputs on labeled data. The same calibration scheme usually applies to any model and data without modifications. The obtained prediction intervals are valid by construction but could be inefficient, i.e. unnecessarily big, if the prediction errors are not uniformly distributed over the input space. We present a general scheme to localize the intervals by training the calibration process. The standard prediction error is replaced by an optimized distance metric that depends explicitly on the object attributes. Learning the optimal metric is equivalent to training a Normalizing Flow that acts on the joint distribution of the errors and the inputs. Unlike the Error Re-weighting CP algorithm of Papadopoulos et al. (2008), the framework allows estimating the gap between nominal and empirical conditional validity. The approach is compatible with existing locally-adaptive CP strategies based on re-weighting the calibration samples and applies to any point-prediction model without retraining.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge