No Modes left behind: Capturing the data distribution effectively using GANs


Feb 02, 2018

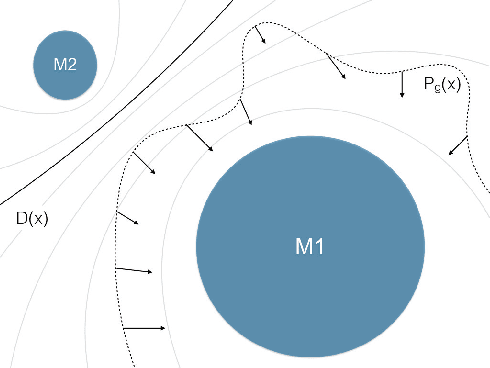

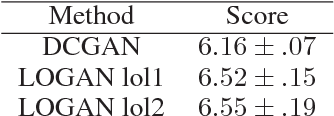

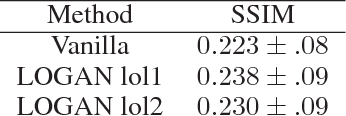

Generative adversarial networks (GANs), while very versatile in realistic image synthesis, are still sensitive to the input distribution. Given data with an imbalanced distribution, the networks are susceptible to missing modes and failing to capture the data distribution. While various methods have been tried to improve the training of GANs, they have not addressed the challenge of covering the full data distribution. Specifically, a generator is not penalized for missing a mode, and we show that these methods therefore remain susceptible to not capturing the full data distribution. In this paper, we propose a simple approach that combines an encoder-based objective with novel loss functions for the generator and discriminator, which improves the solution in terms of capturing missing modes. We validate through detailed analysis on toy and real datasets that the proposed method yields substantial improvements. The quantitative and qualitative results demonstrate that the proposed method alleviates the problem of missing modes and improves the training of GANs.
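To make "missing a mode" concrete, the sketch below counts how many modes of a toy mixture are covered by a set of samples. It is not the method or the evaluation protocol of the paper: the ring-of-Gaussians layout, the 3-standard-deviation threshold, and the names `ring_modes`, `sample_mixture`, and `mode_coverage` are all assumptions chosen for illustration, and the "collapsed generator" is simulated by giving some modes zero weight rather than by training a GAN.

```python
import math
import random


def ring_modes(n_modes=8, radius=2.0):
    """Centers of a toy mixture: n_modes Gaussians evenly spaced on a circle (assumed layout)."""
    return [
        (radius * math.cos(2 * math.pi * k / n_modes),
         radius * math.sin(2 * math.pi * k / n_modes))
        for k in range(n_modes)
    ]


def sample_mixture(centers, weights, n, std=0.05, seed=0):
    """Draw n 2-D points; each point picks a mode according to weights, then adds Gaussian noise."""
    rng = random.Random(seed)
    picks = rng.choices(range(len(centers)), weights=weights, k=n)
    return [
        (rng.gauss(centers[i][0], std), rng.gauss(centers[i][1], std))
        for i in picks
    ]


def mode_coverage(samples, centers, std=0.05, min_count=1):
    """Return (number of covered modes, per-mode counts).

    A sample is assigned to its nearest center and counted only if it lies
    within 3 * std of that center (an assumed, commonly used threshold).
    """
    counts = [0] * len(centers)
    for x, y in samples:
        dists = [math.hypot(x - cx, y - cy) for cx, cy in centers]
        nearest = min(range(len(centers)), key=dists.__getitem__)
        if dists[nearest] <= 3 * std:
            counts[nearest] += 1
    covered = sum(c >= min_count for c in counts)
    return covered, counts


if __name__ == "__main__":
    centers = ring_modes(8)
    # Imbalanced data: one mode is much rarer than the others.
    data = sample_mixture(centers, [10, 10, 10, 10, 10, 10, 10, 1], 2000)
    # Stand-in for a generator that has dropped six of the eight modes.
    collapsed = sample_mixture(centers, [1, 1, 0, 0, 0, 0, 0, 0], 2000, seed=1)
    print("data:", mode_coverage(data, centers))
    print("collapsed generator:", mode_coverage(collapsed, centers))
```

Comparing the per-mode counts of generated samples against those of the data shows both whether a mode is missing entirely and whether a rare mode is under-represented, which is the failure the abstract describes for imbalanced data.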
