Neural Tangent Kernel of Matrix Product States: Convergence and Applications

Paper and Code

Nov 28, 2021

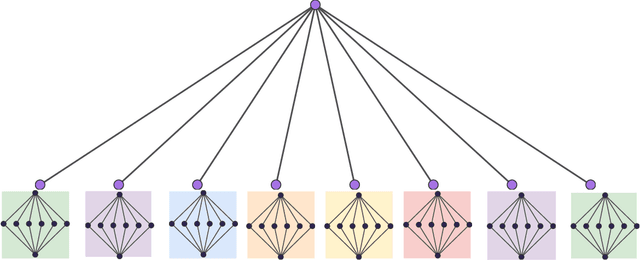

In this work, we study the Neural Tangent Kernel (NTK) of Matrix Product States (MPS) and its convergence in the limit of infinite bond dimension. We prove that, as the bond dimensions of the MPS go to infinity, the NTK of the MPS asymptotically converges to a constant matrix both at initialization and throughout gradient descent training. The proof rests on the observation that the variation of the MPS tensors during training asymptotically goes to zero in this limit. By showing that the NTK of MPS is positive definite, we guarantee convergence of the MPS during training in function space (the space of functions represented by MPS) without any extra assumptions on the data set. We then consider two settings, (supervised) Regression with Mean Square Error (RMSE) and (unsupervised) Born Machines (BM), and analyze their dynamics in the infinite bond dimension limit. We derive the ordinary differential equations (ODEs) that describe the dynamics of the MPS responses in RMSE and BM and solve them in closed form. For regression, we consider Mercer kernels (Gaussian kernels) and find that the evolution of the mean of the MPS responses follows the largest eigenvalue of the NTK. For BM, the orthogonality of the kernel functions decouples the evolution of different modes (samples), which yields the "characteristic time" of convergence in training.
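To make the constant-kernel picture concrete, here is a minimal sketch of the textbook gradient-flow dynamics that a constant, positive-definite kernel implies for squared-error regression. It is not the paper's derivation. The ODE form `df/dt = -Theta (f - y)`, the unit learning rate, the toy data, and the use of a Gaussian Gram matrix as a stand-in for the NTK are all assumptions made for illustration, and the helper names `gaussian_kernel` and `kernel_flow` are hypothetical.

```python
import numpy as np

def gaussian_kernel(x, sigma=0.3):
    # Gram matrix K[i, j] = exp(-(x_i - x_j)^2 / (2 sigma^2)) for 1-D inputs.
    # Assumption: this stands in for a constant NTK; it is not the MPS kernel.
    d2 = (x[:, None] - x[None, :]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))

def kernel_flow(theta, f0, y, t):
    # Closed-form solution of df/dt = -theta @ (f - y) with f(0) = f0,
    # valid when theta is a constant symmetric matrix (unit learning rate assumed).
    evals, evecs = np.linalg.eigh(theta)
    coeffs = evecs.T @ (f0 - y)          # residual expressed in the eigenbasis
    return y + evecs @ (np.exp(-evals * t) * coeffs)

# Toy training set (hypothetical data, for illustration only).
x = np.linspace(-1.0, 1.0, 5)
y = np.sin(np.pi * x)
f0 = np.zeros_like(y)                    # responses at initialization

theta = gaussian_kernel(x)
f_late = kernel_flow(theta, f0, y, t=50.0)
print(np.max(np.abs(f_late - y)))        # residual after long training time
```

In this sketch each eigen-direction of the kernel relaxes independently at a rate set by its eigenvalue, so a strictly positive spectrum is what drives the residual to zero for any targets `y`.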
