Neural Radiance Projection

Paper and Code

Mar 15, 2022

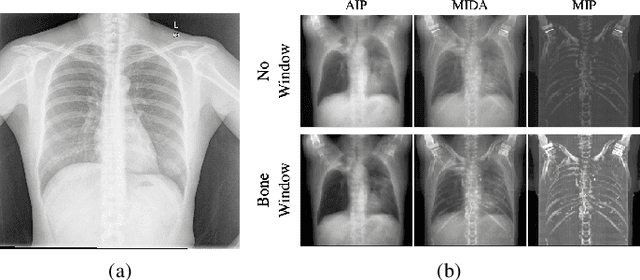

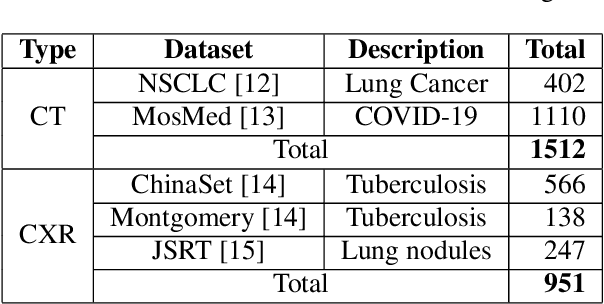

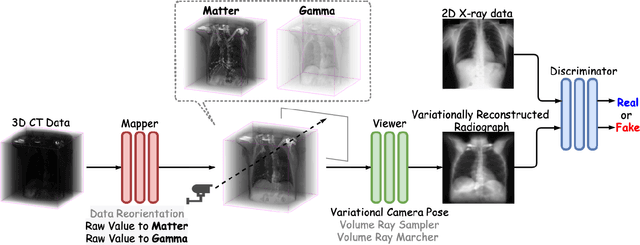

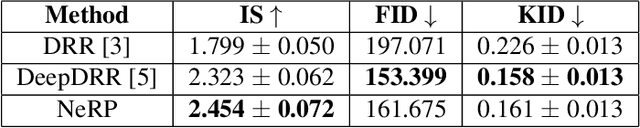

The proposed method, Neural Radiance Projection (NeRP), addresses three fundamental challenges in training a convolutional neural network for X-ray image segmentation: missing or limited human-annotated datasets, ambiguity in per-pixel labels, and imbalance between the positive and negative classes. By harnessing a generative adversarial network, we can synthesize a massive number of physics-based X-ray images, so-called Variationally Reconstructed Radiographs (VRRs), together with their segmentations, from more accurately labeled 3D Computed Tomography data. As a result, VRRs are more faithful than the outputs of other projection methods as measured by photo-realism metrics. Models trained with the added NeRP outputs also surpass vanilla UNet models trained on the same pairs of X-ray images.
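To make the idea of deriving paired 2D images and labels from labeled CT concrete, here is a minimal sketch of a plain parallel-beam projection. It is not the NeRP method, which relies on a generative adversarial network. It only illustrates, under simplifying assumptions, how one pass over a 3D volume can yield both a radiograph-like image (via the Beer-Lambert law) and its per-pixel segmentation. The function name `project_volume`, its parameters, and the toy volume are all hypothetical.

```python
import numpy as np

def project_volume(mu, mask, axis=1, voxel_size_cm=0.1):
    """Parallel-beam projection of an attenuation volume and its 3D label mask.

    Hypothetical helper for illustration only; not the paper's projector.
    mu:   3D array of linear attenuation coefficients (1/cm).
    mask: 3D boolean array marking the labeled structure.
    """
    # Line integral of attenuation along each ray (rays run along `axis`).
    path_integral = mu.sum(axis=axis) * voxel_size_cm
    # Beer-Lambert law: transmitted fraction, 1.0 means no attenuation.
    transmission = np.exp(-path_integral)
    # A pixel is positive if any voxel along its ray is labeled.
    label_2d = mask.any(axis=axis)
    return transmission, label_2d

# Toy volume: a 2x2x2 attenuating cube inside a 4x4x4 empty grid.
mu = np.zeros((4, 4, 4))
mask = np.zeros((4, 4, 4), dtype=bool)
mu[1:3, 1:3, 1:3] = 0.5
mask[1:3, 1:3, 1:3] = True

image, label = project_volume(mu, mask, axis=1, voxel_size_cm=1.0)
positive_fraction = label.mean()  # share of positive pixels in the 2D label
```

Because the 2D label is computed from the same volume as the image, the pair is aligned by construction, and `positive_fraction` gives a quick view of the class imbalance mentioned above.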
