Neural Embeddings for Text

Paper and Code

Aug 17, 2022

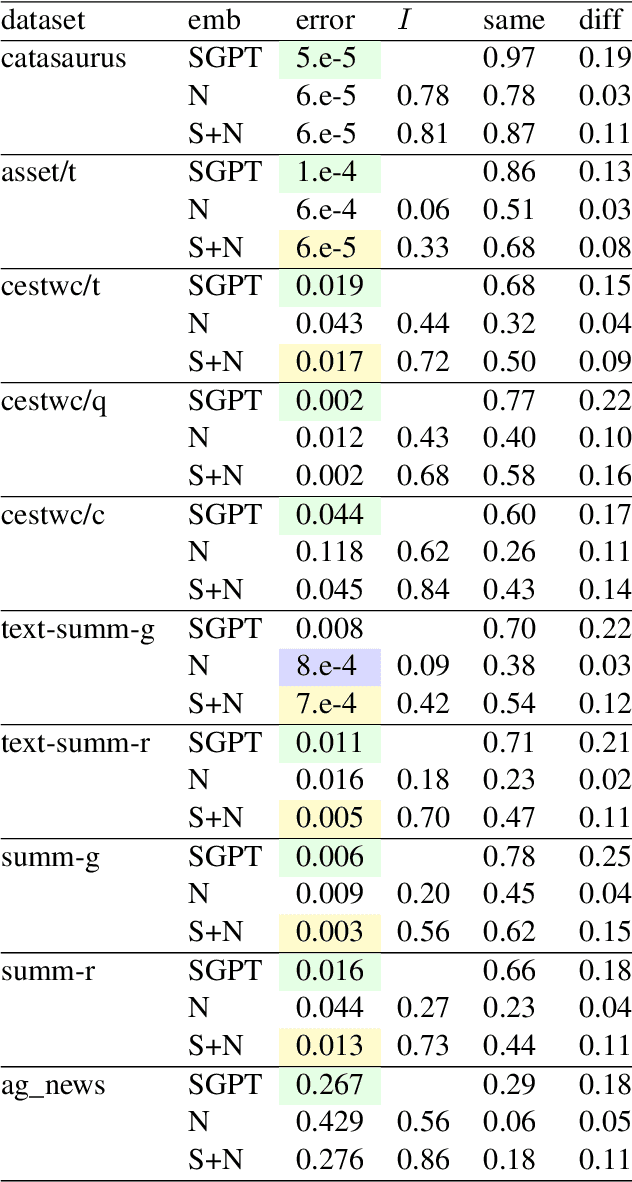

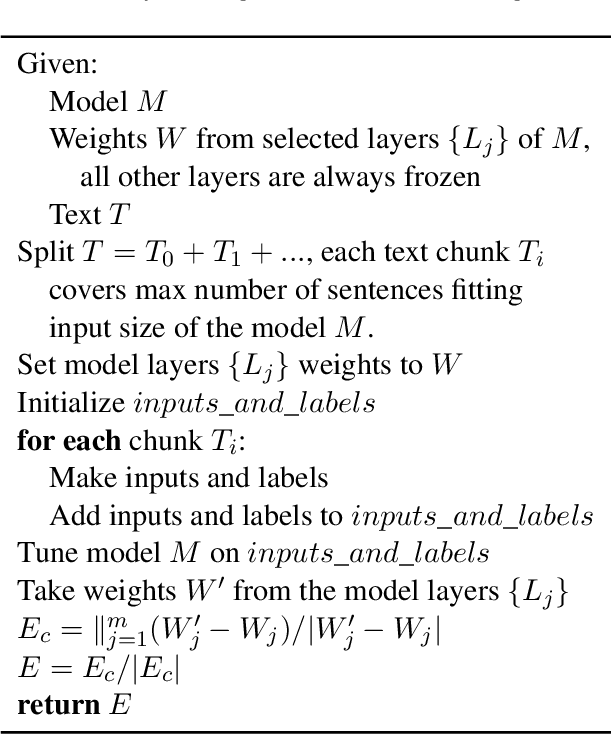

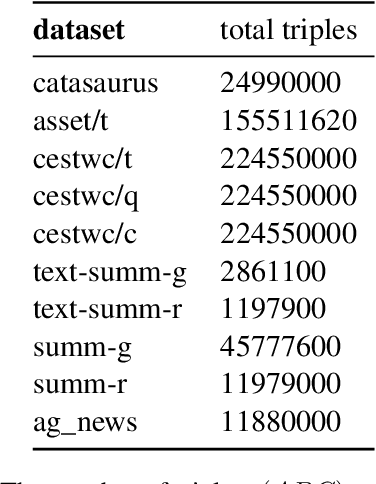

We propose a new kind of embedding for natural language text that deeply represents semantic meaning. Standard text embeddings use the vector output of a pretrained language model. In our method, we let a language model learn from the text and then literally pick its brain, taking the actual weights of the model's neurons to generate a vector. We call this representation of the text a neural embedding. The technique may generalize beyond text and language models, but we first explore its properties for natural language processing. We compare neural embeddings with GPT sentence (SGPT) embeddings on several datasets. We observe that neural embeddings achieve comparable performance with a far smaller model, and that the two methods make different errors.
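To make the idea concrete, here is a minimal sketch of a weight-based embedding, not the paper's implementation. It assumes a toy character-level bigram model in place of a real pretrained language model, an all-zero weight matrix as a stand-in for the shared pretrained starting point, and plain gradient descent as the "learning from the text" step. The names `neural_embedding`, `cosine`, `VOCAB`, and the hyperparameters `epochs` and `lr` are all hypothetical and chosen only for illustration.

```python
import math

# Toy 27-symbol vocabulary (an assumption; a real model would use a tokenizer).
VOCAB = "abcdefghijklmnopqrstuvwxyz "
INDEX = {ch: i for i, ch in enumerate(VOCAB)}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def neural_embedding(text, epochs=5, lr=0.5):
    """Fine-tune a toy bigram model on `text` and return its flattened weights."""
    n = len(VOCAB)
    # Shared starting point standing in for pretrained weights (assumption: zeros).
    weights = [[0.0] * n for _ in range(n)]
    ids = [INDEX[ch] for ch in text.lower() if ch in INDEX]
    for _ in range(epochs):
        for prev, nxt in zip(ids, ids[1:]):
            probs = softmax(weights[prev])
            # Gradient of cross-entropy w.r.t. the logits is probs - onehot(nxt).
            for j in range(n):
                grad = probs[j] - (1.0 if j == nxt else 0.0)
                weights[prev][j] -= lr * grad
    # The embedding is the model's weights themselves, not an output activation.
    return [w for row in weights for w in row]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


if __name__ == "__main__":
    a = neural_embedding("the cat sat on the mat")
    b = neural_embedding("the cat sat on the hat")
    c = neural_embedding("zzz qqq xxx jjj")
    print("similar texts:   ", cosine(a, b))
    print("dissimilar texts:", cosine(a, c))
```

Because every text starts from the same weights, differences between the resulting vectors reflect only what the model learned from each text, which is the property the toy is meant to illustrate. Which layers to read out and how to train on a real language model are left open here.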

* 7 pages, 4 figures, 4 tables

View paper on OpenReview