Neural Architecture Construction using EnvelopeNets

Paper and Code

May 22, 2018

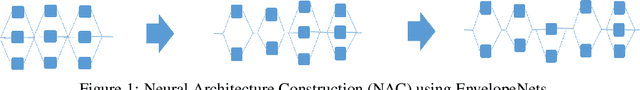

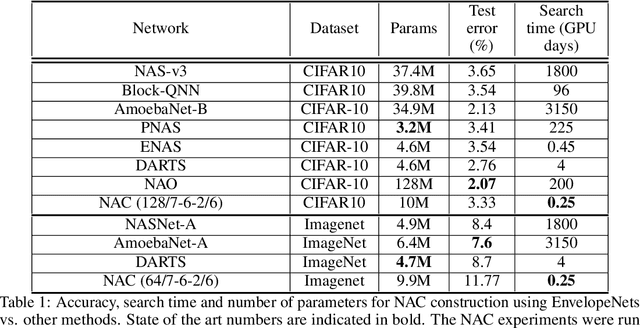

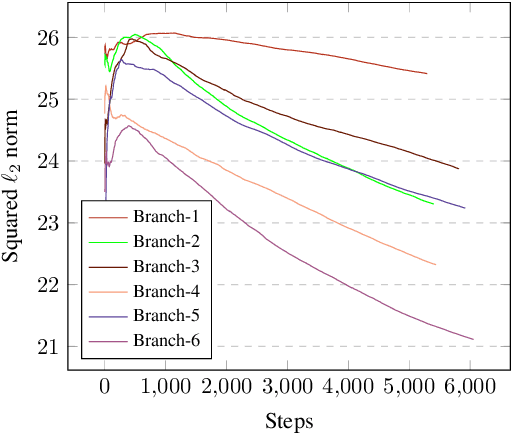

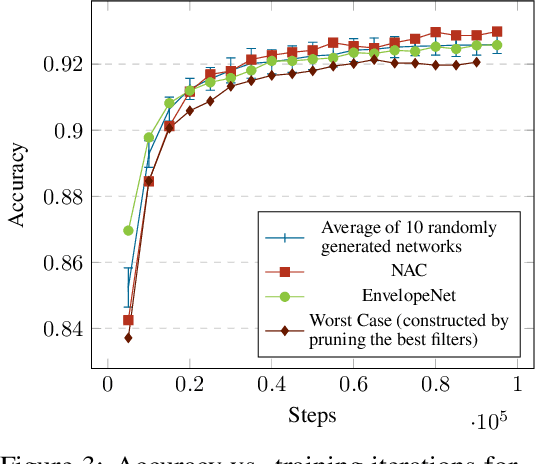

In recent years, several automated search methods for neural network architectures have been proposed, based on techniques such as evolutionary algorithms and reinforcement learning. These methods use an objective function (usually accuracy) that is evaluated after a full training and evaluation cycle. We show that statistics derived from filter feature maps reach a state where the utility of different filters within a network can be compared, and hence can be used to construct networks. The number of training epochs needed for filters within a network to reach this state is much smaller than the number needed for the accuracy of a network to stabilize. EnvelopeNets is a construction method that exploits this finding to design convolutional neural networks (CNNs) in a fraction of the time needed by conventional search methods. The constructed networks show close to state-of-the-art performance on image classification on well-known datasets (CIFAR-10, ImageNet) and consistently outperform hand-constructed and randomly generated networks of the same depth, with the same operators and approximately the same number of parameters.
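The idea of comparing filters by a feature map statistic can be illustrated with a minimal sketch. The abstract does not say which statistic is used, so the choice of per-filter variance here is an assumption, as are the function names `filter_utility` and `lowest_utility_filters` and the channels-last array layout. This is not the authors' implementation.

```python
import numpy as np

def filter_utility(featuremaps):
    """Return one score per filter.

    featuremaps: array of shape (batch, height, width, filters).
    The score is the variance of each filter's activations over the
    batch and spatial axes (an assumed statistic, for illustration).
    """
    fm = np.asarray(featuremaps, dtype=np.float64)
    # Collapse batch and spatial axes so each column holds one filter.
    per_filter = fm.reshape(-1, fm.shape[-1])
    return per_filter.var(axis=0)

def lowest_utility_filters(featuremaps, k):
    """Indices of the k filters with the smallest score."""
    scores = filter_utility(featuremaps)
    return np.argsort(scores, kind="stable")[:k].tolist()

# Synthetic activations: filter 1 barely responds, filter 2 responds strongly.
rng = np.random.default_rng(0)
fm = rng.normal(size=(8, 4, 4, 3))
fm[..., 1] *= 0.01
fm[..., 2] *= 5.0

candidates = lowest_utility_filters(fm, k=1)
```

In a construction loop of the kind the abstract describes, a ranking like this could be computed after a few training epochs to decide which filters to keep, without waiting for accuracy to stabilize.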
