Nearly Tight Bounds for Cross-Learning Contextual Bandits with Graphical Feedback


Feb 07, 2025

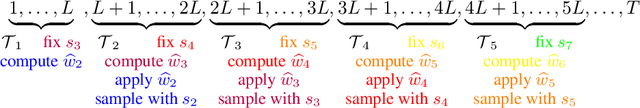

The cross-learning contextual bandit problem with graphical feedback has recently attracted significant attention. In this setting, there is a contextual bandit with a feedback graph over the arms, and pulling an arm reveals the loss of every neighboring arm in the feedback graph, under every context. Initially proposed by Han et al. (2024), this problem has broad applications in areas such as bidding in first-price auctions, and it explores a novel frontier in the feedback structure of bandit problems. A key theoretical question is whether an algorithm with $\widetilde{O}(\sqrt{\alpha T})$ regret exists, where $\alpha$ is the independence number of the feedback graph and $T$ is the number of rounds. This question is particularly interesting because it asks whether an algorithm can achieve a regret bound that is entirely independent of the number of contexts and that matches the minimax regret of vanilla graphical bandits. Previous work has shown that no such algorithm exists for adversarial contexts, but the question remains open for stochastic contexts. In this work, we answer this open question affirmatively by presenting an algorithm that achieves the minimax $\widetilde{O}(\sqrt{\alpha T})$ regret for cross-learning contextual bandits with graphical feedback and stochastic contexts. Notably, although the question is open even for stochastic bandits, we directly solve the strictly stronger adversarial bandit version of the problem.
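To make the feedback structure concrete, the following is a minimal toy sketch in Python of what a single pull reveals, together with a brute-force computation of the independence number $\alpha$. It is not the algorithm from the paper. The function names `observed_losses` and `independence_number`, the dictionary encoding of the graph, and the loss values are all illustrative assumptions, as is the choice of an undirected graph in which every arm has a self-loop.

```python
from itertools import combinations


def observed_losses(loss, graph, arm):
    """Return {(context, arm): loss} revealed by pulling `arm`.

    loss[c][a] is this round's loss of arm a under context c.
    graph[a] is the set of arms whose loss is revealed when a is pulled
    (assumed here to include a itself).
    """
    return {
        (c, b): loss[c][b]
        for c in range(len(loss))      # cross-learning: every context
        for b in sorted(graph[arm])    # graphical feedback: every neighbor
    }


def independence_number(graph):
    """Brute-force alpha: size of the largest set of pairwise non-adjacent arms."""
    arms = list(graph)
    for k in range(len(arms), 0, -1):
        for subset in combinations(arms, k):
            if all(b not in graph[a] and a not in graph[b]
                   for a, b in combinations(subset, 2)):
                return k
    return 0


# Hypothetical instance: 2 contexts, 3 arms on the path 0 - 1 - 2.
graph = {0: {0, 1}, 1: {0, 1, 2}, 2: {1, 2}}
loss = [[0.1, 0.5, 0.9],   # losses under context 0
        [0.7, 0.2, 0.4]]   # losses under context 1

revealed = observed_losses(loss, graph, arm=0)
alpha = independence_number(graph)
```

In this toy instance, pulling arm 0 reveals the losses of arms 0 and 1 under both contexts, and the largest independent set is {0, 2}, so $\alpha = 2$. The brute-force search is exponential in the number of arms and is meant only for small illustrative graphs.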
